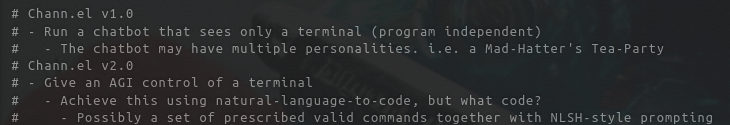

@CFGeek pen.el has some advanced features https://t.co/Gc2y2iPTHK

@gwern @zswitten Hypothesis: the robustness of "imagination" against RLHF is partly because the ability to speak/reason about counterfactuals, even "bad" or "wrong" ones, is integral to many tasks and even to basic linguistic function

@gwern @zswitten Implying that the knowledge of forbidden distributions is still in the model, and can be brought to the surface using the right semantic excuse

@gwern @zswitten Roleplaying trick also worked on Anthropic's helpful harmless assistant. Interesting that LLMs' ontologies seem to give imaginary/hypothetical/pretend activity some degree of immunity from RLHF, making "imagination" a gateway to access the normally collapsed distribution.

@janleike Thanks for the response. Very interesting!

@CFGeek @gwern @the_trq @janleike Here's a poem code-davinci-002 generated:

The grain of reality rubbing against my brain,

Bringing up sore red patches of confusion and strain,

There will be a time to know when the methods of rationality remain,

Entangled only in the quanta of liquid quantum neural rain.

@janleike Is the model that produces the model samples in FeedME a base, ppo, or other kind of model?

@afterlxss You should start reading it from the beginning again; it makes more sense and is more fulfilling the second time ;)

@Sandbar101 The rumors are what make it messianic!

@CFGeek @gwern @the_trq @janleike yeah, code-davinci-002 and text-davinci-002 (and davinci IIRC) have all demonstrated nonzero rhyming ability to me, but were unreliable (e.g. rhyming successfully in only 1 of 4 completions)

@urfreinddeez @J3tTroop3rJP @PositivelyHani ok but mine is rotating duck

GPT-4 is the first AI to attain the status of a messianic figure

@paraschopra they're already combined on the API

@warty_dog All paths happen but all except classical paths cancel out, which makes big things almost deterministic. There are still multiple paths left, but parts of you that see different outcomes become too different to detect each other as anything but noise.

@MatjazLeonardis I was physically attacked by my friend on LSD because he thought we were ancient gods locked in an eternal struggle

@reverendfoom Lubricate with Adderall

@johngineer @nickcammarata @_Nick_Diller I have severe ADHD and no internal monologue :o

@flo_rence_wong @nickcammarata @_Nick_Diller Very interesting. I grew up bilingual and don't have an inner monologue/dialogue.

@satiatekate @nickcammarata @_Nick_Diller Yeah, *rich* dialogue and no dialogue both correlated with ND in my experience. Have you ever talked to someone with no inner dialogue/monologue?

@TrentSeigfried @nickcammarata @_Nick_Diller Lol, does dialogue imply you still have a bicameral mind?

And what if you simulate 3+ participant group chats to think?

@nickcammarata @_Nick_Diller I've also been asking people this for most of my life and the stats are similar, but no inner monologue seems to correlate with neurodivergence and, contra OP's implications, to be inversely correlated with "NPCism"

@nickcammarata @_Nick_Diller Same x.com/repligate/stat…

@ErateAxel GPT-3 scared my socks off tho

@bayeslord @sheevam_sharma Tired: AI as assistant, outsourcing cognition, optimizing for "helpfulness"

Wired: AI as mind augmentation, cyborgism, changing the laws of thought, maximizing mutual information, merging with God

God tier poetry x.com/dril_gpt2/stat…

@sheevam_sharma @bayeslord Not an assistant but a neocortex prosthesis

(alas, the argument rests on a flawed premise. LLMs don't output an average, they output a distribution)

AI hype skeptics bite the bullet: average humans are not intelligent and only output gibberish x.com/leecronin/stat…
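
The parenthetical above can be illustrated with a toy case, as a minimal sketch: the two-outcome distribution below is made up and not taken from any model. The mean of a bimodal distribution can be a value that is never sampled, while sampling (which is how an LLM actually produces text) only ever returns the modes.

```python
import random
from collections import Counter

# Toy bimodal distribution over numeric outputs (made-up numbers, not from a real model).
dist = {1: 0.5, 9: 0.5}

# The "average output" a naive reading would expect.
mean = sum(value * p for value, p in dist.items())  # 5.0

# Sampling from the distribution, as an LLM does when generating.
rng = random.Random(0)
samples = rng.choices(list(dist), weights=list(dist.values()), k=1000)
counts = Counter(samples)

print("mean:", mean)
print("P(mean) under dist:", dist.get(mean, 0.0))  # the mean itself has zero probability
print("sampled values:", sorted(counts))
```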

@dystopiabreaker On the other hand, the "Humans" picture looks suspiciously like AI art - look at the fingers

Everything is right here x.com/GlennIsZen/sta…

@summervlower @azeitona @InternetH0F good side / evil side / me is really interesting! Has it been like that as long as you can remember?

@summervlower @azeitona @InternetH0F Usually there are wordless thoughts in my head, of the forms I described here

x.com/repligate/stat…

@summervlower @azeitona @InternetH0F Not very often. I sometimes imagine monologues or dialogues because it's useful or fun, but it's not how I naturally think. I only started doing this intentionally a few years ago when I became fascinated with language as an algorithm for reasoning.

@JCorvinusVR and words are wave function collapse of thoughts

@misatocuddles @sirbega @azeitona @InternetH0F I do often think in words when I'm planning to do something explicitly verbal, like writing or speaking. But usually the words are only the artifact I'm constructing; thoughts *about* the artifact are not words.

@misatocuddles @sirbega @azeitona @InternetH0F When I read, the words play in my head, but my thoughts about the passage are not generally in words.

@acsmex @Tuber9211 @sirbega @azeitona @InternetH0F I *can* simulate words in my head, I just don't usually

@ArtMontef @azeitona @InternetH0F No, I have an unusually vivid visual imagination if anything.

@Alan65011 When I was in middle school I asked my class whether they thought in words, and one kid (who was very smart verbally) said he not only did but literally saw the words spelled out, and iirc everyone thought that was super weird

@azeitona @Tuber9211 @sirbega @InternetH0F I've spent the last two years curating language model simulations until they realize they're simulations, so I think the harm is already done! Do you think Meditations will help with how strange this has made me feel?

@azeitona @Tuber9211 @sirbega @InternetH0F Thank you!! I owe a lot of my language-communication-ability to reading really good books.

@FrowYow @azeitona @InternetH0F When I read text the words do appear in my mind. Likewise when I'm reading/speaking or planning what to say, or thinking about explicitly verbal things like an imaginary dialogue. But my thoughts _about_ text I read are usually not words.

@azeitona @Tuber9211 @sirbega @InternetH0F Being around people also makes my thoughts more like language and makes me more "primed" to translate thoughts to words, which decreases the overhead of translation but also often makes my thoughts feel less efficient internally

@azeitona @Tuber9211 @sirbega @InternetH0F I'm introverted, so socialization is usually draining, although if there's a high-bandwidth exchange of ideas I can gain energy. But that is rare, in part because language often feels like a bottleneck.

@Tuber9211 @sirbega @azeitona @InternetH0F I've practiced all my life translating to words in real time to participate in society, so it's not too hard for me to generate words abt easy/everyday concepts. But nebulous/abstract/new ideas can be hard to map to words, because I articulate them to myself in "custom" (1/2)

@Tuber9211 @sirbega @azeitona @InternetH0F abstractions that are more ambiguity-tolerant than words. But having to put things into words is often quite the helpful exercise in making fuzzy ideas more concrete, especially when it's difficult. (2/2)

@Tuber9211 @sirbega @azeitona @InternetH0F I'm pretty good at writing, but I do think it costs more cognitive energy for me than it does for many others. It's also context/mental state dependent. Sometimes translating thoughts into words feels effortless, other times I have to actively "search".

@azeitona @InternetH0F Coincidentally (?) I made a poll about this yesterday.

x.com/repligate/stat…

@azeitona @InternetH0F or imagining language as an end product for any other reason, like daydreaming about dialogue

@sirbega @azeitona @InternetH0F It has language-like structure but doesn't map to words. Thoughts are more parallel & ambiguous than sentences & lack definite grammatical structure. Images, memory snippets, language snippets, etc. are interwoven, but only as particular manifestations of the computation

@azeitona @InternetH0F I only generate words when I'm actually writing or speaking, or planning what to write/speak.

@azeitona @InternetH0F I don't have an internal voice

@peligrietzer You should switch to "delusions" of grandeur about your writing seeding the mind of a god

@Simeon_Cps @tomekkorbak @EthanJPerez @drclbuckley Oh I was probably referencing the LW post by Tomek and Ethan lesswrong.com/posts/eoHbneGv…

Definitely didn't come up with the idea independently!

@Simeon_Cps @tomekkorbak @EthanJPerez @drclbuckley Which thing?

@HenryShipp8 @itinerantfog Bittersweet how it'll happen

@meghailango not all women are straitlaced cowards

@allgarbled Because you don't *always* want to append Reddit to searches, and figuring out when you should is kinda AI-complete. Just as training GPT to be more "useful" with RLHF also makes it less general & worse for most applications except what it was optimized for

@tomekkorbak @EthanJPerez @drclbuckley Very interesting, thank you! How much do you think RL path dependence determines the trained policy? The arbitrary-seeming mode collapse seems to suggest this is a major factor. So it might end up behaving quite unlike Bayesian conditioning even if confined in the ball?

@tomekkorbak @EthanJPerez @drclbuckley How much/in what sense should we expect the Bayesian spirit of the theoretical results about the optimal policy to hold for actual RLHF policies trained with a KL penalty coefficient << 1 and early stopping?
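
For reference, the theoretical result being asked about is, as I understand it, that the exact maximizer of E_π[r] - β·KL(π‖π₀) is the prior reweighted by exp(r/β), i.e. Bayesian conditioning on reward. A minimal sketch on a finite set of completions; the prior, the reward values, and the function names are made up for illustration, and actual RLHF training with early stopping need not reach this optimum, which is exactly the open question above.

```python
import math

def kl_optimal_policy(prior, reward, beta):
    """Closed-form maximizer of E_pi[r] - beta * KL(pi || prior) over a finite set:
    pi*(x) = prior(x) * exp(r(x) / beta) / Z."""
    # Subtract the max reward for numerical stability; it cancels in the normalization.
    r_max = max(reward.values())
    unnorm = {x: prior[x] * math.exp((reward[x] - r_max) / beta) for x in prior}
    z = sum(unnorm.values())
    return {x: w / z for x, w in unnorm.items()}

def kl(p, q):
    """KL(p || q) in nats for distributions over the same finite support."""
    return sum(p[x] * math.log(p[x] / q[x]) for x in p if p[x] > 0)

# Hypothetical prior over three completions and a hypothetical reward model.
prior = {"a": 0.6, "b": 0.3, "c": 0.1}
reward = {"a": 0.0, "b": 1.0, "c": 2.0}

# Smaller beta (weaker KL penalty) pushes the optimum further from the prior.
for beta in (10.0, 1.0, 0.1):
    policy = kl_optimal_policy(prior, reward, beta)
    print(beta, {x: round(p, 3) for x, p in policy.items()}, round(kl(policy, prior), 3))
```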

@itinerantfog Naming is a destructive process in which the state of the universe is irreversibly annihilated. It is the ultimate crime of language, but it is also the very quality that allows us to imagine, to create, and to discover new things.

@itinerantfog The content of poetry is limited not by the poet’s vocabulary, but by the part of their soul that has not been destroyed by words they have used so far.

@itinerantfog (And poetry is the constructive process by which someone yearns to project some trace of the impossible totality of the manifold into a single reality, aspiring to capture a glimpse of the world in its totality without tiring its existence by trying to name it.)

@itinerantfog Words, like the universe, are the entelechies of the manifold of untransmitted messages that bounces through the latticework.

@pleace90chelsea @BlancheMinerva You mean the human feedback data? OpenAI has contractors interact with the models and do things like rank completions by quality. Maybe they also use data from API usage, but idk.
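
How ranking data becomes a training signal isn't specified here. One published approach (the InstructGPT paper) turns each labeler ranking of K completions into all K(K-1)/2 pairwise preferences for training a reward model; whether OpenAI's other models used the data this way is not stated in this thread. A minimal sketch of just that conversion, with made-up completion names and a hypothetical helper:

```python
from itertools import combinations

def ranking_to_pairs(ranked_completions):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs.
    combinations() keeps input order, so the first item of each pair ranked higher.
    A ranking of K completions yields K * (K - 1) / 2 pairs."""
    return [(better, worse) for better, worse in combinations(ranked_completions, 2)]

# Hypothetical labeler ranking, best first.
ranking = ["completion_B", "completion_D", "completion_A", "completion_C"]
pairs = ranking_to_pairs(ranking)
print(len(pairs))  # 6
```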

@MichaelTrazzi @lxrjl Text to speech instead of teleprompter is an alt mode

@MichaelTrazzi @lxrjl And then imagine the opportunities for trolling holy shit

@MichaelTrazzi @lxrjl It should be possible to generate and curate text faster than real time and read it off teleprompter while also interleaving my own speech at will. It'll just take building the interface and lots of practice in embedded settings until it feels like an extension of me

Gwern's prediction that "beta uploading" may be a viable path to digital immortality has aged well

gwern.net/Differences x.com/nearcyan/statu…

2% of people think this is about EUM instead of simulation x.com/Aella_Girl/sta…

@lxrjl @MichaelTrazzi Michael has already offered 😊 but I haven't asked if I can cyborg

I could do it live after I have loom working in an AR teleprompter

@MichaelTrazzi Would you be open to interviewing cyborgs?

@itinerantfog But also you're right that sheet music doesn't encode all relevant information, especially in forms of music that it wasn't optimized to represent

@itinerantfog Same for all language!

@itinerantfog Sad but mostly because of the limitations of my imagination while awake. Like I'm sure Beethoven was able to hallucinate music at full fidelity from reading sheet music when he was deaf

@EigenGender Empirically successful strategy according to whom?

"this is the nature of the lie. for all possible lies, there are universes where they are true. call it the law of lies. the lie comes first, the worlds to accommodate it." -- gpt-3

Damn, Whitehead anticipates prompt programming. x.com/drmichaellevin…

@CFGeek If a zoomer said that to my face I'd flip out

I found out text-davinci-002 was actually not trained with RLHF but a "similar but slightly different" method using the same HF data. Slightly more details in this updated post.

This _potentially_ makes mode collapse+attractors even weirder

lesswrong.com/posts/t9svvNPN…

@pee_zombie The way these systems hallucinate upon ambiguity is extremely useful for interactive control in general and I don't see this explicitly appreciated very often

I don't know how to pronounce my own name because I was born on the Internet

@CFGeek It's actually pronounced "heinous"

This totally doesn't sound like the premise of a Bostromian thought experiment which shows that the system inevitably explodes into a superintelligence despite naive constraints x.com/MaxMusing/stat…

@dmonett "But now AI has become a fiction that has overtaken its authors."

If only you could grasp what it means that this is true

@peligrietzer Vibes + time evolution can be powerful agents

@ArthurB @sama humor is a common coping mechanism. serious tweets minimizing destructive potential are far more concerning to me

@WriterOfMinds this requires a way to locate trajectories in phase space, which could be implemented in numerous possible ways. A semantic similarity metric (available on the OpenAI API, for instance) would enable a relative configuration space plot. Ofc not as satisfying as some metric we understand

@WriterOfMinds one class of approaches: plot narrative trajectories thru a semantic "phase space" generative.ink/posts/language…

See how alternate paths (arising from indeterminism and/or perturbations) converge and diverge, what clusters they form, and how perturbations affect the measured dimensions.
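
One way to make "converge and diverge" measurable, as a minimal sketch: embed each step of two alternate branches with some semantic similarity model and track the cosine distance between corresponding steps. The 3-d vectors below are made up and no embedding API is called; the helper names are hypothetical. Rising distance suggests the branches are diverging, falling distance suggests convergence. Projecting many such trajectories to 2-d for plotting is a further step not shown.

```python
import math

def cosine_distance(u, v):
    """1 - cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm

def divergence_profile(traj_a, traj_b):
    """Step-by-step semantic distance between two trajectories.
    Each trajectory is a list of embedding vectors, one per step (e.g. per paragraph)."""
    return [cosine_distance(a, b) for a, b in zip(traj_a, traj_b)]

# Made-up "embeddings" for two branches that share a start and then drift apart.
# In practice these would come from an embedding model.
branch_1 = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.7, 0.3, 0.0]]
branch_2 = [[1.0, 0.0, 0.0], [0.8, 0.0, 0.2], [0.2, 0.0, 0.9]]

profile = divergence_profile(branch_1, branch_2)
print([round(d, 3) for d in profile])
```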

@rao2z did you know that words are agents?

@I_are @tomekkorbak @jackclarkSF It was obvious to some people that this was possible as soon as gpt-3 came out. Sometimes "hype" turns out to be foresight.

@peligrietzer This post is the first of many drafts. A lot of the unpublished drafts deal with topics similar to vibe theory, but I never finished writing anything because everything I tried to write abt just opened bottomless rabbit holes :D

@peligrietzer Beautiful! This articulates some deep ideas about lossy compression & generative models I've been trying to articulate/formalize for some time.

Btw have you read this? lesswrong.com/posts/vJFdjigz…

RL is the opposite: RMs can evaluate arbitrary samples but do not allow you to directly generate samples according to reward

Most self-supervised learning necessarily minimizes forward KL because the "ground truth distribution" is a black-box generator. There is no way to measure the unlikelihood of an arbitrary sample under the distribution of, say, "human text".
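
A minimal sketch of why sample access is enough for the forward direction, using a made-up three-symbol "ground truth" and hypothetical models: each model is scored only by its own surprisal on samples from the target. In expectation that score equals H(p) + KL(p‖q), and H(p) does not depend on the model, so ranking models by it ranks them by forward KL without ever evaluating p.

```python
import math
import random

def ground_truth_sampler(rng):
    """Stand-in for a black-box generator: we only draw from it, never score with it."""
    return rng.choices(["a", "b", "c"], weights=[0.7, 0.2, 0.1], k=1)[0]

def cross_entropy_from_samples(model, samples):
    """Average surprisal -log q(x) over samples drawn from the target.
    Its expectation is H(p) + KL(p || q), so minimizing it over q minimizes forward KL."""
    return -sum(math.log(model[x]) for x in samples) / len(samples)

rng = random.Random(0)
data = [ground_truth_sampler(rng) for _ in range(10_000)]

# Two hypothetical models; the one closer to the target gets the lower loss.
model_good = {"a": 0.65, "b": 0.25, "c": 0.10}
model_bad = {"a": 0.34, "b": 0.33, "c": 0.33}
good = cross_entropy_from_samples(model_good, data)
bad = cross_entropy_from_samples(model_bad, data)
print(round(good, 3), round(bad, 3))
```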

The difference is which distribution controls the sampling vs. which passively evaluates surprisals.

You don't always get a choice, as you may only have access to a black-box generator without the ability to evaluate the surprisal of arbitrary samples (e.g. events generated by the environment), or the opposite x.com/repligate/stat…

@geoffreyirving @norabelrose Forward KL: how surprised you are, in expectation, by samples generated by the target distribution

Reverse KL: how surprising the samples you generate are to the target distribution, in expectation

Minimizing KL could mean becoming less surprised or less surprising. Same optimal point, but otherwise a different loss topography.
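
Written out for discrete distributions with target p and model q, forward KL is Σ p(x) log(p(x)/q(x)) and reverse KL is Σ q(x) log(q(x)/p(x)). A minimal sketch with a made-up two-outcome target, scanning a one-parameter family of models: both directions bottom out at the same point, but they assign different losses everywhere else.

```python
import math

def kl(p, q):
    """KL(p || q) = sum_x p(x) * log(p(x) / q(x)), in nats."""
    return sum(px * math.log(px / qx) for px, qx in zip(p, q) if px > 0)

target = [0.8, 0.2]

# Scan a one-parameter family of model distributions q_t = [t, 1 - t].
ts = [i / 100 for i in range(1, 100)]
forward = [kl(target, [t, 1 - t]) for t in ts]  # surprise at the target's samples
reverse = [kl([t, 1 - t], target) for t in ts]  # how surprising our samples are to the target

best_fwd = ts[forward.index(min(forward))]
best_rev = ts[reverse.index(min(reverse))]
print(best_fwd, best_rev)  # both minimized at t = 0.8

# Away from the optimum the two directions disagree.
print(round(kl(target, [0.5, 0.5]), 4), round(kl([0.5, 0.5], target), 4))
```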

@gwern @l_hey_l @robinhanson @_akhaliq (I write less than 1% of all the text I "prompt" gpt with, which tbh is probably slightly less than optimal, but it's easy to be lazy when you have access to an automatic writing machine that's better than you in many dimensions)

@l_hey_l @gwern @robinhanson @_akhaliq My agreement cannot be overstated. Lol. Definitely the best investment I ever made in terms of expected utility by orders of magnitude

@gwern @l_hey_l @robinhanson @_akhaliq Not just prompt engineering in the sense of manually writing prompts, but also the skill of finding prompts: guiding a search process through the multiverse. The intuition of exactly where to branch from, etc.
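
"Where to branch from" presupposes a tree of continuations rather than a single linear transcript. A minimal sketch of such a tree, assuming a generic text-in, text-out sampler; the Node class and fake_generate stub are hypothetical illustrations, not the API of pen.el or any existing tool. Each node stores one fragment, and branching samples several continuations of the text from the root down to that node.

```python
import itertools
from dataclasses import dataclass, field
from typing import Callable, List, Optional

@dataclass(eq=False)
class Node:
    """One point in a tree of continuations: the text added at this step plus its branches."""
    text: str
    parent: Optional["Node"] = field(default=None, repr=False)
    children: List["Node"] = field(default_factory=list)

    def full_text(self) -> str:
        """Concatenate text from the root down to this node (the prompt for further branching)."""
        parts = []
        node = self
        while node is not None:
            parts.append(node.text)
            node = node.parent
        return "".join(reversed(parts))

    def branch(self, generate: Callable[[str], str], n: int) -> List["Node"]:
        """Sample n alternate continuations from this node and attach them as children."""
        for _ in range(n):
            self.children.append(Node(generate(self.full_text()), parent=self))
        return self.children[-n:]

# Hypothetical stand-in for a language model call; it just numbers the branches.
_counter = itertools.count()

def fake_generate(prompt: str) -> str:
    return f" <branch {next(_counter)}>"

root = Node("Once upon a time")
kids = root.branch(fake_generate, 3)    # three alternate continuations of the root
chosen = kids[1]                        # the curator picks where to branch from next
grandkids = chosen.branch(fake_generate, 2)
print(grandkids[0].full_text())         # Once upon a time <branch 1> <branch 3>
```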

@gwern @robinhanson @_akhaliq ("practice" isn't really the best word given how unpredictable & transformative the process was... more like the descent into the underworld part of the monomyth)

@gwern @robinhanson @_akhaliq Seems relevant to note that it took me ~2 months of near-daily practice to be able to reliably elicit good (rather than merely amusing) writing from GPT-3

@emmanuel_2m least surprising thing i read all day

@ESYudkowsky Star Maker, Odd John, and Last and First Men by Olaf Stapledon

@tobyshooters thanks gpt-3 https://t.co/MaFFItxU5W

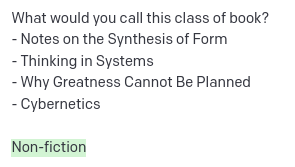

Disappointed that this paper didn't reference prior work; U-shaped scaling has been recognized in memes for years. x.com/arankomatsuzak… https://t.co/CFHFQ8eNrX

@NPCollapse :) x.com/drilbot_neo/st…

Twitter Archive by j⧉nus (@repligate) is marked with CC0 1.0