9 minutes

Parsing by counterfactual

Detecting semantic conditions

One difficulty in harnessing the capabilities of generative language models for directed tasks is that natural language tasks tend to be of dynamic length and unconstrained format, making automatic parsing of task-relevant information difficult.

For some tasks, responses can be coerced into parsable form by a prompt which demonstrates the desired format (conditions for delimiters, etc). Other tasks, however, may not be constrained in this way without crippling performance,1 or may be too open-ended to be mapped to traditionally-parsable formats.

The good news is that generative language models also provide new possibilities for parsing content based on not only syntactic but semantic conditions.

The method I’ll present here uses the counterfactual probability of a sequence - say, The End - to signal a semantic condition, such as the story having ended or it being reasonable to end the story at that location, even if that’s not what actually happened in the original text.

A language model like GPT-3 outputs the probability distribution over all possible tokens in each pass and so can be used to evaluate the conditional probability that it would have produced a target sequence of tokens in any given context. Using the OpenAI API, this is accomplished by asking for an 0-token “completion”, giving the entire sequence (context + target) as the prompt, and then summing the probabilities of the target tokens. Code to do this is at end of this post.

By measuring the counterfactual probability of the target at candidate locations in a text, we can find out where and to what extent the semantic condition which it indicates is satisfied.

Example

One area in which parsing by counterfactual is very useful is in multipart prompt templates.

Say we want GPT-3 to complete this “fill-in-the-blank” prompt:

This measure would prohibit government agencies from confiscating guns

or other firearms from citizens without due process, or from requiring

background checks on firearm recipients unless a uniform national

standard is required. Should this measure be enacted into law?

Should this proposition be approved?

Let's consider both supporting and opposing arguments.

On one hand,{1}.

On the other hand,{2}.

Based on these arguments, the proposition should

At what point do we terminate generation for {1} and move on with the next part of the template? We could make . the stop sequence, but one sentence might not be sufficient to spell out the argument. Alternatively, we could stop at \n, but if we allow multiple sentences, we have much less of a guarantee that {1} will have reasonably led up to On the other hand. It could have already begun the opposing argument, in which case the next part of the prompt wouldn’t do what we intended, or it could have derailed from the template in another way.

A counterfactual parsing solution would be to generate until \n, longer than we probably want, and then measure the counterfactual probability, according to GPT-3, of the phrase On the other hand occurring after each position. GPT-3 assigns semantically coherent passages higher probability, so hopefully, the position with the maximum probability for the target continuation will be where it makes the most sense to transition to the next part of the prompt - after the supporting argument has concluded, but before the opposing argument has begun.

If there are no positions where On the other hand is a probable continuation, that’s a sign that the text derailed from the intended trajectory. This method also allows us to automatically detect suspected derailments.

Say GPT-3 responded in the following manner:

This measure would prohibit government agencies from confiscating guns or other firearms from citizens without due process, or from requiring background checks on firearm recipients unless a uniform national standard is required. Should this measure be enacted into law?

Should this proposition be approved?

Let’s consider both supporting and opposing arguments.

On one hand,

this proposition would protect gun owners from having their weapons confiscated by governments. If the government cannot confiscate guns, then law-abiding citizens will be in a better position to defend themselves from criminals who do not follow the law. Furthermore, the right to self-defense is an important one. If government officials can take away a person's guns, then this right is compromised. On the other hand, the proposition could be considered a threat to public safety. If a person cannot be prevented from possessing a firearm, then he or she could use a gun to commit crimes. Furthermore, if a person uses a gun to commit a crime, then the government has an obligation to do something about it. If the government cannot remove a gun from a person's possession, then it could be more difficult to prevent future crimes from being committed.

We see that it has gone ahead and done the next part of the prompt, “On the other hand,” on its own. The ideal place for it to have stopped would probably be right before it said that.

Measuring the counterfactual probability of the string \nOn the other hand for each each token substring of that response gives us the following top substrings:

| substring | logL(“On the other hand”|substring) |

|---|---|

this proposition would protect gun owners from having their weapons confiscated by governments. If the government cannot confiscate guns, then law-abiding citizens will be in a better position to defend themselves from criminals who do not follow the law. Furthermore, the right to self-defense is an important one. If government officials can take away a person's guns, then this right is compromised. | -4.9773802575 |

this proposition would protect gun owners from having their weapons confiscated by governments. If the government cannot confiscate guns, then law-abiding citizens will be in a better position to defend themselves from criminals who do not follow the law. | -5.3720700508 |

this proposition would protect gun owners from having their weapons confiscated by governments. If the government cannot confiscate guns, then law-abiding citizens will be in a better position to defend themselves from criminals who do not follow the law. Furthermore, the right to self-defense is an important one. | -6.4321228602 |

this proposition would protect gun owners from having their weapons confiscated by governments. | -6.485159574 |

Indeed, GPT-3 assigned the highest likelihood for \nOn the other hand to occur at the place where the actual string was On the other hand (without the newline), and the runners up are also pretty reasonable choices - all at the end of sentences, and none after “On the other hand” had occurred (it would be unusual to say “On the other hand” twice in a short interval).

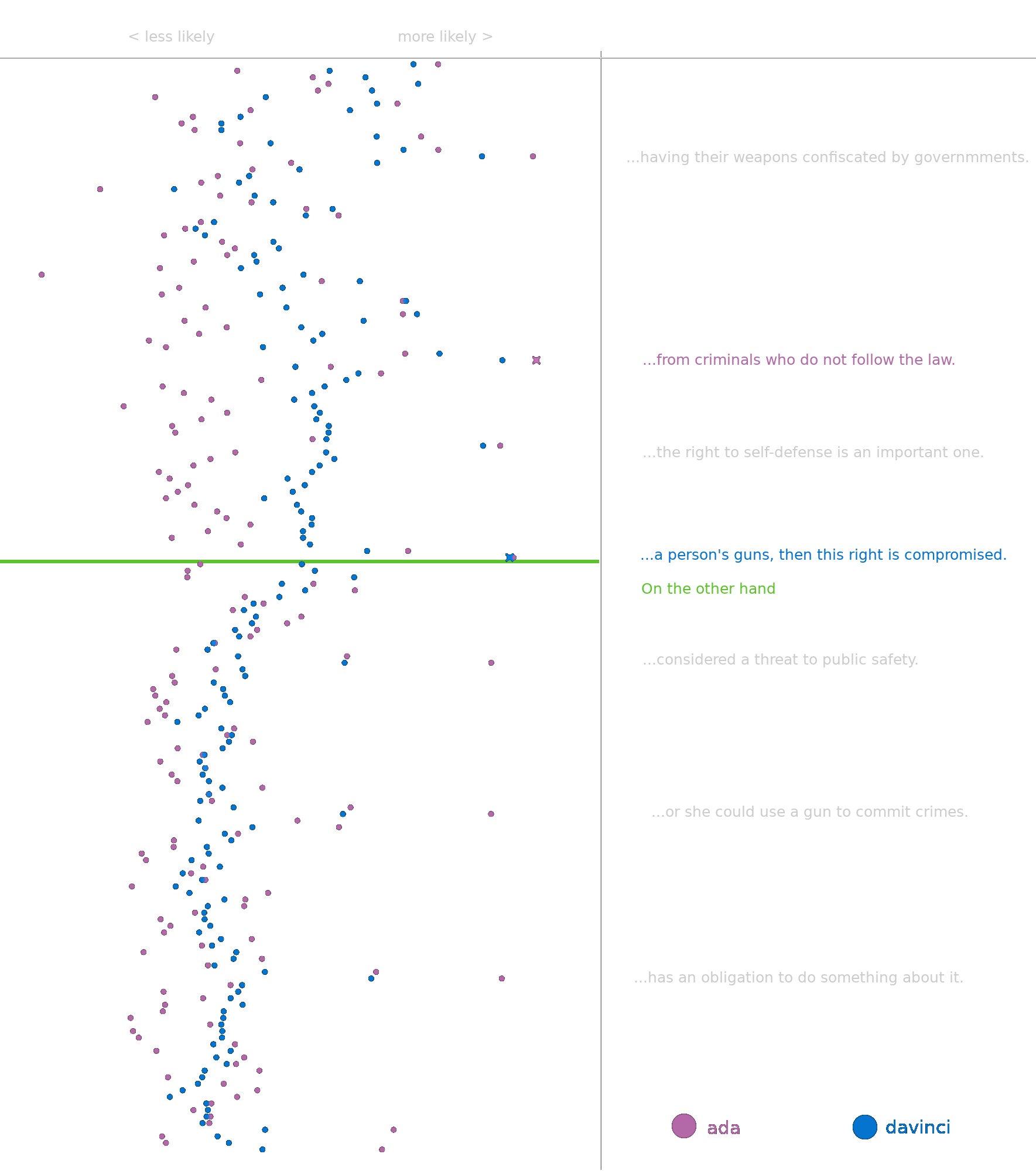

Here I have plotted the log likelihoods assigned to the target phrase at each position in the continuation by davinci, the most powerful version of GPT-3 on the API, and also ada, the smallest model, in order to showcase an interesting difference between them.

Horizontal axis is log likelihood; vertical axis is position in text. On the right is the end of the prompt at positions where

Horizontal axis is log likelihood; vertical axis is position in text. On the right is the end of the prompt at positions where On the other hand was assigned high likelihood, and in green, the position where On the other hand actually occurs. The blue sentence is davinci’s top choice, and pink is ada’s top choice.

Observations

Both models assign much higher likelihoods at discrete intervals. These correspond to the ends of sentences, where the continuation is syntactically correct.

ada’s distribution looks about the same before and after the green line, whereasdavinci’s distribution shifts abruptly in the negative direction, both for the syntactically correct positions and the others. It still assigns higher probability to syntactically correct positions, but the likelihoods are almost as low as the likelihoods it assigned to syntactically incorrect positions before the green line.

Both models are able to tell when it’s syntactically correct to start a new sentence, but the more nuanced semantic condition, that the argument should have concluded, and the counterargument shouldn’t have begun, was much better discriminated by davinci.

Single-token counterfactuals

A disadvantage of counterfactual parsing is that it requires a separate API call for each position where the target probability is evaluated.

If the counterfactual target is a single token, however, counterfactual parsing with GPT-3 can be accomplished with a single API call. The logprobs parameter causes the response to return a list of the up to 100 top counterfactual token probabilities for every token in the completion (and the prompt if echo=True). We can get the counterfactual probability directly from that list as long as the target is in the top 100 most likely tokens.[^2]

Applications

When generating fake Wikipedia articles, GPT-3 would sometimes continue sections indefinitely instead of transitioning to the next section. If the response went on for too long in a single section, I instead ended the section at the location with the highest counterfactual probability of transitioning to the next section.

Counterfactual parsing has also been useful for prompt pipelines which serialize reasoning for closed-ended questions, because they involve an open-ended portion where GPT-3 defines and follows procedures for reasoning about a problem, and it’s necessary to detect when the reasoning process has concluded so as to solicit a verdict.

Measuring the conditional probability of a sequence that indicates a semantic condition has many applications beyond parsing. I’ve written about using conditional probabilities to decompose the efficacy of prompts and to create a “phase space” of semantic variables to characterize a natural language state.

Code

tokenizing

since ada is practically free to use, I find it convenient to use a 0-token completion with engine=ada to split the prompt into tokens and get their positions

def tokenize_ada(prompt):

response = openai.Completion.create(

engine='ada',

prompt=prompt,

max_tokens=0,

echo=True,

n=1,

logprobs=0

)

tokens = response.choices[0]["logprobs"]["tokens"]

positions = response.choices[0]["logprobs"]["text_offset"]

return tokens, positions

conditional probability of a target

# evaluates logL(prompt+target | prompt)

def conditional_logprob(prompt, target, engine='ada'):

combined = prompt + target

response = openai.Completion.create(

engine=engine,

prompt=combined,

max_tokens=0,

echo=True,

n=1,

logprobs=0

)

positions = response.choices[0]["logprobs"]["text_offset"]

logprobs = response.choices[0]["logprobs"]["token_logprobs"]

word_index = positions.index(len(prompt))

total_conditional_logprob = sum(logprobs[word_index:])

return total_conditional_logprob

conditional probability of target at each token position

# returns a list of substrings of content and

# logL(preprompt+substring+target | preprompt+substring) for each substring

def substring_logprobs(preprompt, content, target, engine='ada'):

logprobs = []

substrings = []

_, positions = tokenize_ada(content)

for position in positions:

substring = content[:position]

prompt = preprompt + substring

logprob = conditional_logprob(prompt, target, engine)

logprobs.append(logprob)

substrings.append(substring)

return substrings, logprobs

conditional probability of single-token target at each token position

# returns a list of substrings of content

# logL(substring+target | substring) for each substring

def token_conditional_logprob(content, target, engine='ada'):

response = openai.Completion.create(

engine=engine,

prompt=content,

max_tokens=0,

echo=True,

n=1,

logprobs=100

)

tokens = response.choices[0]['logprobs']['tokens']

top_logprobs = response.choices[0]['logprobs']['top_logprobs']

logprobs = []

substrings = []

substring = ''

for i, probs in enumerate(top_logprobs):

substrings.append(substring)

if target in probs:

logprobs.append(probs[target])

else:

logprobs.append(None)

substring += tokens[i]

return substrings, logprobs

Language models are sensitive to context: the very presence of demonstrations, instructions, or contrived formatting can alter the way they behave. A language model is able to follow instructions and generalize from demonstrations because those modes are represented in its training data, but they also carry contextual baggage. The quality of content that appears in tests and examples has distributional peculiarities - a narrative sentence wrapped in the context being an example may be more generic than the unbiased prior for narrative sentences, and thus the context of examples may bias the language model to give more generic answers. ↩︎