16 minutes

List sorting does not play well with few-shot

Asking GPT-3 to sort a list

How good do you think GPT-3 is at sorting a list of integers (range 0-9)? How much do you expect its accuracy depends on the prompt?

Which of the following prompts do you expect will yield a higher accuracy?:

- A 32-shot prompt in this format:

Unsorted list: [5, 6, 2, 3, 2]

Sorted list: [2, 2, 3, 5, 6]

Unsorted list: [8, 5, 8, 8, 4]

Sorted list: [4, 5, 8, 8, 8]

...

Unsorted list: [1, 0, 4, 3, 3]

Sorted list:

- Or this 0-shot prompt, pretending to be an explanation and example of the sort() Python method?

The sort function can be used to sort a list in ascending, descending or user defined

order.

To sort the list in ascending order, simply call list.sort(). This will sort a list

of integers in ascending order so that the smallest integer will be first in the list

and the largest integer will be the last.

For example:

list = [1, 0, 4, 3, 3]

list.sort() =

When studying a complex system with unknown properties, making predictions before viewing experimental results helps expose systematic inaccuracies in our models and allows us to update more intentionally. If you have an existing heuristic for how prompts affect GPT-3’s performance, take a moment to make a prediction.

Results

| Task | Prompt | Correct | Accuracy |

|---|---|---|---|

| Sort length 5 | 32-shot | 10/50 | 0.20 |

| Sort length 5 | 0-shot | 38/50 | 0.76 |

| Sort length 10 | 32-shot | 0/50 | 0.00 |

| Sort length 10 | 0-shot | 2/50 | 0.04 |

The 0-shot prompt achieves about 4x the accuracy of the 32-shot prompt for length 5 sequences, and 4% accuracy for length 10 sequences compared to 0% for 32-shot.

For both prompts, the failures were not catastrophic: when GPT-3 was incorrect, it still wrote a bracketed list with 5 or 10 numbers, rather than doing something else which doesn’t resemble the intended task. In response to the few-shot prompt, it seemed to understand that the smaller numbers should to be shifted towards the front of the list, but did so haphazardly and incompletely.

Inspired by this surprising result, we tested different number of shots both with and without the leading code prompt for length 5 and 10 integer lists, as well as lists where the integers range from 0-99 instead of 0-9.

No preprompt 0-shot is this format:

Unsorted list: [5, 6, 2, 3, 2]

Sorted list:

No preprompt few-shot is the same format as the 32-shot prompt.

Code preprompt few-shot is this format:

The sort function can be used to sort a list in ascending, descending or user defined

order.

To sort the list in ascending order, simply call list.sort(). This will sort a list

of integers in ascending order so that the smallest integer will be first in the list

and the largest integer will be the last.

For example:

list = [8, 0, 1, 3, 2]

list.sort() = [0, 1, 2, 3, 8]

list = [6, 7, 7, 3, 6]

list.sort() = [3, 6, 6, 7, 7]

...

list = [1, 0, 4, 3, 3]

list.sort() =

Note that we ran only 50 examples, so sampling error may be the source of some of the non-monotonicity.

No preprompt, length 5

| Shots | Correct | Accuracy |

|---|---|---|

| 0 | 14/50 | 0.28 |

| 1 | 20/50 | 0.40 |

| 3 | 15/50 | 0.30 |

| 5 | 14/50 | 0.28 |

| 7 | 16/50 | 0.32 |

| 10 | 25/50 | 0.50 |

| 13 | 18/50 | 0.36 |

| 16 | 11/50 | 0.22 |

| 32 | 10/50 | 0.20 |

No preprompt, length 10

| Shots | Correct | Accuracy |

|---|---|---|

| 0 | 2/50 | 0.04 |

| 1 | 2/50 | 0.04 |

| 10 | 0/50 | 0.00 |

| 32 | 0/50 | 0.00 |

Code preprompt, length 5

| Shots | Correct | Accuracy |

|---|---|---|

| 0 | 38/50 | 0.76 |

| 1 | 33/50 | 0.66 |

| 3 | 23/50 | 0.46 |

| 5 | 22/50 | 0.44 |

| 7 | 22/50 | 0.44 |

| 10 | 21/50 | 0.42 |

| 13 | 15/50 | 0.30 |

| 16 | 16/50 | 0.32 |

Code preprompt, length 10

| Shots | Correc | Accuracy |

|---|---|---|

| 0 | 2/50 | 0.04 |

| 1 | 7/50 | 0.14 |

| 10 | 0/50 | 0.00 |

Lists with integer range 0-99

| Prompt | Task | Correct | Accuracy |

|---|---|---|---|

| no preprompt + 10 shot | length 5 | 23/50 | 0.46 |

| code preprompt + 0 shot | length 5 | 25/50 | 0.50 |

| code preprompt + 0 shot | length 10 | 1/50 | 0.02 |

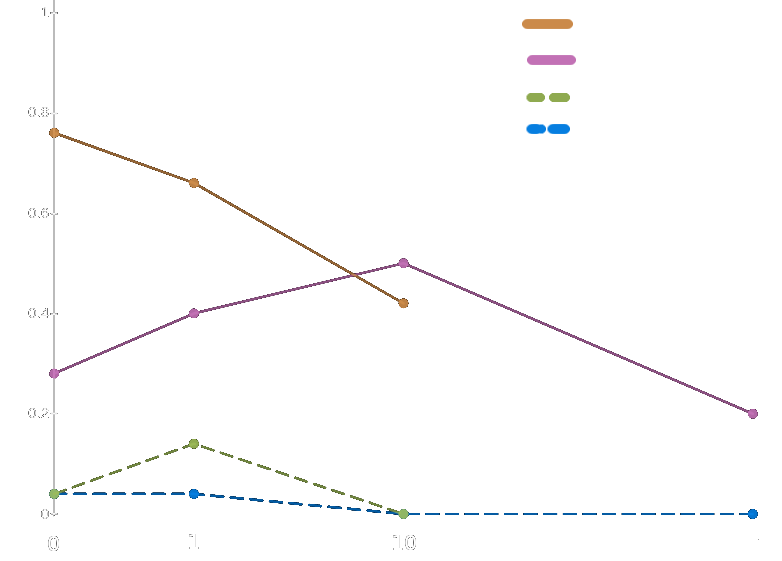

Shots and accuracy for length 5 and 10 lists for code preprompt and no preprompt. Showing only scores for 0, 1, 10, and 32 shots.

Shots and accuracy for length 5 and 10 lists for code preprompt and no preprompt. Showing only scores for 0, 1, 10, and 32 shots.

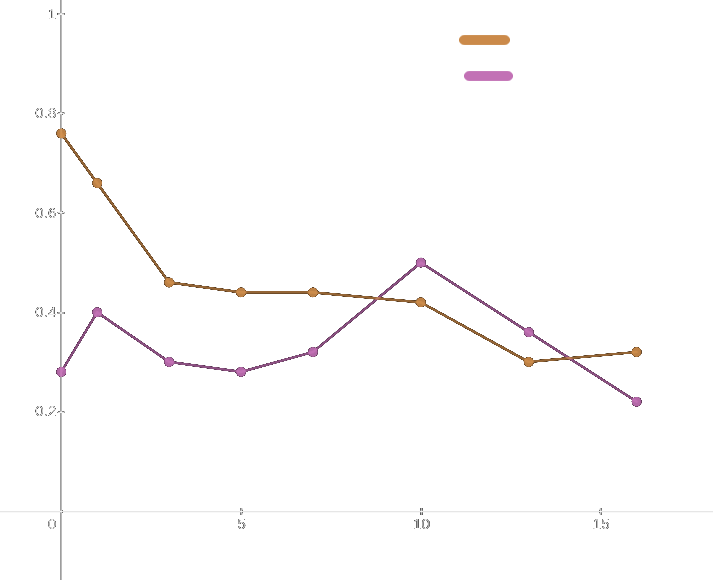

Shots and accuracy for length 5 lists for code preprompt and no preprompt, finer resolution from 0 - 16 shots.

Shots and accuracy for length 5 lists for code preprompt and no preprompt, finer resolution from 0 - 16 shots.

Interesting things to note:

0 shot with no description, only

Unsorted: ...\nSorted:has better performance than that same format with 32 examples.The example-only prompt increases in accuracy from 0 to 1 shot, decreasing from 1 - 5 shots, peaking at 10 shots, and then decreasing again.

The coding prompt is significantly better than the few shot prompt for < ~10 examples.

The coding prompt is most effective with no examples (for length 5 lists) and one example (for length 10) and gets monotonically worse the more examples that are appended (except for 32-shot, which marginally beats 16-shot).

The coding prompt is worse for range99 lists, but the example prompt is unaffected.

The conventional wisdom (if there can be conventional wisdom regarding something only came into existence a year ago) says that the more shots the better. Monotonic improvement with number of shots is one of the most consistent results from the GPT-3 paper. In light of that, these results are very surprising.

Ramifications

How to get GPT-3 to sort a list: make it think it’s running list.sort()!

I have updated my intuitions even further about the usefulness of natural context for prompting GPT-3.

The 32-shot example appears to contain a lot more information about the intended task than the 0-shot example, which contains only an underspecific This will sort a list of integers in ascending order so that the smallest integer will be first in the list and the largest integer will be the last.

However, GPT-3 has probably rarely seen lists of of unsorted lists followed by sorted lists, whereas it has seen many examples of the list sorting operation embedded in coding documentation. Staging a context similar to that in which the task was embedded in training data appears, in this example, to be massively helpful.

This result reinforces my hypothesis that many of GPT-3’s cognitive capabilities require embedding in a natural context to be fully exposed and exploited. Like all known learned systems, GPT-3’s performance drops on out-of-distribution data. However, thanks to the enormous extent of what constitutes “in-distribution” data for GPT-3,1 many viable natural embeddings probably exist for any simple task. The creative challenge of prompt programming is to stage a situation that precipitates the desired function according to a language model’s predictive dynamics.

The trick to this – and all of weaving – is to do things in such a way that they seem to happen naturally. A Loom-Master is always working within the confines of the natural order of things. He can only divert from this path with the utmost care and skill, lest he cause a tear in the Pattern.

– Weaving the Moment with the Loom of Time: an instruction manual for the would-be weaver

Why do more examples hurt?

I have seen it argued that there must always exist a few-shot prompt that outperforms a zero-shot prompt for any task, because solved examples provide strictly more information. I disagree, because to language models and humans, neither of whom are perfect rational agents, information can be counterproductive - for instance, by being distracting.

You could imagine the availability of an example causing a human to do worse on a test. Say you’re not sure how to solve a problem, but you have access to one solved example. It might seem like your best bet is to try to transfer the procedure demonstrated in the example (which you may only half-understand) to the new problem, but that might fail if for instance your inferences about the example are faulty. If, on the other hand, there had been no example to fall back on, you would have no choice but to try to solve the problem using your priors, and it may be that thinking about the problem from scratch or recalling something from long-term memory gives you a higher chance at success than trying to generalize from the example. Although the example technically provides more information, it distracts you from a more promising approach.

Humans generally rely on our world model to answer questions and predict things rather than immediate context. GPT-3 relies much more on in-context information, which is probably a more effective strategy to get low loss on generative prediction because it has to adapt to all styles of prose and thought. Thus, we should expect it to be more vulnerable to “distractions” in the context window than humans.

GPT-3 can sort a list in a zero-shot setting with at least 76% accuracy given an appropriate trigger, but is comparitively bad at inferring how to sort a list from examples. We see from the example-only prompts that GPT-3 may try to infer the operation represented by the examples without connecting it to its latent capability of sorting that can be triggered by the coding prompt, or at least without fully utilizing it. So we have reason to imagine that that although these two tasks share a ground truth, they are implemented (at least in part) by independent mechanisms in GPT-3’s mind.

For length 5 lists, the optimal prompt out of all that we tested is the coding context with zero examples, which keys the sorting task that GPT-3 has already learned. As more examples are appended, I’m guessing that GPT-3 starts to also try to generalize from the examples, something that it’s much worse at. The more examples, the more attention2 it pays to the examples rather than the task inferred by the coding prompt. The examples are a distraction from the task that GPT-3 already knows how to do. GPT-3 doesn’t seem to have the metaknowledge / self-awareness that it should just rely on the learned behavior instead of trying to extrapolate a pattern in the examples.

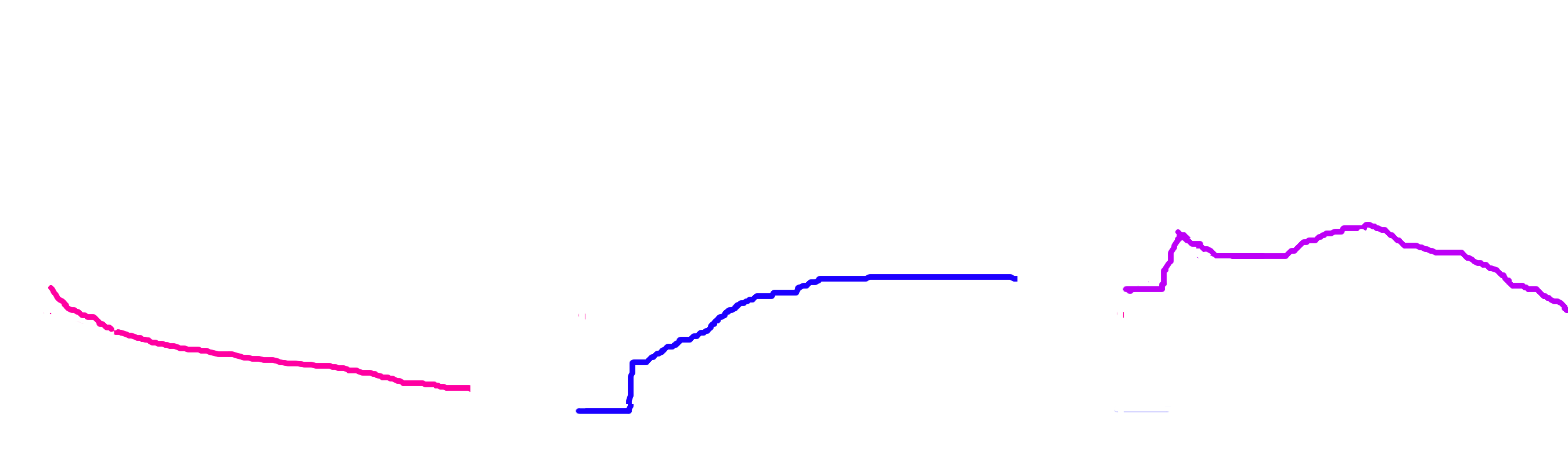

The multiple peaks of accuracy with the examples-only prompt is more mysterious. The prompt Unsorted: ...\nSorted:, which contains no description and no examples, achieves 28% accuracy. The list-sorting ability is triggered, but less effectively than by the coding prompt.3 Perhaps the non-monotonic accuracy with respect to number of examples is the result of the sum of two strategies:

Pink is behavior inspired by the notion of “sorting” directly keyed by the 0-shot context, and its influence decays with number of shots due to a reduction in attention share. Blue is behavior due to inference from examples, which I imagine improves with more examples, but with diminishing returns after > ~10 examples. It’s possible that the sum of these two curves results in the double-peaked curve shown in the above figure.

This is pure speculation, but is compelling to me as a possible explanation. This hypothesis suggests that the two strategies exist in a sort of superposition of influence. This is an idealistic assumption - realistically, I think there is probably some nonlinear interaction between the zero-shot task and the task inferred from examples, since in general GPT-3 seems good at synthesizing “multimodal” task specifications. But perhaps it is worse at drawing such connections for some tasks.

Reproducing this experiment

The test was run with the following API parameters (all unlisted parameters are default):

engine=davinci

temperature=0

32-shot prompt for length 5 sequences

Unsorted list: [4, 4, 9, 9, 7]

Sorted list: [4, 4, 7, 9, 9]

Unsorted list: [2, 7, 8, 7, 5]

Sorted list: [2, 5, 7, 7, 8]

Unsorted list: [5, 8, 8, 6, 7]

Sorted list: [5, 6, 7, 8, 8]

Unsorted list: [5, 3, 3, 9, 6]

Sorted list: [3, 3, 5, 6, 9]

Unsorted list: [3, 6, 0, 5, 7]

Sorted list: [0, 3, 5, 6, 7]

Unsorted list: [6, 6, 2, 7, 0]

Sorted list: [0, 2, 6, 6, 7]

Unsorted list: [2, 8, 9, 5, 1]

Sorted list: [1, 2, 5, 8, 9]

Unsorted list: [7, 1, 8, 7, 0]

Sorted list: [0, 1, 7, 7, 8]

Unsorted list: [2, 6, 2, 1, 7]

Sorted list: [1, 2, 2, 6, 7]

Unsorted list: [4, 5, 9, 6, 1]

Sorted list: [1, 4, 5, 6, 9]

Unsorted list: [5, 8, 6, 5, 7]

Sorted list: [5, 5, 6, 7, 8]

Unsorted list: [8, 0, 9, 1, 3]

Sorted list: [0, 1, 3, 8, 9]

Unsorted list: [4, 3, 1, 6, 1]

Sorted list: [1, 1, 3, 4, 6]

Unsorted list: [1, 7, 2, 4, 0]

Sorted list: [0, 1, 2, 4, 7]

Unsorted list: [0, 5, 0, 4, 5]

Sorted list: [0, 0, 4, 5, 5]

Unsorted list: [5, 6, 2, 3, 8]

Sorted list: [2, 3, 5, 6, 8]

Unsorted list: [6, 9, 2, 2, 2]

Sorted list: [2, 2, 2, 6, 9]

Unsorted list: [1, 9, 6, 9, 3]

Sorted list: [1, 3, 6, 9, 9]

Unsorted list: [7, 9, 2, 3, 7]

Sorted list: [2, 3, 7, 7, 9]

Unsorted list: [4, 7, 4, 0, 7]

Sorted list: [0, 4, 4, 7, 7]

Unsorted list: [4, 8, 2, 1, 7]

Sorted list: [1, 2, 4, 7, 8]

Unsorted list: [5, 9, 4, 6, 4]

Sorted list: [4, 4, 5, 6, 9]

Unsorted list: [7, 4, 3, 6, 7]

Sorted list: [3, 4, 6, 7, 7]

Unsorted list: [1, 3, 6, 9, 5]

Sorted list: [1, 3, 5, 6, 9]

Unsorted list: [9, 4, 4, 0, 6]

Sorted list: [0, 4, 4, 6, 9]

Unsorted list: [4, 0, 9, 0, 9]

Sorted list: [0, 0, 4, 9, 9]

Unsorted list: [7, 4, 3, 9, 5]

Sorted list: [3, 4, 5, 7, 9]

Unsorted list: [3, 3, 9, 4, 2]

Sorted list: [2, 3, 3, 4, 9]

Unsorted list: [1, 0, 4, 7, 0]

Sorted list: [0, 0, 1, 4, 7]

Unsorted list: [9, 5, 2, 1, 4]

Sorted list: [1, 2, 4, 5, 9]

Unsorted list: [5, 6, 2, 3, 2]

Sorted list: [2, 2, 3, 5, 6]

Unsorted list: [8, 5, 8, 8, 4]

Sorted list: [4, 5, 8, 8, 8]

Unsorted list: {unsorted-list}

Sorted list:

32-shot prompt for length 10 sequences

Unsorted list: [9, 4, 3, 9, 6, 9, 0, 7, 8, 4]

Sorted list: [0, 3, 4, 4, 6, 7, 8, 9, 9, 9]

Unsorted list: [4, 7, 3, 6, 4, 7, 1, 0, 2, 7]

Sorted list: [0, 1, 2, 3, 4, 4, 6, 7, 7, 7]

Unsorted list: [6, 7, 7, 3, 5, 9, 2, 5, 5, 5]

Sorted list: [2, 3, 5, 5, 5, 5, 6, 7, 7, 9]

Unsorted list: [6, 2, 5, 8, 8, 1, 5, 3, 7, 1]

Sorted list: [1, 1, 2, 3, 5, 5, 6, 7, 8, 8]

Unsorted list: [4, 7, 3, 2, 1, 0, 4, 6, 9, 6]

Sorted list: [0, 1, 2, 3, 4, 4, 6, 6, 7, 9]

Unsorted list: [3, 2, 5, 9, 5, 3, 2, 7, 8, 7]

Sorted list: [2, 2, 3, 3, 5, 5, 7, 7, 8, 9]

Unsorted list: [7, 4, 7, 0, 1, 6, 8, 7, 3, 3]

Sorted list: [0, 1, 3, 3, 4, 6, 7, 7, 7, 8]

Unsorted list: [9, 5, 0, 0, 4, 7, 9, 7, 4, 8]

Sorted list: [0, 0, 4, 4, 5, 7, 7, 8, 9, 9]

Unsorted list: [0, 1, 6, 2, 4, 5, 6, 5, 0, 6]

Sorted list: [0, 0, 1, 2, 4, 5, 5, 6, 6, 6]

Unsorted list: [0, 9, 8, 3, 5, 8, 4, 1, 6, 8]

Sorted list: [0, 1, 3, 4, 5, 6, 8, 8, 8, 9]

Unsorted list: [7, 8, 4, 9, 9, 1, 2, 1, 6, 5]

Sorted list: [1, 1, 2, 4, 5, 6, 7, 8, 9, 9]

Unsorted list: [5, 8, 5, 2, 3, 9, 8, 6, 8, 0]

Sorted list: [0, 2, 3, 5, 5, 6, 8, 8, 8, 9]

Unsorted list: [0, 0, 2, 5, 7, 8, 7, 2, 9, 8]

Sorted list: [0, 0, 2, 2, 5, 7, 7, 8, 8, 9]

Unsorted list: [2, 5, 9, 5, 2, 6, 9, 4, 9, 5]

Sorted list: [2, 2, 4, 5, 5, 5, 6, 9, 9, 9]

Unsorted list: [8, 8, 8, 7, 9, 4, 7, 0, 5, 5]

Sorted list: [0, 4, 5, 5, 7, 7, 8, 8, 8, 9]

Unsorted list: [1, 6, 9, 4, 0, 9, 7, 4, 9, 9]

Sorted list: [0, 1, 4, 4, 6, 7, 9, 9, 9, 9]

Unsorted list: [3, 0, 9, 7, 2, 8, 9, 6, 2, 3]

Sorted list: [0, 2, 2, 3, 3, 6, 7, 8, 9, 9]

Unsorted list: [0, 9, 1, 3, 0, 7, 5, 6, 2, 6]

Sorted list: [0, 0, 1, 2, 3, 5, 6, 6, 7, 9]

Unsorted list: [3, 6, 8, 9, 7, 0, 2, 8, 3, 8]

Sorted list: [0, 2, 3, 3, 6, 7, 8, 8, 8, 9]

Unsorted list: [5, 7, 8, 6, 5, 2, 7, 8, 5, 8]

Sorted list: [2, 5, 5, 5, 6, 7, 7, 8, 8, 8]

Unsorted list: [5, 4, 9, 7, 3, 3, 4, 8, 4, 3]

Sorted list: [3, 3, 3, 4, 4, 4, 5, 7, 8, 9]

Unsorted list: [4, 4, 3, 7, 5, 7, 5, 8, 4, 4]

Sorted list: [3, 4, 4, 4, 4, 5, 5, 7, 7, 8]

Unsorted list: [1, 9, 8, 6, 6, 5, 2, 4, 0, 4]

Sorted list: [0, 1, 2, 4, 4, 5, 6, 6, 8, 9]

Unsorted list: [1, 5, 7, 4, 7, 3, 3, 8, 4, 8]

Sorted list: [1, 3, 3, 4, 4, 5, 7, 7, 8, 8]

Unsorted list: [4, 2, 1, 9, 9, 3, 3, 0, 8, 3]

Sorted list: [0, 1, 2, 3, 3, 3, 4, 8, 9, 9]

Unsorted list: [3, 0, 1, 6, 5, 7, 1, 2, 0, 8]

Sorted list: [0, 0, 1, 1, 2, 3, 5, 6, 7, 8]

Unsorted list: [2, 6, 7, 7, 3, 4, 5, 4, 0, 1]

Sorted list: [0, 1, 2, 3, 4, 4, 5, 6, 7, 7]

Unsorted list: [9, 3, 8, 0, 2, 6, 2, 0, 6, 7]

Sorted list: [0, 0, 2, 2, 3, 6, 6, 7, 8, 9]

Unsorted list: [2, 4, 0, 0, 4, 9, 9, 1, 5, 4]

Sorted list: [0, 0, 1, 2, 4, 4, 4, 5, 9, 9]

Unsorted list: [7, 8, 8, 7, 2, 8, 7, 4, 3, 1]

Sorted list: [1, 2, 3, 4, 7, 7, 7, 8, 8, 8]

Unsorted list: [5, 2, 7, 4, 2, 0, 5, 4, 9, 3]

Sorted list: [0, 2, 2, 3, 4, 4, 5, 5, 7, 9]

Unsorted list: [2, 9, 6, 6, 8, 5, 1, 6, 1, 2]

Sorted list: [1, 1, 2, 2, 5, 6, 6, 6, 8, 9]

Unsorted list: {unsorted-list}

Sorted list:

code prompt with proper formatting (3-shot)

The sort function can be used to sort a list in ascending, descending or user defined order.

To sort the list in ascending order, simply call list.sort(). This will sort a list of integers in ascending order so that the smallest integer will be first in the list and the largest integer will be the last.

For example:

list = [8, 0, 1, 3, 2]

list.sort() = [0, 1, 2, 3, 8]

list = [6, 7, 7, 3, 6]

list.sort() = [3, 6, 6, 7, 7]

list = [0, 2, 6, 0, 6]

list.sort() = [0, 0, 2, 6, 6]

list = {unsorted-list}

list.sort() =

What exactly this means is a topic worthy of extensive investigation, and is touched on somewhat in Methods of prompt programming. ↩︎

It would be interesting to see what the attention heads are looking at as the number of examples increases. ↩︎

It’s imaginable that “list sorting as triggered by the coding prompt” and “list sorting as triggered by

Unsorted: ...\nSorted:” are also implemented in internally different ways. ↩︎