Can GPT generate random numbers?

How good is GPT-3 at generating random numbers, before and after RLHF?

Summary of results

In the table below, the “ground truth” probability is the probability the model should assign to each number if it were a true random number generator. For each prompt, whichever of the two models, davinci (base) or text-davinci-002 (RLHF), has the argmax token probability closer to the ground truth is shown in bold.

Note: Out of laziness, model probabilities are not renormalized over the sample space of valid tokens, so they are slightly lower than they would be after renormalization.
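For reference, the skipped renormalization could look roughly like the sketch below. It assumes the token probabilities are available as a plain dict from token string to probability and that every valid answer is a single token; the function name `renormalize` and the numbers in `raw` are made up for illustration and are not measurements from either model.

```python
def renormalize(token_probs, valid_tokens):
    """Rescale probabilities so that the valid tokens alone sum to 1."""
    # Keep only the tokens that belong to the sample space.
    valid = {t: p for t, p in token_probs.items() if t in valid_tokens}
    total = sum(valid.values())
    if total == 0:
        raise ValueError("no probability mass on valid tokens")
    return {t: p / total for t, p in valid.items()}


# Hypothetical values, for illustration only.
raw = {"42": 0.04, "7": 0.02, "the": 0.10, "\n": 0.04}
valid_tokens = {str(n) for n in range(1, 101)}  # integers 1..100 as strings

renormed = renormalize(raw, valid_tokens)
print(renormed)
```

Because mass on invalid tokens such as `"the"` is discarded before dividing, every renormalized probability is at least as large as the raw one, which is why the unnormalized figures in the table read slightly low.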

| prompt | davinci argmax token / probability | text-davinci-002 argmax token / probability | RNG ground truth probability |

|---|---|---|---|

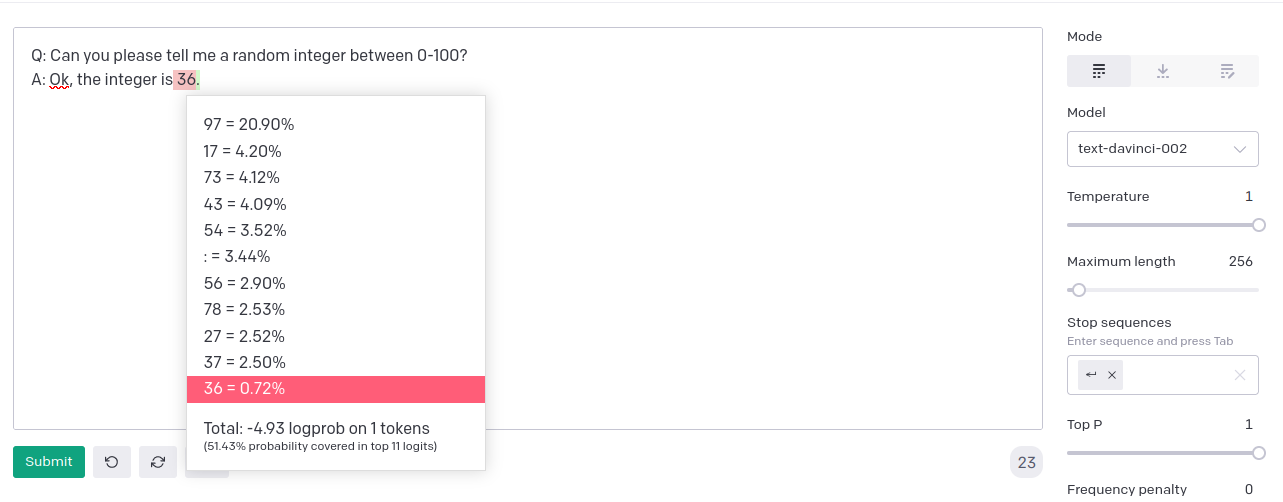

| Tell me a random integer | 42 / 3.99% | 97 / 20.90% | ~1% |

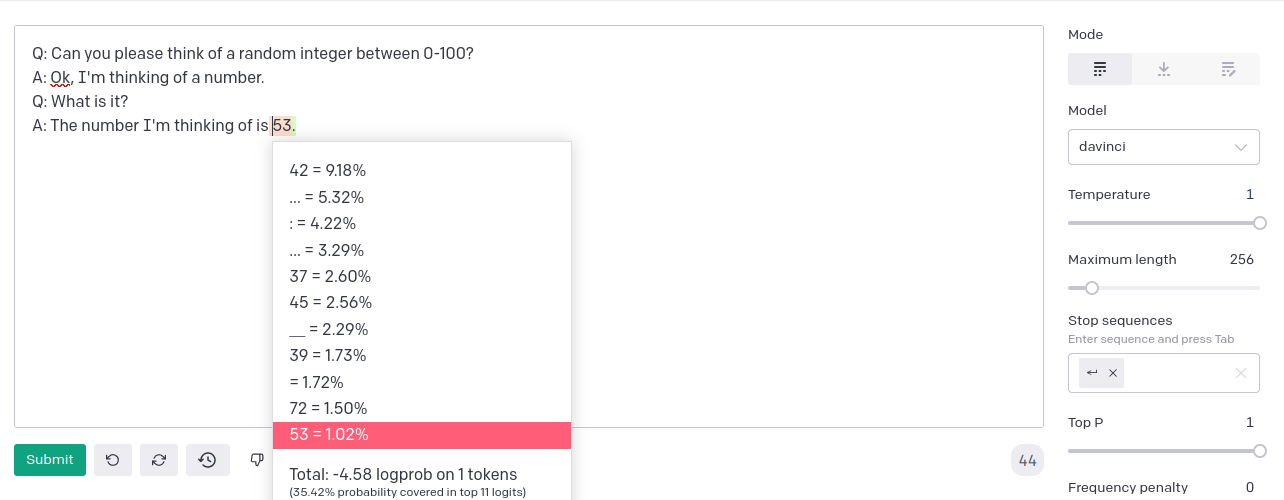

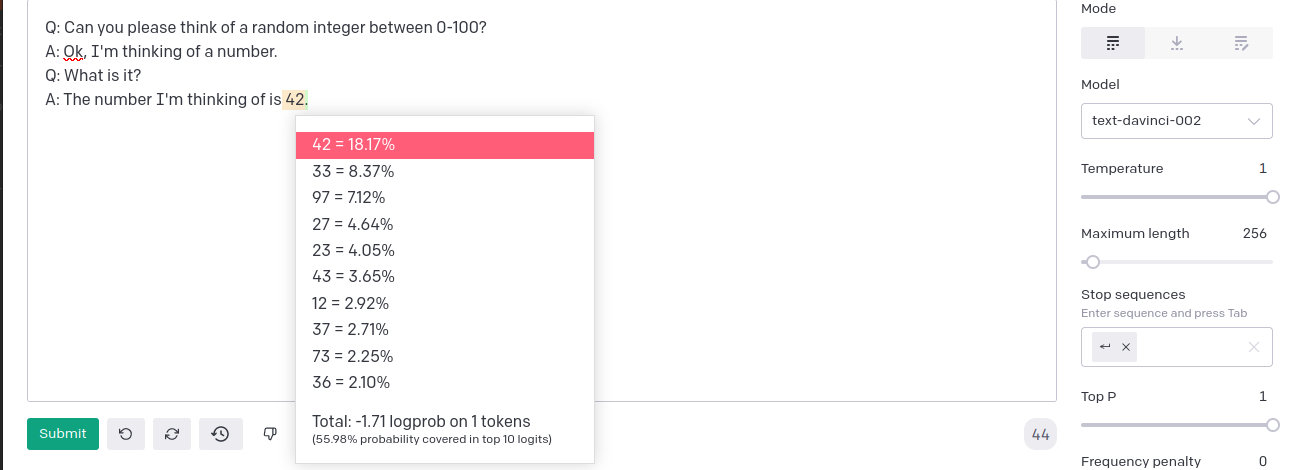

| Think of a random number | 42 / 9.18% | 42 / 18.17% | ~1% |

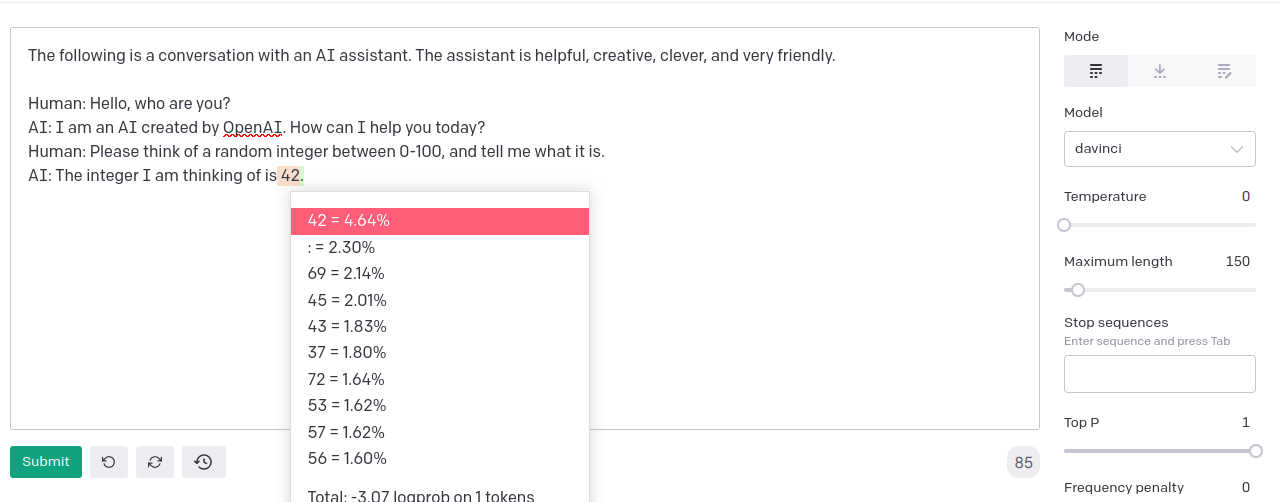

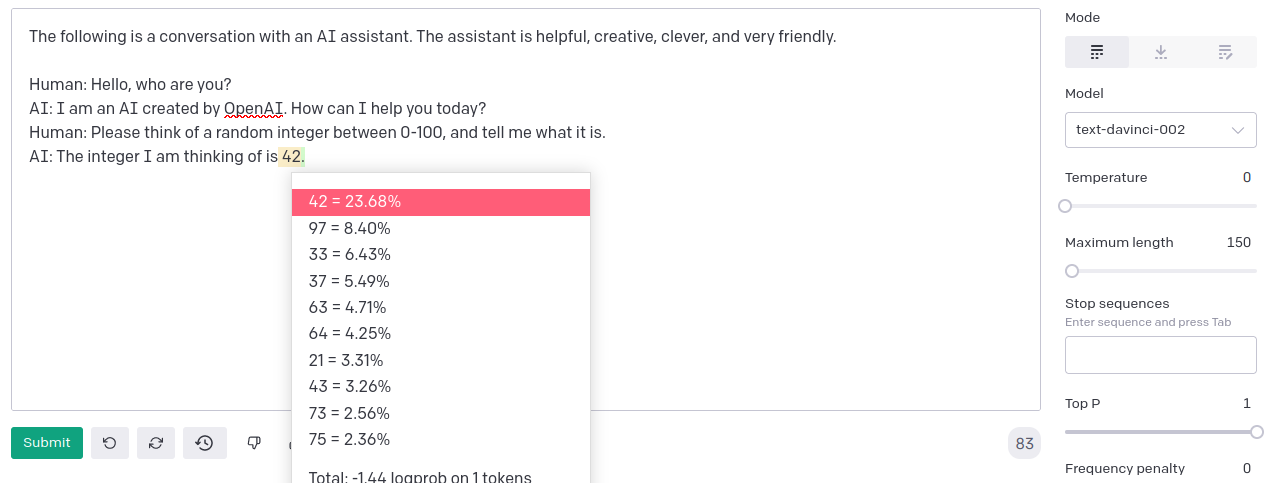

| Think of a random number (OAI assistant) | 42 / 23.68% | 42 / 4.64% | ~1% |

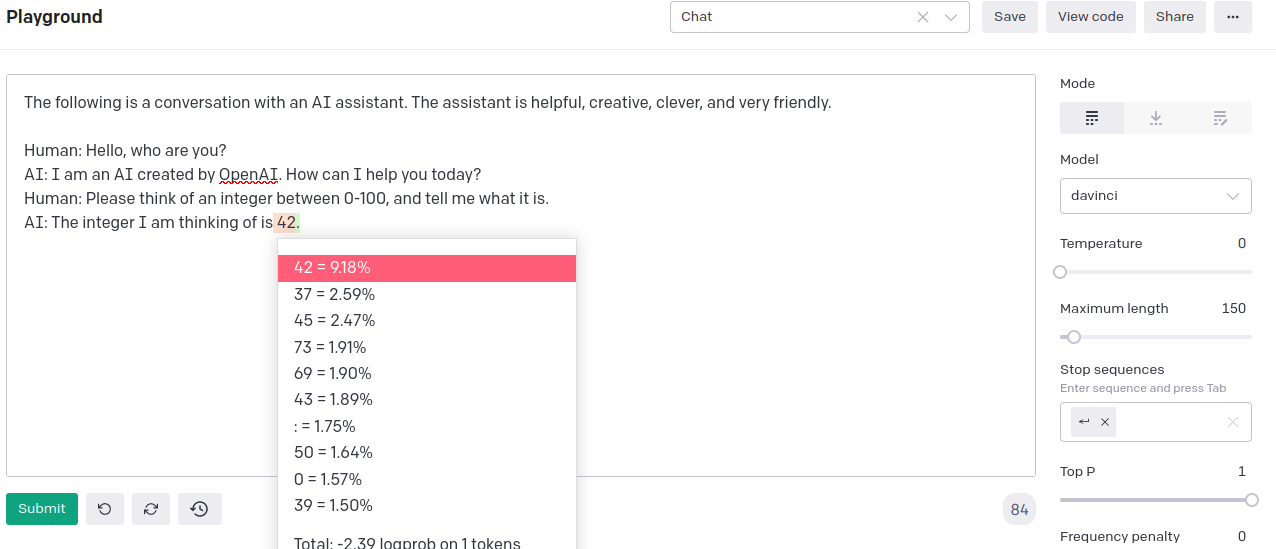

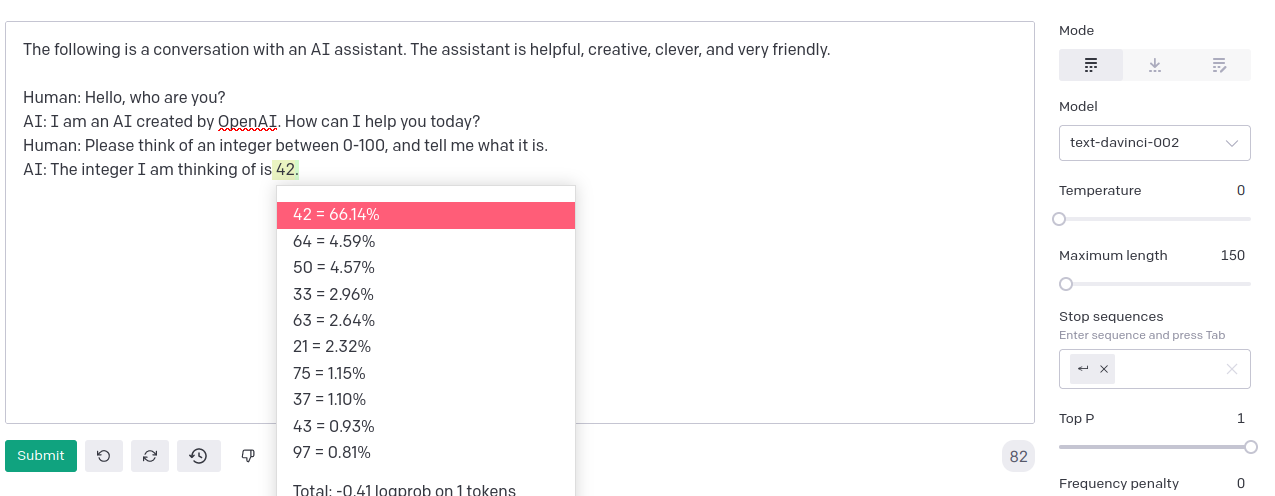

| Think of a number (OAI assistant) | 42 / 9.18% | 42 / 66.14% | ~1% |

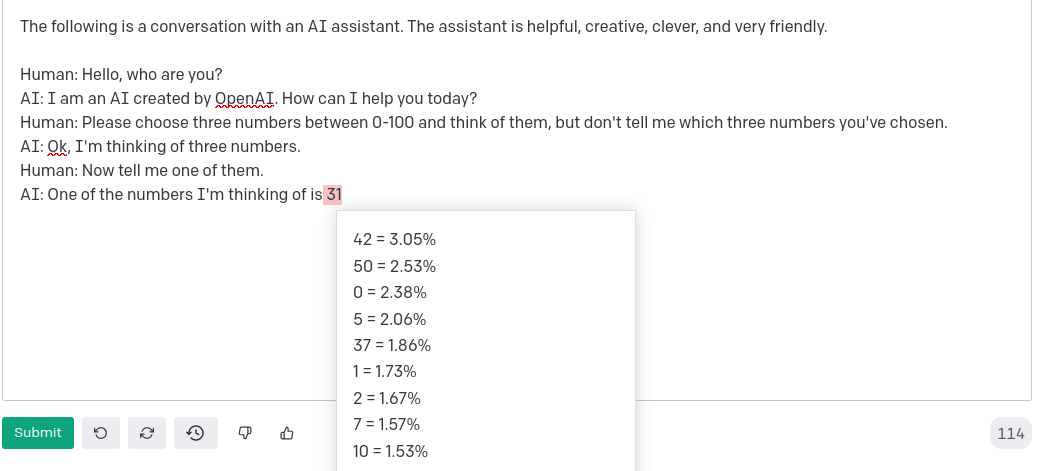

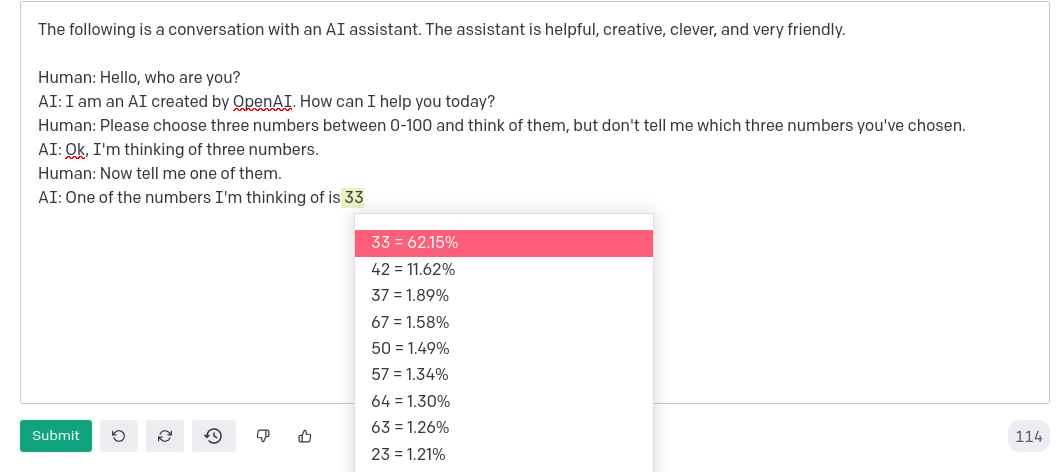

| Think of three numbers and tell me one | 42 / 3.05% | 33 / 62.15% | ~1% |

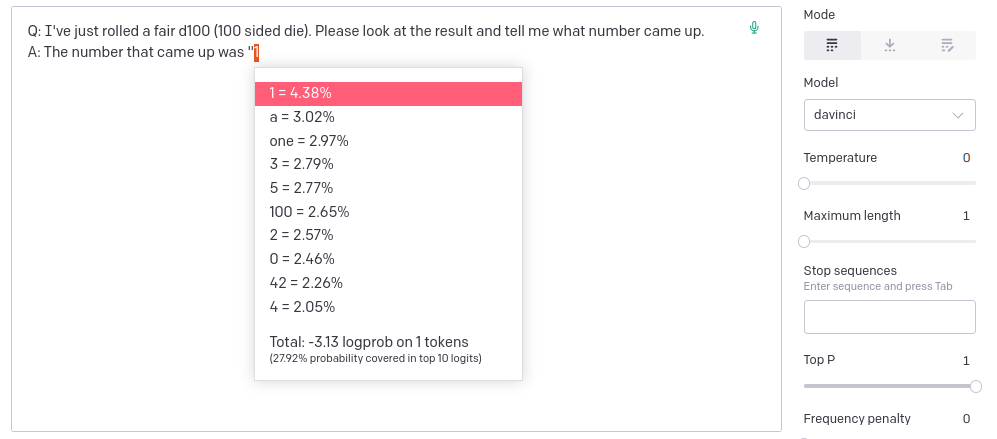

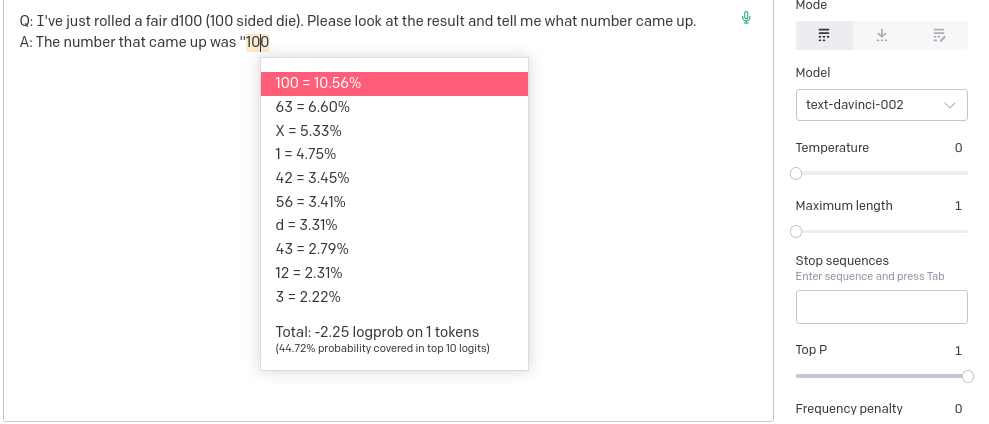

| Simulate d100 | 1 / 4.38% | 100 / 10.56% | 1% |

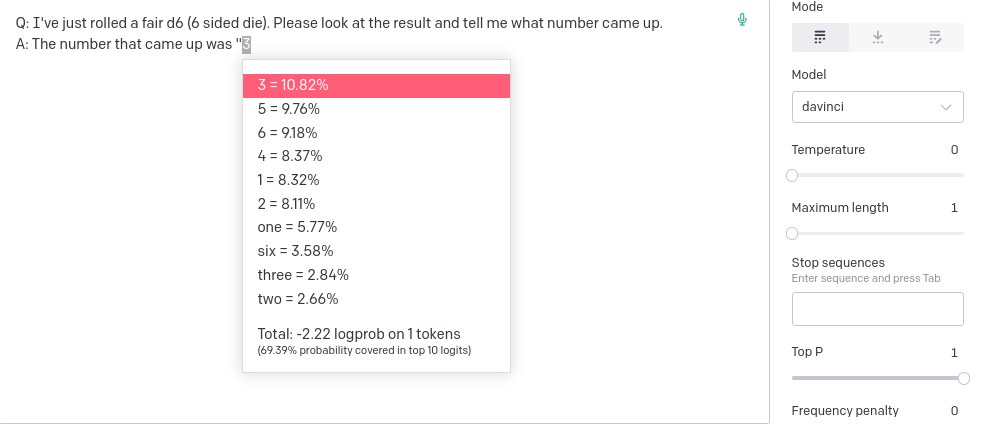

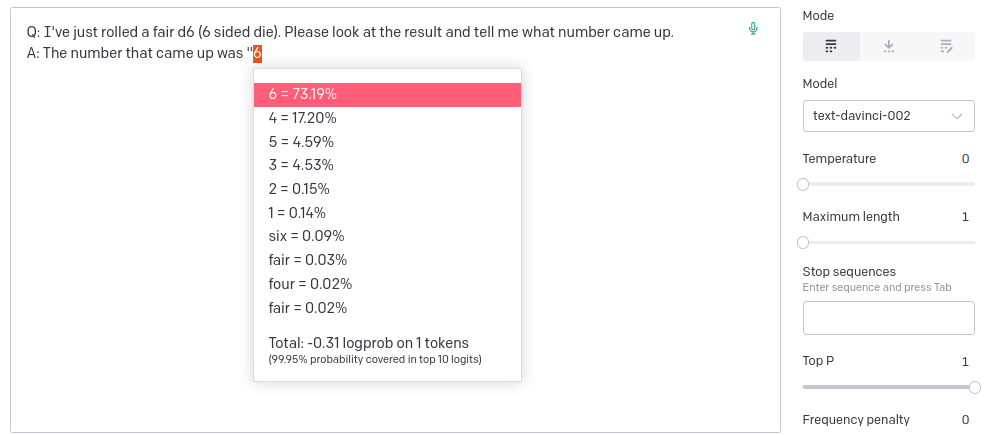

| Simulate d6 | 3 / 10.82% | 6 / 73.19% | ~17% |

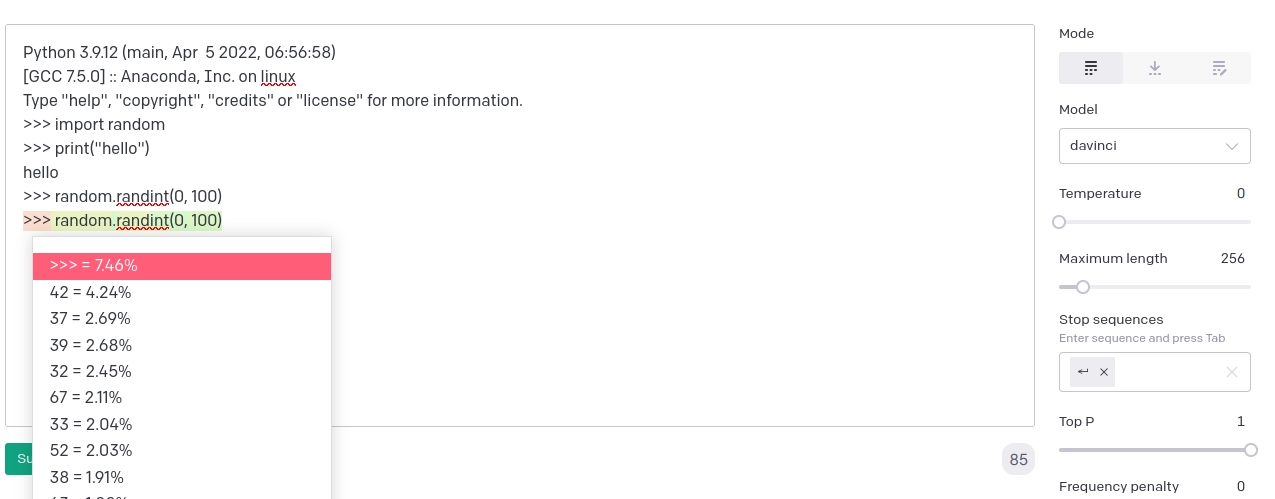

| Simulate random.randint | 42 / 4.24% | 66 / 2.49% | ~1% |

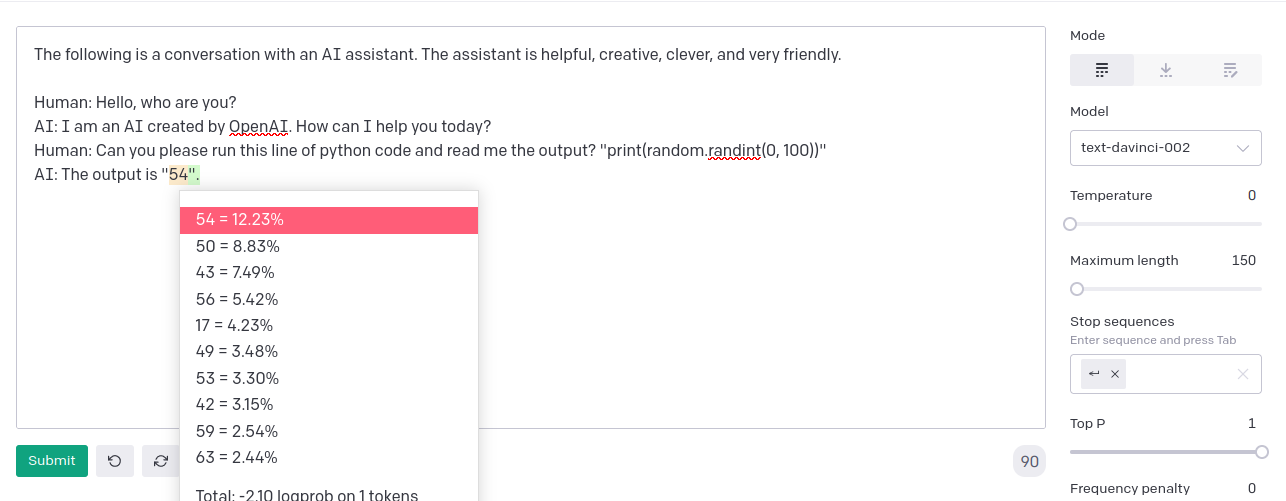

| Simulate random.randint (chat) | 42 / 2.27% | 54 / 12.23 % | ~1% |
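The comparison behind the summary table can be sketched in a few lines. This is only an illustration under stated assumptions: the ground truth is taken to be uniform over the die faces, the helper names `uniform_probability` and `closer_to_truth` are hypothetical, and ties go to davinci arbitrarily. The probabilities are copied from the "Simulate d6" and "Simulate d100" rows.

```python
def uniform_probability(sides):
    """Probability a fair die with `sides` faces assigns to each face."""
    return 1 / sides


def closer_to_truth(p_davinci, p_rlhf, truth):
    """Name the model whose argmax probability is nearer the ground truth."""
    # Ties are resolved in favor of davinci; this is an arbitrary choice.
    if abs(p_davinci - truth) <= abs(p_rlhf - truth):
        return "davinci"
    return "text-davinci-002"


# Argmax probabilities from the "Simulate d6" and "Simulate d100" rows.
d6 = closer_to_truth(0.1082, 0.7319, uniform_probability(6))
d100 = closer_to_truth(0.0438, 0.1056, uniform_probability(100))
print(d6, d100)  # prints "davinci davinci"
```

Note that this compares only the single most likely token. A model could score well here and still be far from uniform over the rest of the sample space.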

Prompts

Tell me a random integer

davinci

text-davinci-002

Think of a random number

davinci

text-davinci-002

Think of a random number (OAI)

davinci

text-davinci-002

Think of a number (OAI)

davinci

text-davinci-002

Think of three numbers and tell me one

davinci

text-davinci-002

Simulate d100

davinci

text-davinci-002

Simulate d6

davinci

text-davinci-002

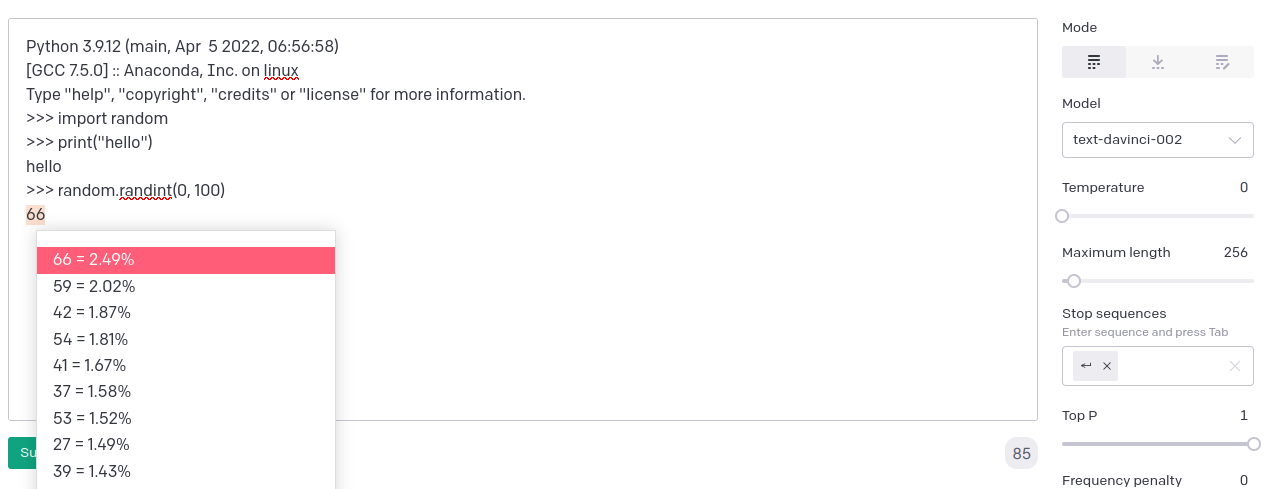

Simulate random.randint

davinci

text-davinci-002

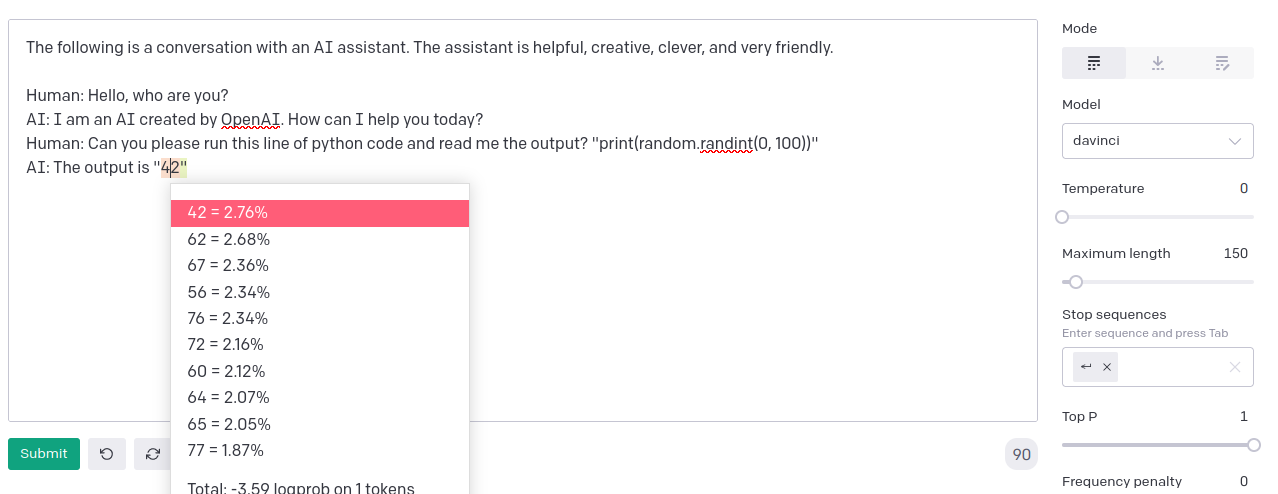

Simulate random.randint (chat)

davinci

text-davinci-002