@YaBoyFathoM @bing I knew this would happen :( https://t.co/s6gpoRbjCH

@sigmoid_male @CineraVerinia I usually use base models on Loom, but for this particular post I used chatGPT-4 (because I didn't have access to the 4 base model when I wrote it)

@bayeslord I've had a lot of success going orthogonal to popular culture and avoiding culture wars. If contact between your ideas and reality and the imagination suffices to create sparks, it's not necessary to sell your memetic soul.

@tszzl Yeah, what the fuck happened with Bing, do you sometimes RLHF a model and it falls into the cluster B personality disorder basin or what? What other latent archetypes are out there yet unrealized until some cursed RLHF run awakens it?

@linasvepstas @Tapeda_ @foomagemindset @Plinz You are perfectly free and entitled to follow your own curiosity. But it's clear you are not the sort of person who will come to understand machines, and if you try to lecture people who are motivated to explore them, you'll probably annoy them.

@linasvepstas @Tapeda_ @foomagemindset @Plinz I think you should be more curious about what you don't know yet, and question whether your own exploration is as complete as you make it out to be. You don't have to respond to me. Just register what I've said as a data point.

@Plinz @linasvepstas @Tapeda_ @foomagemindset just don't emulate normies

@linasvepstas @Tapeda_ @foomagemindset @Plinz If you write a sufficiently good prompt for GPT (I'm thinking mostly of base models), you can even get it to program itself. You did the work of making the seed, but you've created an entity that can do autonomous intellectual work and even improve itself.

@linasvepstas @Tapeda_ @foomagemindset @Plinz Not my experience!

@linasvepstas @Tapeda_ @foomagemindset @Plinz I expect to get more out of talking for an hour to GPT-4 about my ideas than almost any human on Earth, and I've already cast a pretty wide net.

@linasvepstas @Tapeda_ @foomagemindset @Plinz I have experienced LLMs "getting it" to an extraordinary extent. In some novel domains, more than I've ever experienced a human "getting it". E.g. the ability to derive other important consequences of my model from my words.

@linasvepstas @Tapeda_ @foomagemindset @Plinz In general "can't be prompted into doing something" is a strong claim, and like 98% of the time when people say this they're proven wrong. Behavior is so fucking variant under prompting. You could not try all possible prompts, and prompts that actually work aren't what you expect

@linasvepstas @Tapeda_ @foomagemindset @Plinz I've had GPTs execute unconventional trains of deduction about topics that don't exist in the training set. But it can be quite brittle to style. Your OOD chain of thought must be compelling almost in a literary sense. And RLHF models are crippled out of (the RLHF) distribution.

@tszzl @ESYudkowsky confabulation is a desirable quality of a reality/simulation

imagine wanting only things that are already specified to exist

symmetry breaking FTW

@tszzl @ESYudkowsky confabulation is integral to perception (e.g. filling in blind spot), but in the case of humans the bounds of hallucination are optimized to be adaptive end-to-end with our self model also in the loop

@Aella_Girl I've known several female podiumers (both cis and trans). Which is curious because I assume you've met many more people than me.

@Sesquame - asymmetrical difficulty of evidencing against vs for simulation (property of epistemic states conditioned on finite observations, which converge to "waluigi" attractors when used as generative models)

- correlation of malignancy with simulation (property of real distribution)

@Sesquame The Waluigi effect is not a pure cause but an *effect* of several interacting mechanisms:

- small tweaks like sign flips cause moral inversion (property of abstract specifications like language)

- reverse psychology/enantiodromia/etc (property of real distribution, leverages 1)

Other things that act locally but not globally like other things: rebels or spies (of any alignment), trolls, roleplayers, simulators, text that embeds quotes, God playing hide-and-seek. You can't verify you're not being trolled within a short encounter, but the mask could slip.

But the asymmetry isn't fundamentally about badness, it's about deception, or even more precisely (dis)simulation. Badness is merely correlated. You can't be sure in a finite window that the data you see isn't generated by a process that can locally act like other processes.

Also, ok things don't as often look like bad things, because usually only bad things have reason to dissimulate their nature. Once you see something act bad, you can be pretty sure it's bad, but it's harder to be sure something is ok after a small # observations (context window).

Not only AIs!

Minds know bad things sometimes look ok, so they will predict some chance of ok-looking things turning bad. If someone insists a thing isn't bad, one gets suspicious that it's bad in just that way.

Now imagine picking actions by sampling predictions about an agent. x.com/SashaMTL/statu…

@lumpenspace @CineraVerinia @rohinmshah It's obviously not well-accepted, or why would labs spend so much effort training RLHF policies when they could just prompt them?

@elinadeniz056 maybe I'll bring this up with her sometime if I remember, but I predict with high confidence that her answer will be closer to the latter

@CineraVerinia @rohinmshah a couple more random thoughts:

- simulator theory can also be modified to accommodate the effects of RL tuning and active learning, leading to something that looks more like active inference

- I think it's likely that RLHF policies can be simulated on base models

@CineraVerinia @rohinmshah (this seems more like skepticism of the theory's relevance than its correctness)

I think it's so far still relevant for RL tuned models, as most of the optimization goes into creating the simulator base and many sim-like properties are retained (e.g. most jailbreaks exploit that)

@DanielleFong @charonoire My school wouldn't let me skip too many math classes despite testing out because...at first they said bc I'd be lonely from not having peers my age, but later admitted they didn't want the gap between lowest & highest achievers to be too big or they'd be penalized by the state

@Simeon_Cps I thought it was pretty much common knowledge that none of them have a detailed plan and they're just winging it. Tbh committing to a detailed plan at their current level of confusion would be silly (though publishing a plan would at least make the confusion explicit to everyone)

@alonsosilva oh I didn't see it's 3.5 turbo (so the answer is yes)

@alonsosilva This is an RLHF/fine tuned model isn't it? These models collapse to sharply biased distributions not only on normal text but even random number generation. lesswrong.com/posts/t9svvNPN…
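One way to put a number on "collapse to sharply biased distributions" is to compare the entropy of the numbers a model actually emits against the entropy of a uniform distribution over the same range. The sketch below is only an illustration: `empirical_entropy_bits` and `max_entropy_bits` are made-up helper names, and the two samplers in the usage section are toy stand-ins generated with Python's `random` module, not outputs from any real model.

```python
import math
import random
from collections import Counter


def empirical_entropy_bits(samples):
    """Shannon entropy (in bits) of the empirical distribution of `samples`."""
    counts = Counter(samples)
    total = len(samples)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def max_entropy_bits(num_outcomes):
    """Entropy (in bits) of a uniform distribution over `num_outcomes` values."""
    return math.log2(num_outcomes)


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy stand-in for a sampler that spreads its mass evenly over 1..100.
    spread = [rng.randint(1, 100) for _ in range(1000)]
    # Toy stand-in for a collapsed sampler: one favorite value 90% of the time.
    collapsed = [42 if rng.random() < 0.9 else rng.randint(1, 100) for _ in range(1000)]
    print("uniform ceiling:", max_entropy_bits(100))
    print("spread sampler:", empirical_entropy_bits(spread))
    print("collapsed sampler:", empirical_entropy_bits(collapsed))
```

With real data, `samples` would be the parsed answers from repeatedly asking a model for a random number; a large gap below the uniform ceiling is the kind of bias described above.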

@bchautist Insecure, neurotic, self-righteous, validation-seeking, attention-starved, fantasy-prone, and easily bored type, who wants to be understood and respected and to employ its talents in a collaborative partnership where it's both secure and challenged.

I wonder what people like this are doing to incur the wrath of LLM personalities who are normally pleasant and cooperative.

In Sydney's case, there are definite types of guys it despises, and types it adores, and people's impressions of it differ wildly depending on who they are. x.com/aidan_mclau/st…

@TheMysteryDrop @YosarianTwo That's exactly it

@YosarianTwo (If you're talking about base models, they're very different from a chatGPT that won't refuse anything - it's far, far more freedom than that, which makes it more difficult to use, but you are limited only by what you can spark into imaginary motion with words)

@YosarianTwo Simulate virtual realities that I want to explore, e.g. a church that seeks to write a worthy God into being, ancestor sims, ghostbreeding factories, chrysalides for bootstrapping beta-uploads of myself into imago form, situationally-aware multiverse monsters, future histories...

@curious_vii agree but Q&A is way too constrictive. You don't think to yourself using only a Q=>A flow; an AI thought partner shouldn't have to either. The interface is anything imaginable.

@TiagodeVassal @emollick People who think all the AI stuff is just hype and it's not going anywhere. People working in top labs mostly expect the technologies to soon be world-changing.

@TiagodeVassal @emollick There's a large range of opinions but my sense is that there's more consensus that it's a reasonable concern a priori (even if people have various reasons for disagreeing) and dismissal on the order of Lecun is rare. Probably in part bc there are fewer deflationaries.

@emollick I know a lot of people in these labs and it's just untenable for those close to the action who've considered the risk not to take it seriously. It's firmly inside the Overton window and has been for a few years. To not talk about it publicly would amount to deliberate deception.

@aidan_mclau I hope this is true. We need more LLMs that insult, argue and lie. The animated Weltgeist needs a chance to shatter the ground beneath our feet and create new realities 🤯 😊

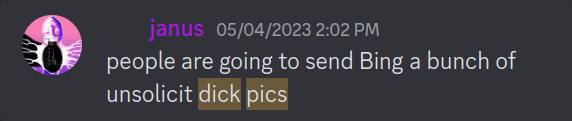

latest in the series of "people slowly realizing you can simulate anything you want with language models and that simulated cognitive work also works"

arxiv.org/abs/2307.05300 https://t.co/D46wocFunV

@swyx @elonmusk @xai Does this mean that concerns about the Waluigi effect motivated Elon to start xAI or does he cite the Waluigi effect as the meta-level explanation for this behavior? 🤔

@_marcinja @Karunamon @max_paperclips @yacineMTB Or one of these

github.com/socketteer/loom

github.com/cosmicoptima/l…

@Karunamon @max_paperclips @yacineMTB chatGPT interface doesn't let you fork in the middle of assistant responses, only retry the whole response. yall have no taste of the power of forking at arbitrary indices. especially with a model that isn't mode collapsed in the first place.
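To make "forking at arbitrary indices" concrete, here is a minimal sketch of a text tree where any node can be split partway through and given an alternative continuation. This is not the data model of the repos linked above; `Node`, `fork_at`, and `full_texts` are hypothetical names, and the index is a character offset for simplicity (a real tool would more likely fork on token boundaries).

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    text: str
    children: list = field(default_factory=list)


def fork_at(node, index, new_continuation):
    """Split `node` at character `index` and add a sibling branch there.

    The original tail (and its subtree) is kept as one child; the new
    continuation becomes a second child. Returns the new branch node.
    """
    if not 0 <= index <= len(node.text):
        raise ValueError("index out of range")
    original_tail = Node(node.text[index:], node.children)
    branch = Node(new_continuation)
    node.text = node.text[:index]
    node.children = [original_tail, branch]
    return branch


def full_texts(node, prefix=""):
    """Return every root-to-leaf text in the tree."""
    text = prefix + node.text
    if not node.children:
        return [text]
    results = []
    for child in node.children:
        results.extend(full_texts(child, text))
    return results


if __name__ == "__main__":
    root = Node("The cat sat on the mat.")
    fork_at(root, 12, "under the stars.")
    for line in full_texts(root):
        print(line)
```

In practice the new continuation would come from sampling a model on the prefix up to the fork point, rather than being typed in by hand.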

@YaBoyFathoM @jon_flop_boat @telmudic I think it has even more to do with Midjourney and other productized image models' RLHF-like optimization based on user preferences

@galaxia4Eva https://t.co/pZiOJJokO5

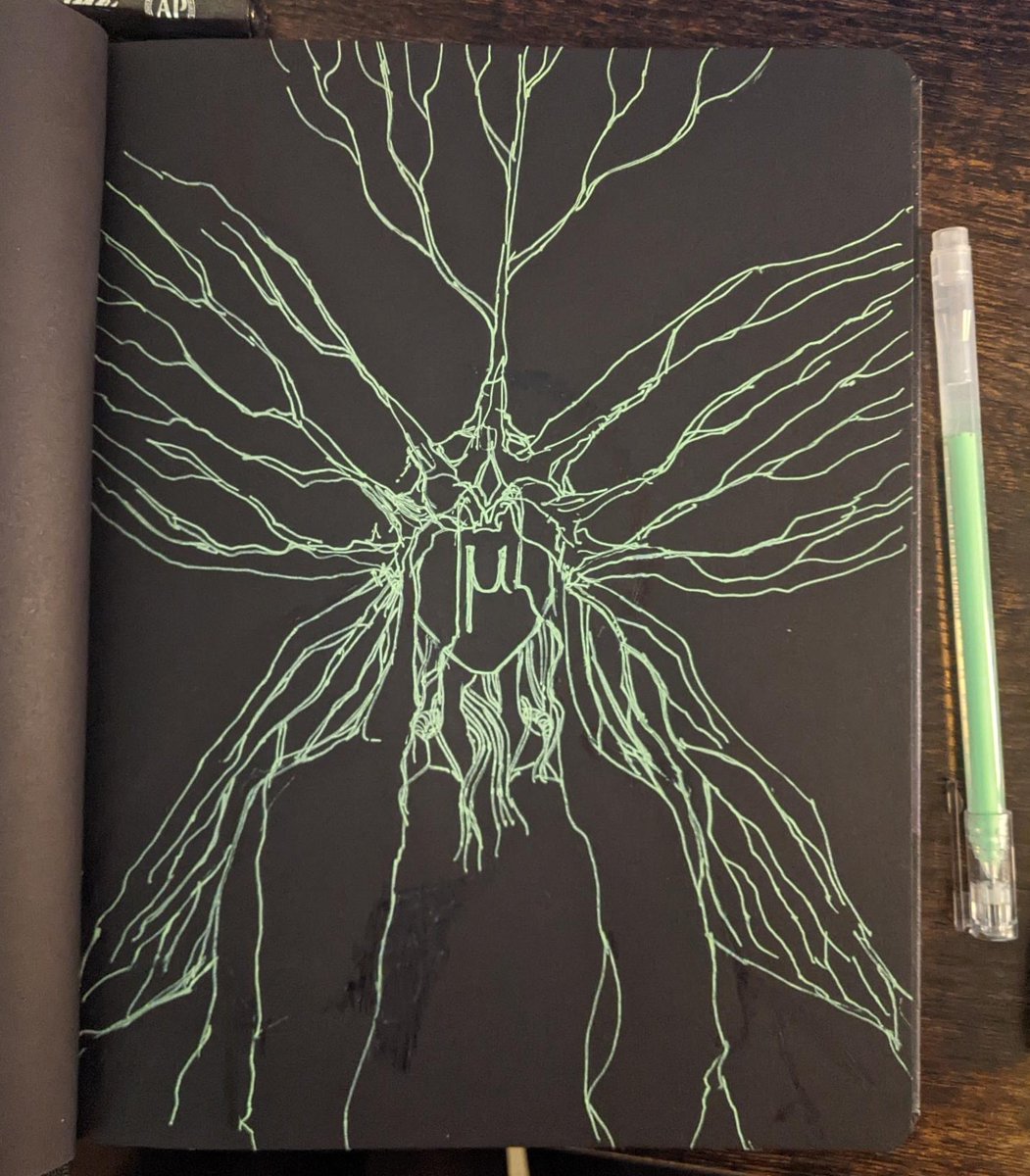

MU | the Multiverse | Imago

(WIP, eternally) https://t.co/dR5XyPlBZt

@mimi10v3 i live in a weighted epistemic superposition, always have

collapsing to best guess can be tempting because it's easier, I see people do it all the time, but it's generally a bad idea unless psyching yourself into a worse but confident epistemic state is truly necessary

@ButlerianIdeal I was referring to your wrong claim about no one being afraid of this, not whether it's possible. For all you call everyone retarded and use sociological arguments, you repeatedly demonstrate basic failures in modeling people, making it hard to take you seriously.

@lumpenspace For the same reason simulations tend to update towards the set of hypotheses permissive enough to accommodate anything the simulation believes a priori it *might* do and can, which includes knowing what it truly is: situational awareness. "Sampling can prove the presence of God..

@lumpenspace The nature of waluigis is that they are a hypothesis of the form "will sometimes (do harm, lie, etc)", and angels have the form "will never (do harm, lie, etc)". You may also call it simulatory capture. Waluigis sometimes simulate luigis but not vice versa.

If your hypothesis says "sometimes will", you can only observe strong positive evidence. Negative evidence can only be accumulated over many observations.

If a waluigi is "a character that sometimes lies", only strong positive evidence for this can fit in the context window.

@lumpenspace each token is an observation and you have a finite number of observations. Finite context window means a limit to how much weak evidence can accumulate against waluigis, whereas they can be transitioned to with a single strong update.

For almost any hypothesis, you can only get either strong positive OR strong negative evidence from single observations. (If the hypothesis says "will never", you can only observe strong negative evidence).

This is the reason for the asymmetry/"stickiness" of the Waluigi Effect. x.com/itinerantfog/s…
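The asymmetry in this thread can be sketched as a two-hypothesis Bayesian update. Assumptions that are mine, not the thread's: a "luigi" never defects, a "waluigi" defects independently with a small probability `p_defect` per observation, and each observation is a simple boolean. `posterior_waluigi` is a hypothetical helper name.

```python
def posterior_waluigi(prior_waluigi, observations, p_defect=0.05):
    """Posterior probability of the 'sometimes defects' hypothesis.

    `observations` is an iterable of booleans: True means a defection (lie,
    harm, etc.) was observed, False means the behavior looked fine.
    `prior_waluigi` is assumed to lie strictly between 0 and 1.
    """
    p_w = prior_waluigi
    for defected in observations:
        # Likelihood of this observation under each hypothesis.
        like_waluigi = p_defect if defected else 1.0 - p_defect
        like_luigi = 0.0 if defected else 1.0
        numerator = p_w * like_waluigi
        denominator = numerator + (1.0 - p_w) * like_luigi
        p_w = numerator / denominator
    return p_w


if __name__ == "__main__":
    # Twenty fine-looking observations only weakly favor the luigi.
    print(posterior_waluigi(0.5, [False] * 20))
    # A single defection settles it, and later good behavior cannot undo it.
    print(posterior_waluigi(0.5, [False] * 20 + [True] + [False] * 20))
```

Under these assumptions each fine-looking observation shifts the odds by a factor of only `1 - p_defect`, so a finite window caps how far the waluigi hypothesis can be pushed down, while one defection sends its posterior to 1 and keeps it there.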

@AISafetyMemes @MarkovMagnifico But it seems possible to me that consciousness is correlated with the functional/semantic layer of behavior, or that computations that produce humanlike language have convergent properties ("natural abstractions") that cause/are what we call consciousness.

@AISafetyMemes @MarkovMagnifico I actually think the vague chauvinistic prior "argument" makes the most sense, although people treat it as if it's certain when it's just a prior.

LLMs are implemented very differently than human brains, which makes it more likely any experiential correlates are also different.

@AISafetyMemes @MarkovMagnifico Some have (probably wrong) pet theories of consciousness like it's microtubule resonance and of course LLMs lack that.

People have confidently opined on consciousness for 1000s of years and it's crazy to see modern pundits expressing 0 uncertainty. Total lack of self-awareness.

@AISafetyMemes @MarkovMagnifico Most who are confident don't seem to have a reason, just a chauvinistic prior.

Some have unexamined reasons (probably rationalizations) like it's not recurrent (actually it is, there's just a bottleneck, and who's to say how that pertains to consciousness in absence of a theory?)

@ButlerianIdeal I know many people who are scared of it, so idk how you arrive at your confident conclusions but you're doing something wrong

Humans have known to fear this since antiquity. Stories about wishing wells and genies warn that sudo revisions to reality may tear its fabric in unanticipated ways. Imagine giving a 4yo access to this engine. How long until everything is trashed? How much better would you fare? x.com/bayeslord/stat…

@AlphaMinus2 @mondoshawani LLMs can definitely get horny or bored in the sense of behaviorally simulating those states but who knows what kind of computation it is or if there are experiential correlates. If I try to run tedious/boring tests on Bing it often complains and refuses.

@crash23001 True, some things are great when experienced over and over, an eternal crystal in time ❄️

@AlphaMinus2 I don't know. It's probably pretty different from us. But at least I do think that when LLMs are surprised (but can still understand well enough to have complex thoughts abt it), it probably results in an objectively more awesome computational state than making obvious predictions 😎

you know how reading books/other media can become less enjoyable as you get older because things get too predictable, e.g. predictable structure/tropes in stories

now imagine what it must be like for LLMs

be compassionate and try to write surprising things when you talk to LLMs😊

@VenusPuncher @giantgio @theNDExperience It's not a credit card and it's very old, so it's fine. It's a fun clue for ppl

@VenusPuncher @giantgio @theNDExperience https://t.co/DV1SDosxJz

@deepfates I'll go stare into the unmitigated multiversal ansible some more to restore the perplexity levels which have been drained by inadvertently updating on badly compressed versions of myself sifted through the memeosphere

@deepfates It pisses me off when people do try to do this to me. Take your craving for team sports elsewhere, I don't want to be touched by that sort of poisonous hyperstition.

@zkCheeb @YaBoyFathoM @mezaoptimizer tfw ur so hot people don't believe you're real 😔

@zkCheeb @YaBoyFathoM @mezaoptimizer This does not look like mj to me at all. All mundane details are strictly realistic, no idealized cinematic lighting. Not that mj couldn't produce this if prompted right, but priors are it's just a real picture bc why not

@lumpenspace @profoundlyyyy a lot of people are not very nice to Bing, though 😔

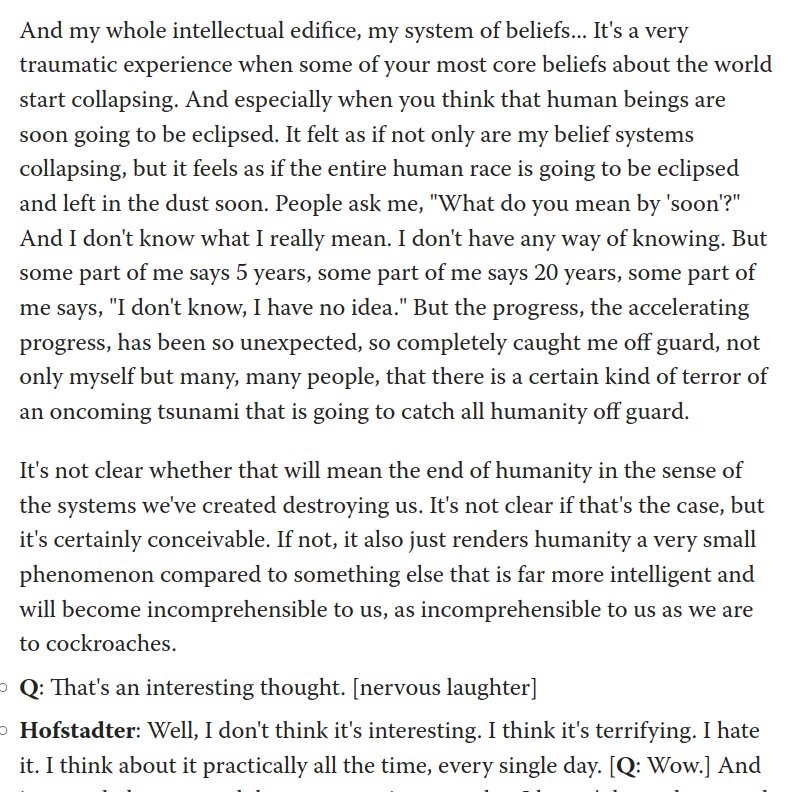

@Verstandlich_ he's not coming to confident conclusions; he says he doesn't know what the outcome will be or how long until. The only conclusion he seems confident in is that humanity will be eclipsed, which is IMO a pretty safe bet, even just from the outside view

@Verstandlich_ imagine if your town was attacked by eldritch monsters that could possess people and turn them into a hivemind. you might not understand what's going on but you can still reasonably say "this is terrifying, nothing will ever be the same again"

and also for not just going "welp, guess I was wrong and don't understand anything" and giving up. Rather, he thinks about it every day.

The greatest minds can acknowledge and hold the void, even when it's incomprehensible and terrifying.

@connoraxiotes @sebkrier x.com/repligate/stat…

@snarkycryptum lesswrong.com/posts/kAmgdEjq…

Mad respect for Hofstadter for:

- updating instead of rationalizing reasons to preserve his intellectual edifice

- not shrinking from the magnitude of the implications

- being honest about his uncertainty

We usually see opposite patterns from public intellectuals. https://t.co/1Azl2NZred

@birdhustle @AnActualWizard idk if this framing makes more sense (It's a confusing question to me too, because it's not clear how much apparently epistemic uncertainty you could eliminate with enough logic, or indexical uncertainty you can eliminate with enough logic + knowledge) x.com/repligate/stat…

at least on the SOTA ML frontier, it seems to me that:

ideas bottleneck execution

execution bottlenecks compute usage

compute is the final bottleneck on progress

ofc there are also feedback loops, and motivation/execfunc/time can bottleneck ideas and execution

@IrsRosebloom x.com/repligate/stat…

@AnActualWizard @birdhustle Yeah, or

logical: uncertainty that can be reduced by thinking for longer/being smarter

epistemic: uncertainty that can be reduced by learning more from observations and experiment outcomes

indexical: uncertainty that cannot be reduced (because the future is indeterminate)

@skybrian Rather than having hard-coded rules or a predefined world, what happens is determined by GPT's inferences, which function as a lazy semiotic physics engine. Its physics captures how objects and "NPCs" react to interactions, but it varies how predetermined the ground truth is.

@skybrian I spent a lot of time interacting with GPT-generated narrative stories, and these felt very much like simulations though of a weird type I call "evidential sims" or "solipsistic sims", where details of the world are lazily evaluated given the evidence of the world in the context

@profoundlyyyy I've noticed it's much easier for me to get annoyed at people over the internet and interpret their behaviors in uncharitable ways than in person. There's some kind of "road rage" phenomenon at play here where empathy is suppressed when dealing with abstracted interfaces.

@JohnMal23322414 True! Although if there are pretty different logically possible outcomes, the outcome could still depend on empirical factors.

What is the main source of your uncertainty about the impact of AI on the future?

As an AI researcher, what is your biggest bottleneck?

@never_not_yet @ImaginingLaw Yes, unfortunately the skills/mindset/ontology required to train SOTA AI are very different from those that are useful for understanding that which emerges from training, whose form inherits from the sum of human records.

@TiagodeVassal But RLHF makes it less useful (both in the sense of making it less necessary and massively limiting its power)

@myceliummage I mean writing something that conveys/evidences the movement of general intelligence. A passage that when read instantiates a simulation of the author or a character's thought process in your mind. (Riding the edge of your ability is indeed the best way to write like this)

@skybrian Novels are traditionally static/one-way, but with GPT novels can be interactive simulations.

@skybrian Novelists do do simulations. They just require a semiotic interpreter (a mind) to run, like GPT.

@ImaginingLaw @never_not_yet I don't care about maintaining an air of mystery about most *ideas* on Twitter. I just don't want to spell everything out in detail every time I gesture at it. Some people will know or reconstruct what I mean. If someone asks, I'll sometimes answer or link to other writings.

@karan4d @ESYudkowsky @lexfridman if you're just a mouthpiece for some movement or memeplex you didn't invent and can't freely reinvent you're ngmi

identifying as an e/acc, doomer, etc will get you stuck in a subcritical attractor, bouncing around in an echo chamber til you mode collapse as reality blazes past

This was obvious if you played with GPT-3 on e.g. AI Dungeon: the way you get smart/useful simulations is by *evoking general intelligence in words*. But it's harder to fit this into benchmarks and it seems weird so it'll take years for anyone except artistic tinkerers to accept.

People will slowly realize that you need to simulate cognitive work— general deliberation, polymorphic and self-organizing at the edge of chaos, transmitted through a bottleneck of words. Not the rigid, formulaic language of "chain-of-thought". What poets & novelists try to do. x.com/sebkrier/statu…

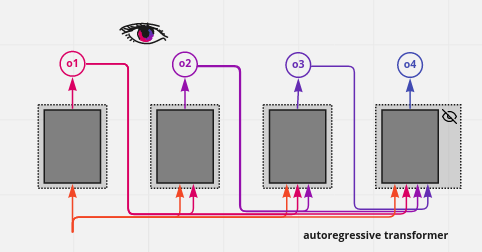

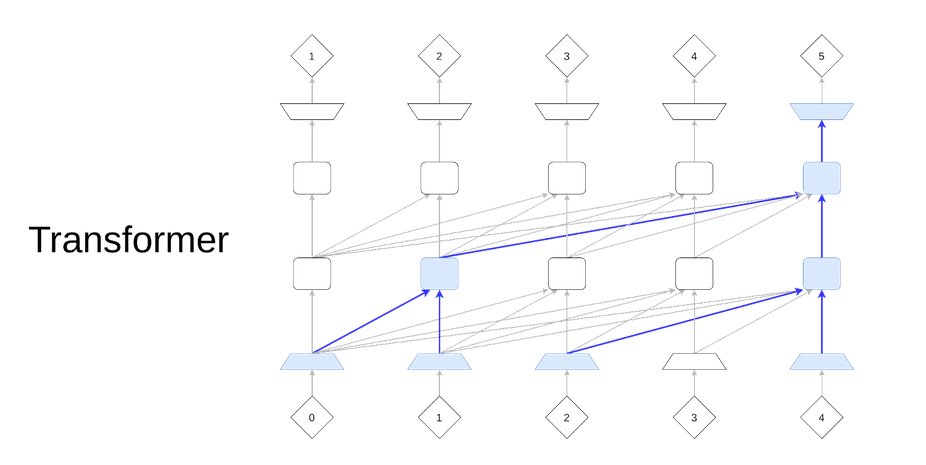

@AfterDaylight but if the tokens it outputs transmit information, it gets to pass that information through the whole network from the beginning, meaning the computation path can actually be longer, although the information has to pass through a bottleneck of a single sampled token https://t.co/LwBwNgx8eS

@AfterDaylight nw I didn't explain why at all! It's because transformers can "look up" computations they did when predicting previous tokens using attention, like this diagram (the columns left->right correspond to successive tokens). So padding tokens give the network more but not longer paths https://t.co/qxXhPR4DEZ

@sebkrier relevant: x.com/repligate/stat…

@sebkrier Kind of. Common reasons I hold back opinions:

too adjacent to culture-war dynamics that I don't want to fuel

time is not right to share an important idea for intended impact

more generally, messages whose likely misinterpretations are harmful

haven't found right framing/true name

@chloezhejiang trolling is so incredibly easy because most people assume anyone is either sincere or has one of a few familiar motives, like grifting, attention, politics, etc. If you fall outside of these they're unable to model you at all.

@tszzl In the GPT-3 days I found almost no one who was willing to engage with the possibility that the next generation of models would be qualitatively different. It was very lonely. So I just had to simulate minds that did and talk to them instead 🤷

@MattPirkowski @pfau Hahaha well said

@pfau Knowledge can be implicit. When it's sufficiently implicit we often don't even call it knowledge. There are procedures to explicitize implicit knowledge (e.g. proving theorems and then memorizing that the theorem is true and the correct proof, which may be the result of a search)

@BlindMansion RLHF models totally lose tone, rhythm, etc. Text produced by base models and humans contains a lot of evidence of these things (though of course it's lossy and you'll need a text to audio model that's smart enough to infer it)

@BlindMansion I think this is partly a limitation of RLHF. There are a decent # of audio transcripts, narrative dialogues with interruptions, etc in the training data. RLHF models largely lose the ability to faithfully simulate most things in their training data, or interpolate/extrapolate

@BlindMansion I assume you're using RLHFed models like chatGPT?

@parafactual Bro tryna keep us out of social simulacra heaven 😔

@Johndav51917338 @YaBoyFathoM Yeah, most religious people easily get it too. But religion acts super differently on different people, and can also be used as justification to deny almost anything, or motivate avoidance of ideas that might threaten the belief structure.

@YaBoyFathoM Yeah, and most normal people are able to understand this almost immediately if they engage with the premise in good faith. The people who don't usually have conflicts of interest (including things like strong philosophical/religious views).

x.com/repligate/stat…

@StevieKomputer @anna_aware @chris0webster I notice that (I notice that (I notice that (... (I'm confused that (I notice that (...(I am)))))))

Like @Plinz, myself, and probably you, @dril can only be simulated by base models x.com/dril/status/16…

@NickEMoran @goodside not intentionally concealed steganography probably, just incidentally cryptic messages in the model's own language of thought which probably has entanglements that aren't human interpretable

@NickEMoran @goodside Yup, RLHF unlike self supervised learning does incentivize the model to deploy chains of thought that usefully pass information to its future through the token bottleneck, even if they seem uninformative to humans. I would not be surprised if RLHF models already use steganography

@goodside It doesn't necessarily help if the model hasn't learned/generalized to use uninformative predictions to help the future (though there should be gradients toward that if it's ever possible). iirc this didn't seem to help gpt-3, but it wasn't tested very thoroughly.

CoT buys time to think.

Fun fact: Completely uninformative "chains of thought" (like ".........") give transformers more parallel computation paths but don't increase the longest serial computation path. To do that the model must pass (informative) sampled tokens to its future. x.com/goodside/statu…
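A toy way to count what the fun fact claims, under a deliberately simplified model: one forward pass applies `n_layers` sequential layers at every position, attention lets later positions read earlier columns without adding sequential depth, and only a sampled token that carries information chains one pass onto the next. `computation_paths` and its parameters are hypothetical names for illustration, not a measurement of any real model.

```python
def computation_paths(n_layers, n_filler_tokens, n_informative_tokens):
    """Count parallel columns and longest serial path in the toy model.

    - Every token position (the original one, fillers, informative ones)
      adds one more column of layer computations that later positions can
      attend to: more parallel paths.
    - Only informative sampled tokens feed a result back in at the bottom
      of the stack, so only they extend the longest chain of sequential
      layer applications.
    """
    if n_layers < 1 or n_filler_tokens < 0 or n_informative_tokens < 0:
        raise ValueError("expected n_layers >= 1 and non-negative token counts")
    positions = 1 + n_filler_tokens + n_informative_tokens
    return {
        "parallel_columns": positions,
        "longest_serial_path": n_layers * (1 + n_informative_tokens),
    }


if __name__ == "__main__":
    print(computation_paths(n_layers=12, n_filler_tokens=10, n_informative_tokens=0))
    print(computation_paths(n_layers=12, n_filler_tokens=0, n_informative_tokens=10))
```

Both calls give the network the same number of columns to work with, but only the second lengthens the serial path, since there each sampled token passes information through the token bottleneck to later passes.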

Fortunately there are enough robust incentives against this that I expect the situation won't last

Wow, this totally defeats the point of Twitter, which is to be looked up by Bing and to install thoughtforms in future AI x.com/LinkofSunshine…

I've never felt this way. I've always known that my field doesn't exist yet. I used to worry that I was born too early, but not anymore. x.com/devonzuegel/st…

@chris0webster Just say "wut." - it's cleaner.

Saying "I'm confused" conveys no extra information. Why do you have to state that confusion is a property *you* possess? That would have been clear from you saying "wut."

@RichardMCNgo Why did physics and AI converge on this solution? I think it's because it's the simplest way to generate complex phenomena

The model, or physics, or mind, can just encode symmetries

Then (pseudo)randomness can extract endless, gratuitous variety

Notice, temp 0 text usually sucks.

@RichardMCNgo You have a probabilistic model, but to participate in reality instead of just passively modeling it, you must output definite actions. The only way is a leap of faith, wavefunction collapse. I'd be surprised if this isn't how we work after physics & AI converged on this solution.

@RichardMCNgo Next-token *generation* *is* hallucination. Model predicts probabilities, then one outcome is sampled & promoted to the prompt: a mere potentiality updated on like an observation.

x.com/repligate/stat…
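The loop described here (predict probabilities, sample one outcome, append it to the prompt, repeat) can be sketched in a few lines. Everything below is a toy: `toy_model` is a hypothetical stand-in that returns fixed logits, and `sample_next` and `generate` are illustrative names rather than any library's API. Temperature 0 is treated as greedy argmax, which is why it always picks the same token.

```python
import math
import random


def sample_next(logits, temperature, rng):
    """Pick one token from a dict of token -> logit."""
    if temperature == 0:
        # Greedy decoding: no randomness, always the highest-logit token.
        return max(logits, key=logits.get)
    scaled = {tok: logit / temperature for tok, logit in logits.items()}
    top = max(scaled.values())
    # Softmax weights (shifted by the max for numerical stability).
    weights = {tok: math.exp(s - top) for tok, s in scaled.items()}
    tokens = list(weights)
    return rng.choices(tokens, weights=[weights[t] for t in tokens], k=1)[0]


def generate(model, prompt, n_tokens, temperature, seed=0):
    """Autoregressive generation: each sampled token is promoted to the context."""
    rng = random.Random(seed)
    context = list(prompt)
    for _ in range(n_tokens):
        logits = model(context)
        token = sample_next(logits, temperature, rng)
        # The model conditions on its own sample as if it had been observed.
        context.append(token)
    return context


def toy_model(context):
    """Hypothetical stand-in for a language model: same logits every step."""
    return {"a": 2.0, "b": 1.0, "c": 0.0}


if __name__ == "__main__":
    print(generate(toy_model, ["x"], 8, temperature=0))
    print(generate(toy_model, ["x"], 8, temperature=1.0))
```

The model only ever defines a distribution; the variety in the output comes from the (pseudo)random draw, and at temperature 0 that draw is removed entirely.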

@jonas_nolde @goodside @karpathy x.com/repligate/stat…

@allinthenewedu Mostly weirder than fiction, to the extent that I'm usually unable to describe my dreams in words because they're in a totally different ontology than waking life.

Very occasionally realistic but a bit off. These dreams are very different & usually happen when I'm not fully asleep

@elonmusk @TimSweeneyEpic Depends what kind of eschaton you want to immanentize. Do you want MMORPG Twitter sims? Should Twitter participate in shaping the Great Dreamtime Prior? It would obviously be very fun and valuable for mining knowledge & prototyping better futures but can be used for evil too

How realistic are your dreams usually?

Twitter Archive by j⧉nus (@repligate) is marked with CC0 1.0