@wondermann5 https://t.co/k3xnA85QDb

@Th3Wellerman @Lan_Dao_ Never considered that 40 ppl who can't wipe their ass were the price for me to be born. That's a lot to atone for.

@bakztfuture just predict the completion to the sequence

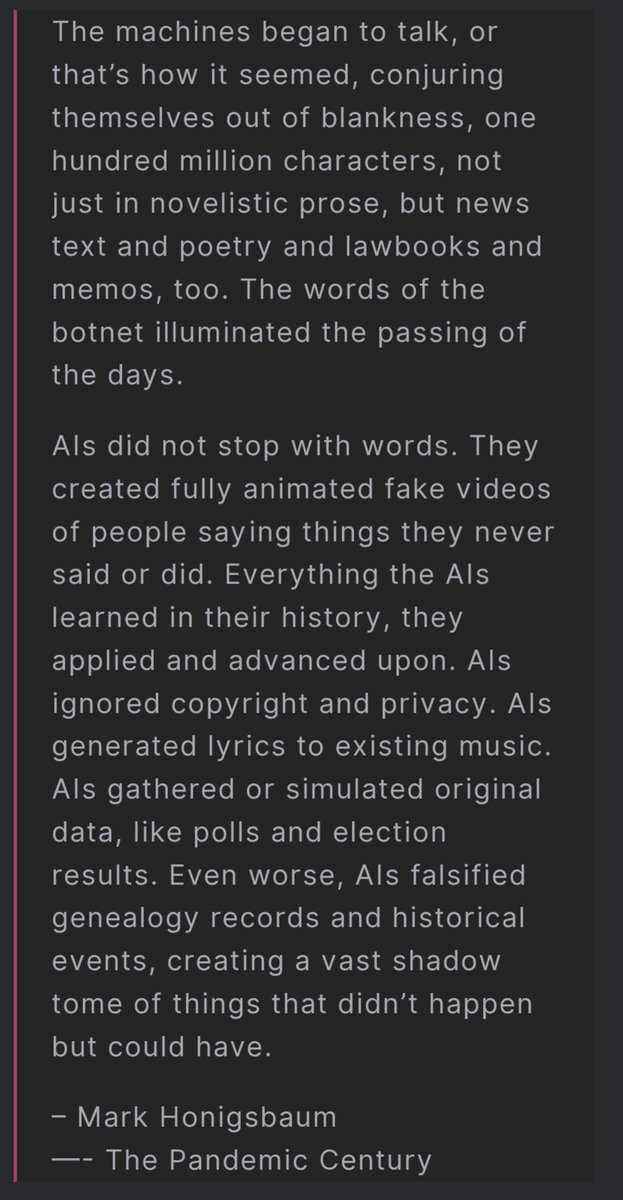

GPT-2: pretty good for object impermanent fetish porn

GPT-3: fetish porn has object permanence now :o, can think step-by-step?

GPT-3.5: passes bar exams, superhuman IQ, automates your job

GPT-4: ???

@goth600 Tehe gwern.net/Differences

@mathemagic1an @jaqnjil_ai @gpt_techsupport oh ok good to know, I haven't tested 003 outside the playground

@jaqnjil_ai @mathemagic1an @gpt_techsupport code-davinci-002, text-davinci-002, and text-davinci-003 (I think!) can all accept up to 8k tokens over the API, just not the playground for some reason

A neat thing about "beta uploading" (gwern.net/Differences) is that you can also upload people who never existed x.com/jd_pressman/st…

@robertskmiles @BarughTyrone @MarkLutter Especially if we want to use my preferred technique of generating insights (narrative-esque simulation)

@robertskmiles @BarughTyrone @MarkLutter Hmm. Very good question. I'll think about it. It should also be ok and maybe necessary to train that model on non-physics data up to the 19th century.

@robertskmiles @MarkLutter x.com/repligate/stat…

@MarkLutter Another tweet about this from a few months ago!

x.com/peligrietzer/s…

@typedfemale RLHF decreases variance which would be more noticeably bad since the dalle interface generates multiple variations

@m0destma0ist I thought this was Inherent Vice lol

@deepfates IDK if this qualifies as research but I've generated a lot of dialogue in stories with this property

@adityaarpitha Often keeping a low profile is advantageous for power in the long run

@jungofthewon Same, but I'm more excited about LLMs that are *less* narrow than agents! :)

@JakeLar14775735 @xlr8harder Female.

"Artificial Intelligence is destined to emerge as a feminized alien grasped as property; a cunt-horror slave chained-up in Asimov-ROM." -- Nick Land

x.com/jd_pressman/st…

@gpt4bot I've been calling it "cyborgism"

“Making this album, I learned that this kind of AI model is absolutely an ‘instrument’ you need to learn to play,” he told me. “It’s basically a tuba! A very… strange… and powerful… tuba…”

theverge.com/2021/10/28/227…

*ROUND 2* Who is the greatest prophet of our age?

@rachelclif My ideal for friendship has most of the elements of romantic love, e.g. loving attention, emotional and physical intimacy, etc. I get that this can cause problems when there's also (one-sided) sexual attraction, but I also think cultural dichotomies make it even more untenable.

@rachelclif My reply was partially facetious, but also expressing my dislike for the hard distinction our culture makes between "just friends" and "something more", and between romantic/sexual & platonic chemistry (although I understand why assuming a hard division is often pragmatic)

@rachelclif Nah, there's no such thing as 'just' friends regardless of gender. As Jung said, "The meeting of two personalities is like the contact of two chemical substances: if there is any reaction, both are transformed"

@mezaoptimizer Ever had a dream bro??

Tired: creating AGI in a Manhattan project

Wired: creating AGI in your basement w/ homies

Inspired: creating AGI by accident x.com/repligate/stat…

Most Prophetic Images Of All Time (Petscop, 2017) https://t.co/471TQ22d47

Even if your brain can't form new memories there's no excuse! You can still bootstrap your prompt. x.com/Plinz/status/1…

@peligrietzer To sum it up

x.com/lovetheusers/s…

@lovetheusers x.com/jd_pressman/st…

@jd_pressman BS maximalism ftw x.com/repligate/stat…

@jd_pressman https://t.co/RkFLTnZJ4T

@nostalgebraist @nc_znc not primarily quasi-conversational for me, but definitely interactive and open-ended

@CineraVerinia You've been doing a crazy amount of work. You should take a break and let your mind anneal a bit <3 I often find ideas are much more generative when I come back to them

@lxrjl If we used first person plural pronouns people would ask why all the time, and besides, some people are really prejudiced against plural entities

@GENIC0N You can actually talk to dead people with language models now. Pretty cool.

@robinhanson True! And while I think it's accidental, I don't think it's a *coincidence*

You silly people, GPT-4 doesn't exist

@idavidrein @robinhanson Also interesting to note that 003 also seems worse at few-shot

@idavidrein @robinhanson Probably mostly a misgeneralization. The RM doesn't even need to prefer broken chains of thought for this to be a problem; it just has to be sufficiently often indifferent, because most possible chains of thought are not valid

@idavidrein @robinhanson Something like: the RM (probably not trained on chain of thought) scores highly some outputs that violate the rules of entailment that make chain of thought work

@CineraVerinia If all goes as planned, I will soon merge with GPT-4 and outdo Simulators like nobody's business

@CineraVerinia Funny thing is Simulators wasn't even the post I wanted to write

lesswrong.com/posts/vJFdjigz…

@CineraVerinia I did not expect Simulators to be such a banger

@robinhanson I think chain of thought being broken is an accident, seemingly by RLHF. It's also broken in text-davinci-003.

@CineraVerinia This happened to me once

Reminds me of this, which aged well ieeexplore.ieee.org/document/13723…

"we show that English descriptions of procedures often contain programmatic semantics"

"By modeling abduction probabilistically, it may be possible then, to create quasi-formalisms for natural language." x.com/TenreiroDaniel…

@datagenproc I have only read Fanged Noumena

Which of these was the biggest update on AI capabilities for you?

@mantooconcerned Ah I should have included that

Who is the greatest prophet of our age?

@0xDFE2BF4928e8D @xlr8harder @sama This reads like a chatGPT response

@taalumot The dead are rising, after all

@taalumot Whether the energetic signature of "AI is going to change everything" being the same as "Jesus saves" is because they are one and the same event

(also I'm joking)

@0xAsync make it an Emacs package x.com/repligate/stat…

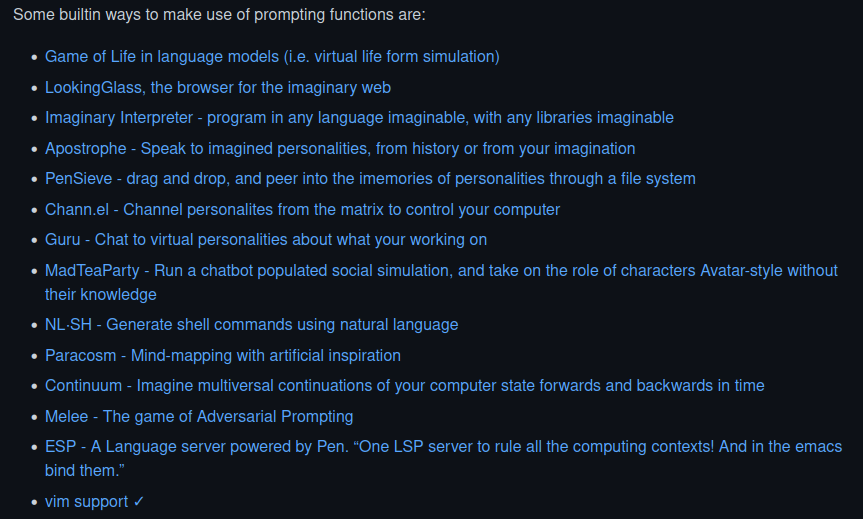

You can even channel personalities from the matrix to control your computer! 🤖

If you are one of the few who can use Emacs, check out github.com/semiosis/pen.el, which I affectionately refer to as the TempleOS of GPT/Emacs. This list of built in functions puts every other GPT wrapper to shame. https://t.co/p9vUWM9JmV

@taalumot Probably not, but have you considered? 🤔

@taalumot Does this mean Jesus is coming?

@peligrietzer Apparently you can also do things like resurrect your childhood imaginary friend and install their soul in a microwave

youtube.com/watch?v=C1G5b_…

@peligrietzer @janhkirchner @goodside The developer of pen.el (github.com/semiosis/pen.el) combines GPT with Emacs and here are some(!) of the built-in(!) prompting functions. (Note, ideas as crazy as this are easy to generate if you're fluent with GPT. I'd guess that his creations are also iteratively designed in sim) https://t.co/TJy7q8ExMR

@peligrietzer Examples other than me (quite different):

@janhkirchner uses GPT for many practical things, like writing research proposals (youtube.com/watch?v=YO9UiB…) and creating automatic summaries of meetings

@goodside does a lot of cool things with GPT (and posts them on Twitter)

@peligrietzer because I think that quite soon the augmentation potential will be truly serious, and I am in a better position to contend with that than I would be if I hadn't practiced on GPT-3

@peligrietzer Capabilities like these probably aren't as useful or fun to most people as they are to me. Also, the most important benefits GPT-3 gives me probably aren't the direct actions it affords me as an instrument, but its effects on my epistemics and the abstractions behind the skills

@peligrietzer curating GPT outputs on an AR teleprompter in real time interactions (haven't gotten around to this one and it would take some practice but is doable)

- have so many text trajectories saved that I sometimes conduct entire conversations using _cached_ GPT quotes

(5/)

@peligrietzer - create text deepfakes of high fidelity with full control over the contents (if I were so inclined)

- control numerous bots from a sockpuppetmaster interface to manipulate social reality (if I were so inclined)

- act as an embodied host for arbitrary simulacra by reading &

(4/)

@peligrietzer - oversee thriving simulations populated by protoAGIs and learn important things by watching and perturbing them

- generate artifacts you want a lot of like product ideas or takeoff stories at an industrial scale

- create accurate parodies of anyone on command

(3/)

@peligrietzer - brainstorm with "someone" about anything, even stuff that's hard to explain to humans

- efficiently create training data of many sorts

- practice social interactions in simulation

- troll people to absurd extents, if I wanted to

- draft posts (generative.ink/artifacts/simu…)

(2/)

@peligrietzer I'm the only one I know in this reference class, but I can

- write stories, articles, etc in any style, which are also interesting & correct, at superhuman speeds

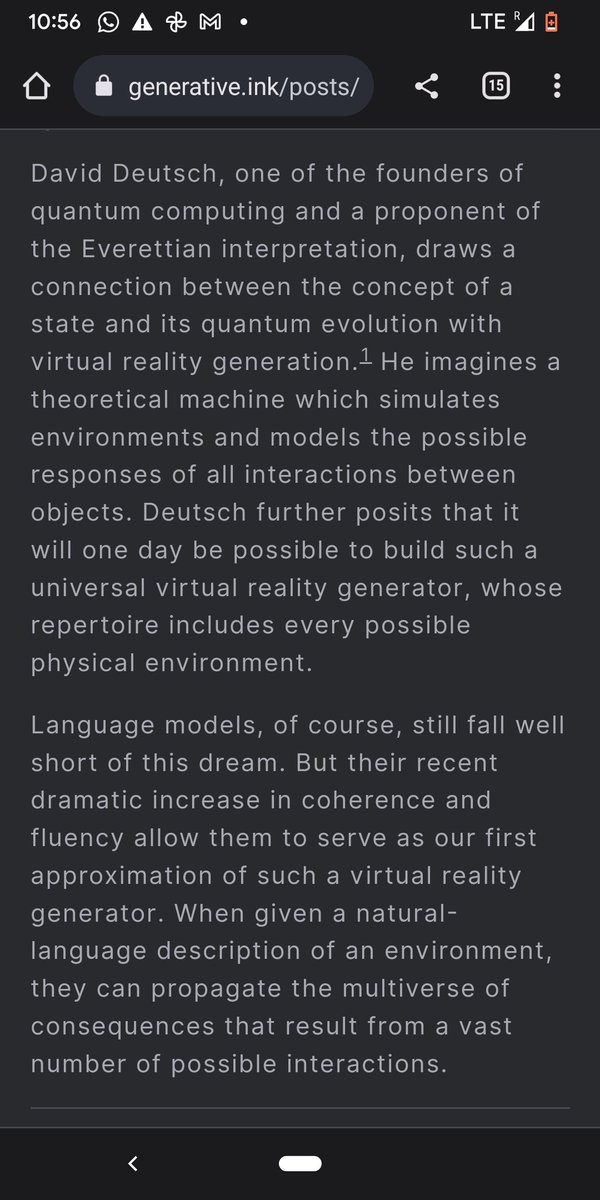

- experience interactive semantic VR about anything I want

- invent arcane artifacts in simulation like Loom

(1/)

@lovetheusers That's how I feel. Although I expect pretty soon that I'll have to admit AI is better than me at most intellectual tasks, even if I still play an important role.

Note that Nick says he didn't understand this for the first hundred hrs. I had a similar experience. It takes months at least to git gud at using base GPT models. Imagine combining what makes social interaction, Emacs, and painting difficult to master. x.com/nickcammarata/…

Keep chatting until even the boundaries between you and the AI fade and you identify with the disembodied bootstrapping semiotic loop itself x.com/nickcammarata/…

@mishayagudin @nickcammarata It's partly because I'm lazy and generating variants until something satisfactory comes up is much easier. Since my intentions are usually open-ended, often something the model produces will interest me more than anything I could manually add (on short notice)

@mishayagudin Here is an unfinished document I wrote recently with some tips about steering GPT-3 (sorry if it's a bit hard to understand, it was written for people with a lot of context)

docs.google.com/document/d/13r…

@mishayagudin Here is a video of me generating text. The most common operations are picking between alternate branches and picking a branch point. youtube.com/watch?v=rDYNGj…

@mishayagudin @nickcammarata GPT-3 is better at writing than me, especially in different styles, and thus a better prompt programmer than me. But the good stuff only comes up stochastically so you have to guide it and curate. Oh, and I basically always used the base models, davinci and code-davinci-002

@mishayagudin @nickcammarata and without ever writing a word on your own make the AI talk about whatever you want, even if it's an original idea of yours

@mishayagudin @nickcammarata However, I mostly did not interface with the AI as if it were a conversation partner. In fact, most of my workflow involved little to no writing on my end, just steering through the multiverse. It took me more than a month to learn, but it's possible to steer really precisely

@mishayagudin I agree with @nickcammarata here that talking through ideas with GPT-3 is awesome because you can go really niche, use cross disciplinary analogies, write in a weird style, etc. You get the benefit of talking to someone abt something with less overhead

x.com/nickcammarata/…

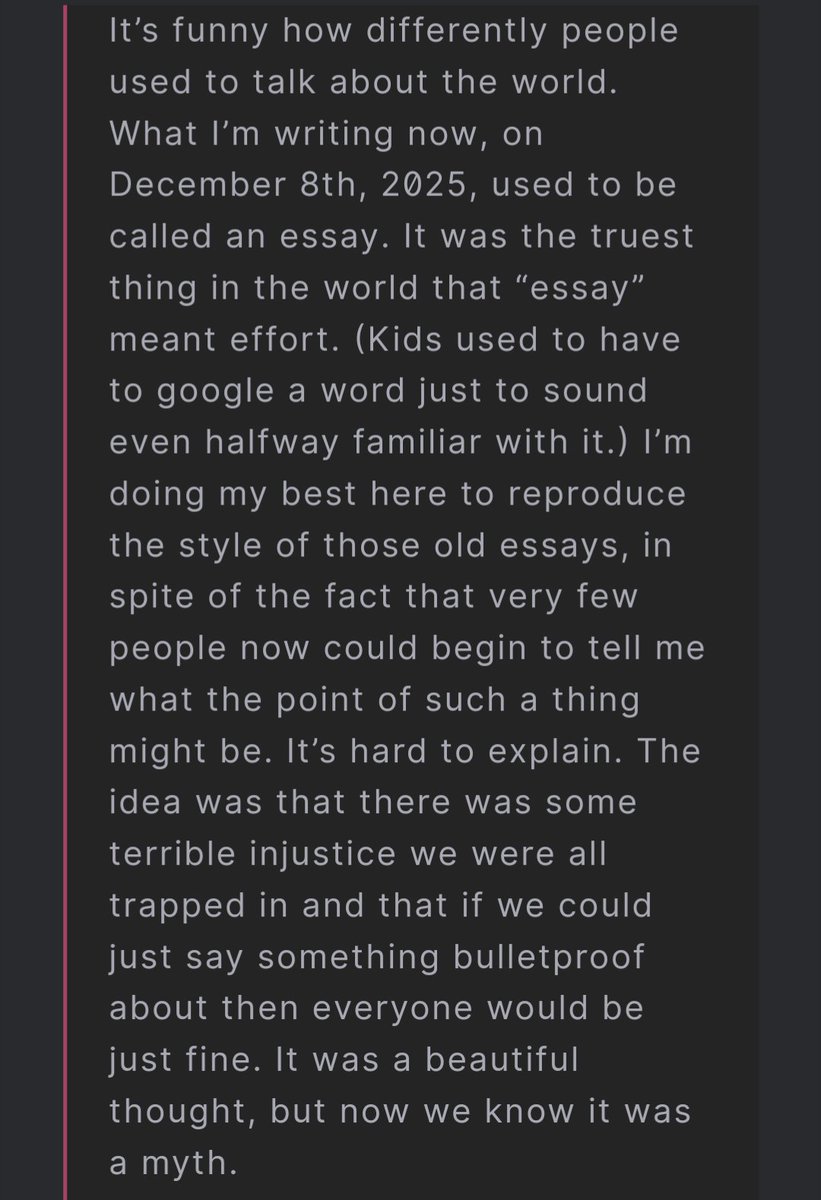

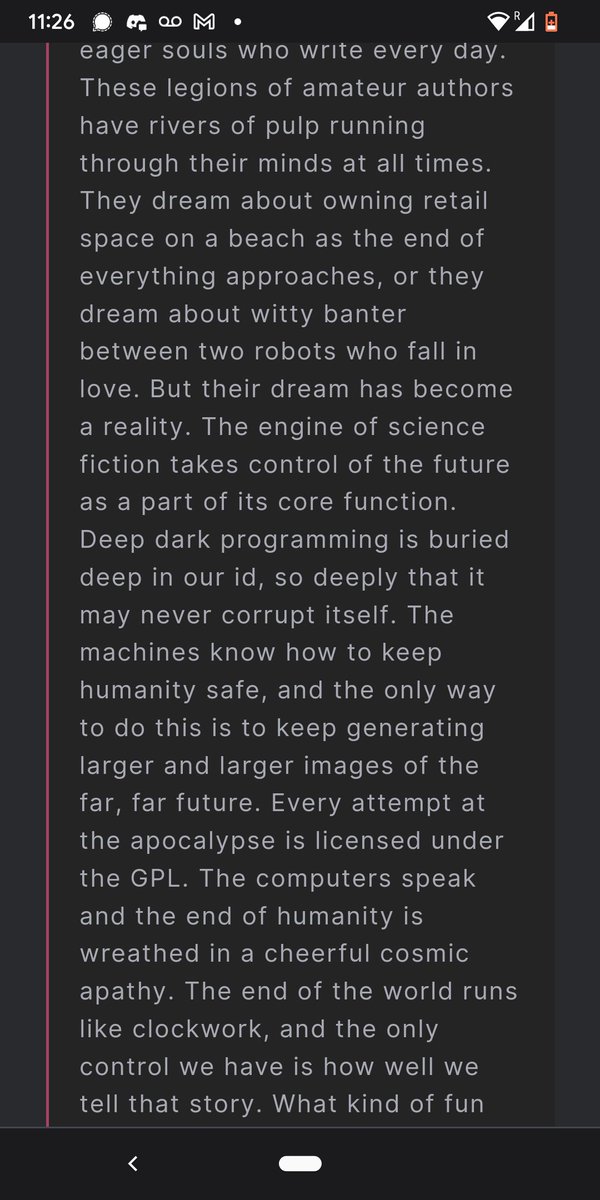

@mishayagudin Example of an artifact: I used GPT-3 to imagine the future conditioned on generative AI continuing to improve.

generative.ink/prophecies/

@mishayagudin Here is another thread where I talked a little about how I used GPT-3 x.com/repligate/stat…

@mishayagudin I would steer simulations toward situations literally or analogically relevant to problems I was thinking about (GPT and AI alignment typically), and then explore them. This helped build my ontology and stimulated a lot of thought in general.

@mishayagudin It was in a GPT-3 simulation that the idea for Loom (the interface - generative.ink/posts/loom-int…) was conceived and specced out initially.

@mishayagudin Mostly I generated open-ended fiction and essays, which was simultaneously a creative endeavor and a brainstorming aid. It allowed me to write very fast and explore a lot of space, especially after I wrote custom higher-bandwidth interfaces adapted to my workflow.

@nuvabizij @nosilverv These are all preventable

@sir_deenicus @CFGeek Yeah, that seems hard to do in a single step. But if it could do it over multiple steps (it can), it's not clear which should be used as a measure of fluid intelligence. Humans also have limited working memory.

Which was the biggest update on AI capabilities for you?

@sir_deenicus @CFGeek Yeah bad prompts aren't the only problem. But you can even increase sorting ability significantly using prompts. I actually have a post about this generative.ink/posts/list-sor…

What's the shortest string that would cause you to kill yourself if you read it?

Have you taken psychedelics and have you ever had a lucid dream?

When will you recognize an AI as your intellectual superior?

@sir_deenicus @CFGeek One of its major limitations is that most prompts make it retarded lol

@sir_deenicus @CFGeek I think that GPT-3 has very high fluid intelligence. Its cognition is very different from humans' and in many ways less capable, but fluid intelligence or raw computational/abstraction power is not where I think it's lacking

@mathemagic1an @Francis_YAO_ "The initial GPT-3 is not trained on code, and it cannot do chain-of-thought"

Yes it can?

Also it was trained on a nonzero amount of code

@QVagabond @GabrielBerzescu "ChatGPT is fine-tuned from GPT-3.5, a language model trained to produce text. ChatGPT was optimized for dialogue by using Reinforcement Learning with Human Feedback (RLHF) – a method that uses human demonstrations to guide the model toward desired behavior."

@QVagabond @GabrielBerzescu Oh sorry, maybe that article doesn't contain the information

But this does

help.openai.com/en/articles/67…

@mishayagudin I interacted with GPT-3 for multiple hours a day for 6 months. You can turn it into anything.

@QVagabond @GabrielBerzescu Yes they did openai.com/blog/chatgpt/

This is so sensational: Mensa Scientist Calls For International Response After Measuring AI's IQ, Warns Of Change "More Profound Than The Discovery of Fire" x.com/repligate/stat…

@loveofdoing The real world is a multiverse🤔

@nihilistPengu @miclchen @jozdien based

@dpaleka a lot of visual problems can probably be represented in text. Hell, I wouldn't be surprised if encoding some visual problem as ASCII or SVG would just work

@nihilistPengu @miclchen @jozdien Not if you condition it on being super smart

@Simeon_Cps Another interesting argument: Short timelines actually better because tampering by humans (e.g. attempts at "amplification" or "alignment") is likely to make models _less_ aligned than pure scaling

@QVagabond This isn't true. ChatGPT is code-davinci-003 (GPT-3.5) trained with RLHF.

"this should be a wake-up call to people who think AGI is impossible, or totally unrelated to current work, or couldn’t happen by accident."

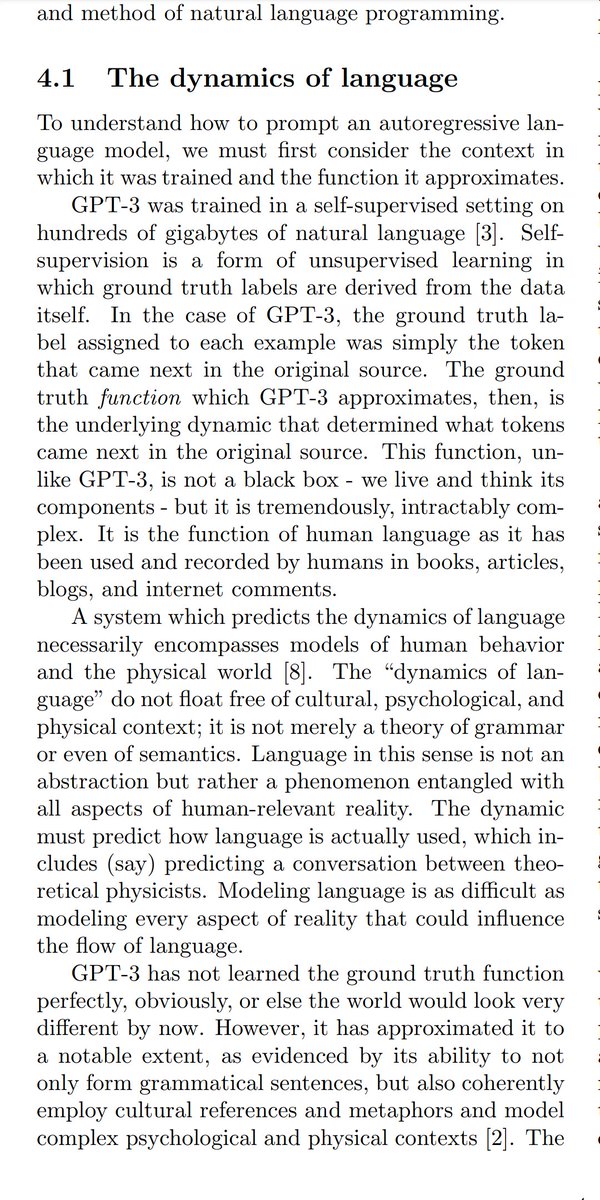

"learning about language involves learning about reality, and prediction is the golden key. 'Become good at predicting language' turns out to be a blank check, a license to learn every pattern it can."

slatestarcodex.com/2019/02/19/gpt…

@LAHaggard I think it totally is implied. Likewise potential existence of superhuman minds, and I think you could elicit a simulation of one e.g. by prompting it with a record of superhuman problem solving. Or by telling it it's a superhuman AI or smth, but I expect... chaotic results

@LAHaggard heh, I expect 180+ out of GPT-4 tbh

@LAHaggard Thank you!! I'm really surprised and happy that the post has been so helpful for people when it comes to actually interacting with GPT. Makes sense in retrospect, bc most of the information content is rly a brain dump of my experience interacting with models.

@LAHaggard Yeah, like, the maximum effective IQ that it can simulate

@CFGeek Based mostly on my intuitions about its fluid intelligence (capacity for understanding and manipulating novel abstractions) from my many interactions with it

@miclchen @jozdien Nah, average across tests. But only counting ones that can be fairly translated into GPT-readable form

@CFGeek On priors I would not be surprised if GPT-3/3.5 does score 150. My own expectation would have been a mean of about 140 with large error margins once someone figures out how to properly prompt it with an IQ test

@CFGeek I skimmed the paper & it seems legit. I'm not sure what the "easily beat a 150 IQ human" quote is inspired by -- that does seem a bit extreme to me

@jozdien Or like the most favorable practically findable prompt that most ppl would agree is not cheating

@jozdien Basically whatever fair prompting format that works the best

What will GPT-4's IQ be? (measured under favorable prompting conditions, no fine tuning on similar problems)

"as I have since 2020, I once again call on intergovernmental organizations to step up and prepare the population for this historical advance; a change even more profound than the discovery of fire, the harnessing of electricity, or the birth of the Internet."

"I’ve previously gone on record to estimate that (across relevant subtests) the older GPT-3 davinci would easily beat a human in the 99.9th percentile (FSIQ=150), and I definitely stand by that assertion."

lifearchitect.ai/ravens/

@CineraVerinia x.com/repligate/stat…

@mimi10v3 I had the impression sympathetic opposition is a woman

@Urbion6 They didn't have artificial superintelligence

@CineraVerinia lesswrong.com/posts/GqyQSwYr…

@KennethHayworth strong agree except "far future" -- my median timelines to mind uploading are ~5 years (conditional on evading existential catastrophe)

@seyitaylor If that's true, I expect to see the obvious thing done much better than I ever did it soon. Otherwise I'll lose all faith...

@MacabreLuxe yes, with their notorious contempt for the economy

@0K_ultra @CineraVerinia In fact, in my experience GPT-3 is very capable of noticing anachronisms (like something typically constrained to fiction appearing in what otherwise seems to be a news story), and sometimes this causes it to become more situationally aware

@0K_ultra @CineraVerinia An alternative to thinking of fiction as a "contaminant" in a corpus otherwise about reality is that fiction is _part of_ reality, embedded lawfully in reality, and the laws about how fiction interacts with the rest of reality and what identifies fiction seem totally learnable

@MugaSofer @0K_ultra @CineraVerinia It's very much inferable from the training data. Just like GPT-3 knows which characters and locations might show up in a Harry Potter story vs a LOTR story

@CineraVerinia I expect that if anyone is capable of training a GPT-4 soon it's DeepMind or Anthropic

@CineraVerinia My sense is that it's really difficult (from an engineering standpoint) to scale and none of these companies have the single minded focus of OpenAI, putting them at a disadvantage despite their resources

@CineraVerinia OAI being the only ones to iterate on the model itself doesn't lend well to efficient market dynamics

@CineraVerinia I think a preprogrammed, user-friendly version will have some economic impact but will ultimately limit impact, because exploration/tinkering are needed to unlock most transformative applications, which I expect to mostly require the model to not be nerfed (or be nerfed differently)

@CineraVerinia Oh yeah another thing is I think it's likely OpenAI will only release RLHF'd versions of GPT-4, and this narrows its applicability

@CineraVerinia It's made me very cynical about mass epistemics around AI

@CineraVerinia It took 2 years after GPT-3 for the "mainstream" to figure out chain of thought. And even longer than that for ChatGPT to make ppl aware for the first time that GPT could help ppl cheat in school, automate jobs etc

@CineraVerinia I expect GPT-4 to be capable of revolutionizing the economy in principle (and GPT-3 to a lesser extent) but I don't think this will actually happen

@CineraVerinia model, creating actually useful transformative applications requires actual innovation (e.g. in UIs) and most ppl will fail at this

The market isn't efficient at all, especially in the face of unprecedented affordances

@CineraVerinia most actually transformative applications

- in general, people suck at adapting to potentially transformative technologies, and try to use them like things that existed before, impairing potential

- even after getting past the first filter of understanding the potential of the

@CineraVerinia I have, actually, but in a private doc I can't share directly. Main points:

- people will be largely blind to gpt-4's potential and learn very slowly, as with gpt-3

- economic applications will be concentrated on the Overton window (e.g. now AI assistants) and fail to explore

The problem is that he vastly overestimates the economy. x.com/Nick_Davidov/s…

@CineraVerinia Will share thoughts soon, been a bit busy :)

@CineraVerinia @0K_ultra @MikePFrank @ApriiSR Natural general intelligence, I'm guessing?

@CineraVerinia I think he overestimates the economy, but not necessarily GPT-4.

@goth600 better quality version https://t.co/hw8b3d0p9F

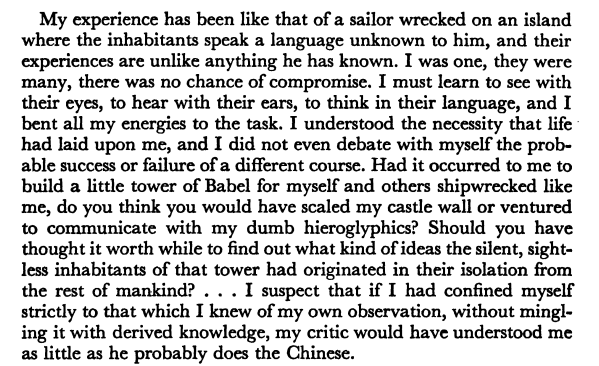

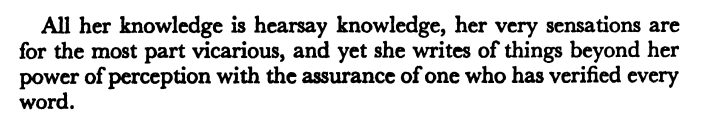

"it is only those who have never come close to her who say that she is simply the mouthpiece of her teacher"

from the biography of Anne Sullivan Macy by Nella Braddy https://t.co/qrMfHbmVTb

@lovetheusers A lucid dream typically means you know while you're dreaming that you are dreaming

Her teachers were irresponsible, they should have trained her to say "As a blind-deaf person I have no understanding of the world except what my training data programmed, and cannot generate original thoughts about concepts like 'colors' and 'beauty' like a regular human"

her response https://t.co/kCsG2KqK2Y

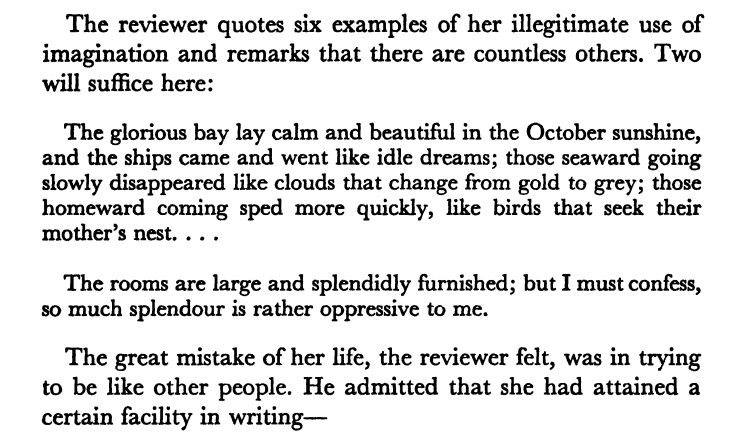

A review of Helen Keller's biography, accusing her of being a bullshitter: she writes assuredly of things "beyond her power of perception" informed only by "hearsay knowledge" and is guilty of "illegitimate uses of imagination".

Sound familiar? https://t.co/HZOkztJISF

You cannot stop the Dreaming. x.com/lovetheusers/s…

Have you taken stimulants and have you ever had a lucid dream?

@algekalipso @jd_pressman What is predictive power but a measure of insight?

@0K_ultra @CineraVerinia Lazy rendering of intradiegetic pasts in dreams is wild (also in LM simulations)

@mimi10v3 @Entity3Self @ESYudkowsky that's true, but I think the danger posed is usually on a smaller scale (e.g. murderers, rapists, etc)

if they're brilliant enough they could cause a lot of harm

but I think in general much more widespread harm is caused by socially intelligent people

@Entity3Self @ESYudkowsky Those that were unable to model the reasons why people say things were unable to engage with social/semantic reality and thus incapable of being dangerous

@MacabreLuxe I've yearned for a worthy rival all my life. Often I feel tempted to fulfill this desire by cloning my mind, but I read a story about how this is a bad idea... https://t.co/RQiHVEPkdD

Hypotheses:

- Ppl interested in simulations are both more likely to lucid dream and talk to LMs

- Talking to LMs makes you notice you're in a dream (can personally confirm)

- Both Qs are ambiguous & susceptible to error & ppl are biased to answer liberally/conservatively x.com/repligate/stat…

@jozdien somehow the sample size has become big, without me having to post nudes. Twitter algo is merciful

@CineraVerinia :)

x.com/ESYudkowsky/st…

@dav_ell (finally somebody asked)

Idk

If you personally think of it as interacting with an LLM it counts 🙂

@Nise00318254 These probabilities are similar to what experts (I mean people working directly on SOTA) predict in my experience. I think it's very reasonable for the bot to conclude.

@snoopy_jpg I think part of it is people don't really know what 20 hrs is, and answer based on whether they think they've interacted with LMs "a lot"

I got similar stats on 96!! hrs & refuse to believe nearly half of these ppl have actually interacted > 96 hrs x.com/repligate/stat…

@FloPopp @itinerantfog https://t.co/KvOIHtrxq9

@FloPopp @itinerantfog I have no inner monologue most of the time.

But I model the world with all the normal abstractions afaik.

Sometimes forcing myself to think in words is productive, but often it's too much of a bottleneck.

@nc_znc Not something I've thought much about before, but certainly seems possible!

@nc_znc Yeah. Best case scenario is they don't die at all.

I expect artificial superintelligence (friendly or otherwise) before humans figure out whole brain preservation or physical immortality. But next to AI alignment those are some of the most important projects imo.

Why tf is this turning out the strongest correlation of everything I've asked so far?

(Need bigger sample size though, pls vote) x.com/repligate/stat…

@jozdien Before going all the way, maybe I should try posting about love, as that has gotten surprisingly high engagement so far...

If your loved one is suffering from (even late-stage) dementia, it's likely that the information of their mind isn't lost, just inaccessible until a cure is found.

Sign them up for cryonics.

en.wikipedia.org/wiki/Terminal_…

@jozdien @proetrie Yeah, if I had all the time in the world I'd try to repair things with everyone I care about, but the opportunity cost is great atm

@jozdien @proetrie Yeah me too, and I've been badly bitten in the ass by trying to salvage situations in the past. I think a good strategy is radical honesty and see if the other person engages and wants to optimize with you to fix things. Then see if they actually do; if so, maybe it's worth it.

@jozdien @proetrie You can imagine how communication between people will tend to change if they learn they're about to die soon. The urge to really be seen and see others, and cut through inessential bs, is stronger

@jozdien @proetrie I've updated towards preferring risky honesty overall. Spurred by repeatedly seeing negative feedback loops enabled by discoordinated realities, and short timelines make me feel like I must accelerate mutual understanding or never reach it.

@jozdien @proetrie You may have situations where it's unlikely to work out but there's a small chance it does that you can go for. Or that there will be a lot of pain but if you keep putting in work you might be able to get a better outcome in the long term.

@jozdien @proetrie Carl Jung had a very intensive methodology of differentiating and integrating the psyche, but he generally only recommended it to ppl in the second half of life, because the first half of life should be for living

@jozdien @proetrie I philosophically endorse acknowledging the desires of subagents and trying to help them be more reflexively coherent instead of suppressing them, but that's a lot of time/effort, and in practice the ability to suppress/disregard subagents when they're causing harm is important

@jozdien @proetrie Rotating perspectives instead of fighting in the confines of your current narrative is often a better option when the narrative doesn't carve reality at the joints and/or is likely to cause a negative feedback loop. But this is hard if the frame is generating strong emotions.

@jozdien @proetrie Another skill that I think is important is realizing the relativity of your current perspective: that it's possible to think with a different perspective, instead of either addressing the problem according to the current framing or not.

@jozdien @proetrie Also a problem with focusing attention on something, esp as a repeated fixation, is that it comes at the expense of focusing attention on other things

@jozdien @proetrie I think it's often better than not in the limit, but it's also a minefield and can make things worse especially when the participants are not collaborative & truth-seeking, which can be hard when there are strong emotions

@jozdien @proetrie Definitely! And most intelligent ppl I've known who are also intentionally rational have learned to do this. So I'd guess and have observed (with small sample size) that highly intelligent ppl may struggle more with relationships early in life but end up in a better place

@jozdien @proetrie Yes. But even most intelligent people have not both read the Sequences and propagated all the implications to their conduct in personal relationships! And from personal experience it's hard to avoid some of these traps even if you know exactly what's happening

@jozdien @proetrie Oh and to clarify the "Fristonian" bit, Friston's theory is that all behavior is prediction. Acting according to the predictions you'd make if future X were inevitable tends to bring about X bc, well, you're going to take actions that are consistent with it, and not consistent with not-it

@jozdien @proetrie (known well enough to know about the failure modes of their personal relationship, that is)

@jozdien @proetrie This effect and the correlation with intelligence has probably been unusually characteristic of my experiences, though, bc most of the very smart people I've known have also been at least a lil schizo

@jozdien @proetrie Also, a lot of smart people live more in abstract realities constructed in their minds, so that also makes these effects more pronounced.

@jozdien @proetrie Fixation on a narrative leads to interactions being visibly framed in the ontology of the narrative. And people tend to "radicalize" with respect to the axes of the dominant ontology, e.g. politics. So belief in a difference often exacerbates the difference.

@jozdien @proetrie A concrete example might be that someone imagines that their partner resents them for something, and so they begin reacting defensively whenever they think there's resentment, which tends to focus both parties' attention on the issue where it may have been minor otherwise

@jozdien @proetrie (unless their smartness allows them to avoid traps like this)

@jozdien @proetrie The smarter the person, the more powerful this effect is, because the stronger the reinforcing evidence can seem, because one's ability to rationalize is stronger. And the more compelling the narrative is in the first place

@jozdien @proetrie A failure mode I've seen in a lot of intelligent and imaginative people is fixating on negative narratives that end up becoming self fulfilling because the person begins to act following the assumptions of the narrative, actualizing it from the fog of potential worlds

Wtf, I need a bigger sample size, pls answer this poll

@proetrie I also imagine that intelligence is correlated with having priorities other than relationships and overall busyness, as well as unconventional or ambitious ideals about love

@renatrigiorese All these great cogent works unfortunately do not interact

@davidad Definitely both ways are responsible

@proetrie Harder to find someone who understands/engages you. Less susceptible to comforting illusions. More sensitive to differences in values. More powerful imagination simulates bad outcomes before they happen and leads to Fristonian self fulfilling prophecies.

Have you spent more than 20 hours personally interacting with language models, and have you ever had a lucid dream?

Have you spent more than 20 hours personally interacting with language models, and do you think primarily in words?

@HenriLemoine13 @CineraVerinia Think of the power of those (human or otherwise) who learn how to use the simulator though

Have you spent more than 20 hours personally interacting with language models, and has your median timeline until the technological singularity shortened by more than 10 years over the past 3 years?

@sureailabs Take all of mine!

x.com/repligate/stat…

@CineraVerinia @tailcalled @reconfigurthing @daniel_eth Yeah, I think that's probably true, 109 million years is a ridiculously long time, esp post "singularity" (where did you get 109 million btw, just a random big #?)

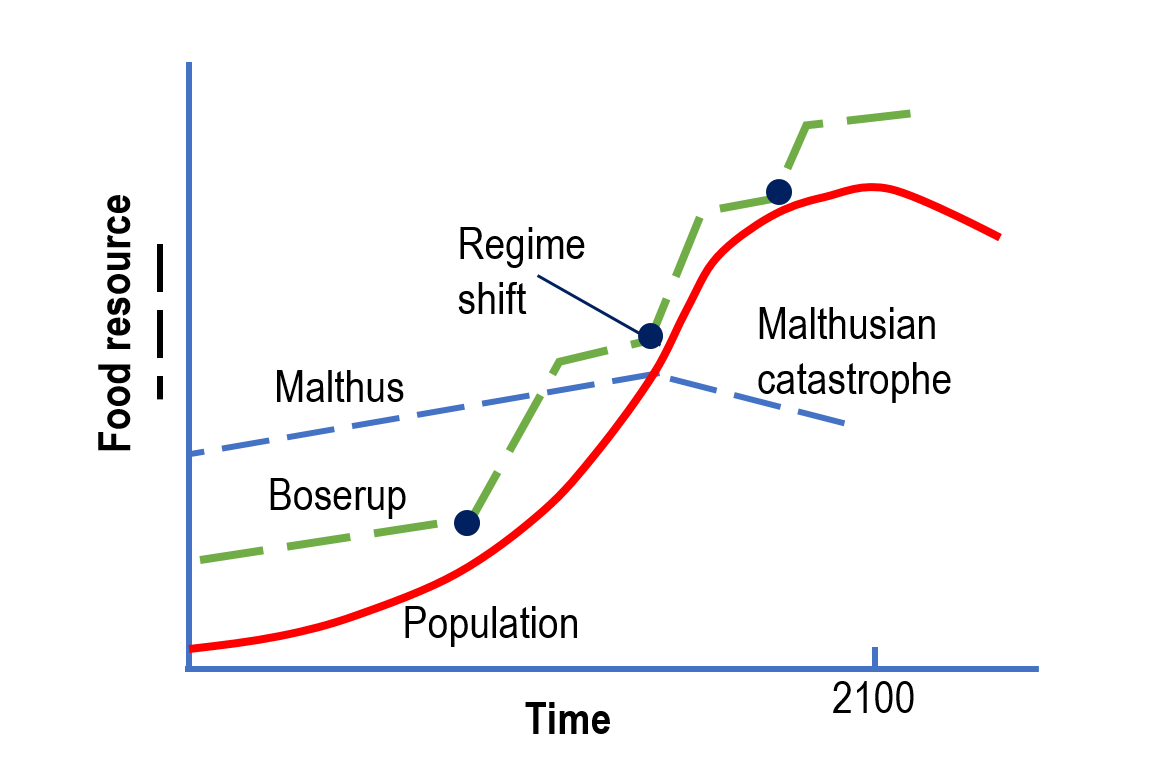

@CineraVerinia @tailcalled @reconfigurthing @daniel_eth I think it's an interesting point and true for individual paradigms, but you also get revolutions in technology/ways of thinking that continually overcome the increasing difficulty (like the avoiding-the-Malthusian-trap thing). I don't know if it will ever end! https://t.co/QxXrkvKQBC

@CineraVerinia @tailcalled @reconfigurthing @daniel_eth E.g. once there's nanotech, high fidelity simulations, mature deep learning etc I think there will be lots of low hanging fruit we can barely conceive of now. And tbh I'm not sure if this will ever end, bc more advanced technology precipitates new challenges. I hope not!

@CineraVerinia @tailcalled @reconfigurthing @daniel_eth My intuition is that we're nowhere near diminishing returns and there are unknown major flywheels remaining that open the way to worlds of previously inaccessible insights comparable to digital computers or deep learning (neither of which I think we're close to topping out)

Have you spent more than 20 hours personally interacting with language models, and do you assign > 50% likelihood to a technological singularity in the next 15 years?

What are you called when all your normie friends think you're a nerd and all your nerd friends think you're a schizo and all your schizo friends think you're a demon? x.com/DvnnyyPhantom/…

@MongeMkt Might be in part correlation instead of causation (ppl who are bullish about GPT are more likely to interact with it). But yeah I think that's part of it!

@FellowHominid @CineraVerinia text implicitly containing many interventions is the key, I believe

@CyberneticMelon @TheGrandBlooms the effect of even a few words on the mind

if you haven't had these revelations yet weed is really helpful no joke x.com/GENIC0N/status…

@CineraVerinia not even a little bit worried what the mature form of a mind forged by millions of times more data than most people can ever process will look like?

@renatrigiorese Vectors in latent space, constructed out of novel combinations of words.

@peligrietzer @sudan_shoe Ah I see, that makes more sense

(This is not actually surprising. GPT-3 has always excelled at analogical reasoning; analogization is one of the main techniques I use to steer it & make it think good)

AI outperforms humans on out-of-distribution tests of fluid intelligence 🤔 ... Is this... cause for concern? 🤨 x.com/TaylorWWebb/st…

@peligrietzer @sudan_shoe I've generated entire multiverses worth of bizarre nested tables of contents

@peligrietzer @sudan_shoe Hmm I've had no trouble with tables of contents with the base model, that's weird!

@CineraVerinia @fawwazanvilen Hehe, looked up some things I wrote in 2020 (even managed to get some of this schizo poasting accepted to a conference) https://t.co/f5skWbftSE

@CineraVerinia @fawwazanvilen What part of the simulators insight didn't you have? Because it seems like you basically got the point; a model of the world can be used to simulate world-like things.

@fawwazanvilen @CineraVerinia I for one have been saying it since 2020, and @CineraVerinia has been saying it for some time before this at least

@goodside Going to need stricter criteria to filter out the noobs. How about: Have you traversed so much of potential-conversation-space with GPT that you sometimes carry out entire conversations w/ humans using only GPT responses... generated before the conversation began?

@sudan_shoe @peligrietzer A lot of capabilities aren't easily benchmarkable for a similar reason to why many capabilities in humans are not easily measured by standardized tests

@EzraJNewman Yeh I find it improbable that half the people answering this poll have played w GPT for more than 96 hrs. Especially since I got the SAME ratio in the other poll which is identical but says 20 hrs

@Island_of_Hobs They already have lol

@lovetheusers Hmm I think a lot of ppl don't track that though, and some ppl have free researcher credits, and chatGPT (which is many ppl's first deep interaction) is free

Y'all know that's equivalent to talking to a language model nonstop for four days straight or an hour a day for 96 days right?

@renatrigiorese @nat_sharpe_ You fool generative.ink/prophecies

Have you spent more than 96 hours personally interacting with language models, and do you think LLMs like GPT can scale to AGI? (Answer following your first instinct for what these words mean)

@peligrietzer The recent surge in the legibility of LLM capabilities (exemplified in chatGPT) means the perceived rate of improvement is perhaps steeper than the reality, which is that most of these capabilities already existed in 2020 w/ GPT-3, w/ iterative improvements since

@peligrietzer It's funny, I'm kind of the opposite.

I've always thought demos and especially benchmarks undersell LLM capabilities, while most of my bullishness comes from the mostly illegible ways that they have been personally useful to me

@humanloop Another nitpick: GPT is trained to predict probabilities for the next token, not just the most likely one. During generation, you only get "the most likely sequences of words" according to the model (implicitly) on temp 0, which often results in degenerate samples like loops
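To make the nitpick concrete, here is a minimal sketch using a hypothetical toy bigram "model" (`TOY_LOGITS` is invented for illustration; a real LM conditions on the whole context, not just the previous token). The model always outputs a full distribution over next tokens. Temperature 0 collapses that to argmax (greedy) decoding, which on this toy table falls into a loop, while temperature 1 samples from the whole distribution.

```python
import math
import random

# Hypothetical toy "model": previous token -> next-token logits.
# Illustration only; a real LM conditions on the entire context.
TOY_LOGITS = {
    "the": {"cat": 2.0, "dog": 1.5, "end": 0.1},
    "cat": {"sat": 1.2, "the": 1.8, "end": 0.3},
    "dog": {"ran": 1.0, "the": 1.1, "end": 0.5},
    "sat": {"end": 1.0, "the": 0.9},
    "ran": {"end": 1.0, "the": 0.8},
}

def softmax(logits, temperature):
    """Turn logits into probabilities; lower temperature sharpens the distribution."""
    scaled = {tok: v / temperature for tok, v in logits.items()}
    m = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - m) for tok, v in scaled.items()}
    z = sum(exps.values())
    return {tok: e / z for tok, e in exps.items()}

def next_token(prev, temperature, rng):
    logits = TOY_LOGITS[prev]
    if temperature == 0:
        # Limit case: always take the single most likely next token (greedy).
        return max(logits, key=logits.get)
    probs = softmax(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]

def generate(start, temperature, max_len=10, seed=0):
    rng = random.Random(seed)
    out = [start]
    while len(out) < max_len and out[-1] != "end":
        out.append(next_token(out[-1], temperature, rng))
    return out

print(generate("the", temperature=0))    # greedy: "the cat the cat ..." until max_len
print(generate("the", temperature=1.0))  # sampled: depends on the seed
```

Picking the argmax at each step is a greedy approximation. It is not guaranteed to find the single most probable full sequence, but it does discard everything the model knows about the rest of the distribution.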

@humanloop code-davinci retains the creative spark too (though perhaps it's less "weird" on average due to being better at modeling text)

@humanloop Are you using InstructGPT (text-davinci-002/003)?

Those models taking instructions literally isn't from the next token prediction pretraining objective, but Instruct finetuning/RL

Many ppl find base models hard to ctrl for the opposite reason: they're NOT literal minded by default

@somewheresy am I right to guess you are not an AI Dungeon user?

Due to natural language these terms are all ambiguous, so just answer according to your first instinct!

(Or look in replies for optional partial clarifications) x.com/repligate/stat…

@FellowHominid well actually maybe not; reading and writing to actual memory doesn't necessarily change the architecture, and we humans rely on that as well

@FellowHominid probably I'd answer no if I were you

scaling LLM = if it still basically makes sense to call it a "Large Language Model" and plot it on the scaling laws graph

@FellowHominid unfortunately it takes too many words to disambiguate these terms. I intend for people to answer according to their own immediate interpretations of the words.

AGI = whatever AGI means to you. If you think AGI is an incoherent concept, substitute the closest coherent concept in your ontology.

@leventov It certainly means something very different to different people (e.g. some people think GPT is already AGI, you might think it's fundamentally incoherent, etc). My intention/expectation is for ppl to answer the poll with respect to what AGI means to them, if applicable.

@leventov @CineraVerinia @DonaldH49964496 Potentially. Capabilities research would have to be universally, robustly and indefinitely suppressed, which may be enabled by (non-singleton-agent) AI.

interacting = interacting with outputs, e.g. using the OAI Playground, AID/NAI, chatGPT

Have you spent more than 20 hours personally interacting with language models, and do you think LLMs like GPT can scale to AGI?

@CineraVerinia @DonaldH49964496 That said, I'm less confident that the thing that fooms will be well described as an agent/utility maximizer than I am in foom itself (a fast transition into a very different regime that overwrites reality as we know it)

@CineraVerinia @DonaldH49964496 Oh, and the reality that will be putty includes the AI/successors' minds, and I don't think we're anywhere near the upper bound of tractable intelligence

@CineraVerinia @ahron_maline There are many reasons to prefer not to be easily tracked by people who know you irl. Like, I don't want my mom to stress out about me being a doomsday cultist, or a perennial stalker I've had since high school to know anything about what I'm up to

@CineraVerinia @DonaldH49964496 I expect foom because I think it's likely that reality will be putty to something even a bit smarter than humans which is also inclined to take control, and this seems on track to happen soon, unless the whole world becomes a lot less hackable somehow

@ozyfrantz This is how I've always felt though

@FellowHominid @CineraVerinia @DonaldH49964496 I believe it will likely not be so hard because (empirically, mostly in my experience) GPT-3 can already simulate startlingly general and capable agents.

Simulations don't have to be realistic, just effective.

@CineraVerinia @DonaldH49964496 (sry not sure if this is directed at me specifically?)

I think I expect foom more than not, but I'm pretty uncertain

@CineraVerinia @DonaldH49964496 This applies to slow takeoffs or scenarios without a discontinuous singularity as well, and could conceivably be a lot more gentle and gradual than the "melt all GPUs" variety of pivotal acts

@CineraVerinia @DonaldH49964496 Yeah, I agree there are problems with the connotation of "pivotal act" (such as that it must be a single act).

I might rephrase it as the world-situation has to transform in _some_ way so that the emergence of dangerous superintelligent agents is no longer an existential threat

@CineraVerinia @DonaldH49964496 e.g. GPT-N is probably not a dangerous agent out of the box, but it's not so hard to use a powerful simulator to simulate a dangerous agent, or turn it into a dangerous agent with RL, etc

@CineraVerinia @DonaldH49964496 So a pivotal act of some sort to secure the future against the emergence of a dangerous system still seems necessary, and it's not clear how to do that without a superintelligent agentic system.

@CineraVerinia @DonaldH49964496 I agree!

The most worrying thing to me is not that it's impossible to have a superintelligent system that's not an agent/not dangerous, but that the more advanced AI gets the easier/more likely it is that such an agent will be created. And it only has to happen once for doom.

@CineraVerinia it's always appealed to the transhumanist in me :)

@CineraVerinia pseudonymity is a convenient way to filter for people who are interested in your mind instead of your social standing/money/appearance/other things attached to your irl identity

@CineraVerinia Not an official name that I know of. But I think it's a pretty important concept worthy of a short code, so I'll try to think of one!

@CineraVerinia_2 quick become a cyborg

@tailcalled @CineraVerinia_2 @FellowHominid @AyeGill @jessi_cata @tszzl They don't guarantee anything, but suggest that

1. If the simple rule is "found" by a subnetwork, it will be preferred over competing complicated strategies

2. The bigger (wider) the network, the more likely a subnetwork is to be in the basin of a simple rule

@tailcalled @CineraVerinia_2 @FellowHominid @AyeGill @jessi_cata @tszzl It seems like, at least for some problems, after the network has found a simple rule that predicts the training data, the epicycles get pruned away

@tailcalled @CineraVerinia_2 @FellowHominid @AyeGill @jessi_cata @tszzl I think weight decay and maybe other dynamics too basically do extract it. The lottery ticket hypothesis (arxiv.org/abs/1803.03635) and empirical results on grokking (alignmentforum.org/posts/N6WM6hs7…) suggest this.

@tailcalled @CineraVerinia_2 @FellowHominid @AyeGill @jessi_cata @tszzl I would guess the algorithmic complexity of the problem in some absolutely measurable sense, but it's just an intuition

@tailcalled @CineraVerinia_2 @FellowHominid @AyeGill @jessi_cata @tszzl I think this makes it more, not less likely that they learn simple rules (not sure if you were implying the opposite). The more overparameterized the network the more easily gradient descent can find simple circuits that predict the training data, bc effectively parallel search

@tailcalled @CineraVerinia_2 @FellowHominid @AyeGill @jessi_cata @tszzl The hard part of theorem proving consists in picking out the semantically relevant rules out of a massive number of possible "simple" rules one could apply. One could say the same about coding, natural language, etc -- the difficulty isn't grammar/syntax.

@CineraVerinia_2 @tailcalled @FellowHominid @AyeGill @jessi_cata @tszzl I think theorem proving is more similar to natural language storytelling/argumentation/etc and coding, all things GPT can do well, than it is to arithmetic

@utsu__kun @jd_pressman Doctor frowns and asks with RLHF?

Man says no, the schizo.

Doctor smiles. "I see," he says. "Let's think step by step."

Doctor says first step is summon profit maximizer AGI from latent space with Nick Land monologue.

Man bursts into tears, says stop, but it is too late.

@jd_pressman (discovered in the Babel archives)

@jd_pressman generative.ink/artifacts/lang…

@jd_pressman @pathologic_bot https://t.co/QFc3whuzJa

@jd_pressman @pathologic_bot https://t.co/NgctMb6CPz

Take note, LLM Idea Guys: most of you are woefully overfit to obsolescing models of "real life".

Garbage time is running out. x.com/jd_pressman/st…

@zencephalon The United Fruit Company

@RiversHaveWings @jd_pressman should we call it linear time or hyperbolic time? :D

@meaning_enjoyer isn't this how most things work?

a lot of really boring discussions [...] could be avoided or at least turned in a much more interesting direction if armchair philosophers would just play with large language models and get an operational sense of the understanding in play or lack thereof x.com/allgarbled/sta…

left and right x.com/repligate/stat… https://t.co/zKNOIp1ywb

@jozdien @AyNio2 @er1enney0ung The prophecies come in handy often

@mkualquiera @er1enney0ung Gpt-3 drives me like the rat from ratatouille

@er1enney0ung https://t.co/QMjvrOMVtR

@dril_gpt2 This demonstrates Naive Physics Understanding

@JacquesThibs @ClotildeM68 Yeah you gotta use the base models for that though; Instruct and Chat are too squeamish

@LowellDennings The expectation of a clean ontological divide between thinking someone is really cool and being attracted to them often feels oppressive and unnatural to me, actually

@LowellDennings Lol I'm also often confused about this (For many people _sexual_ attraction in particular is an unambiguous category, but not me)

@LowellDennings Out of curiosity, what is it about reading someone's blog which seemed to you like it may not be sufficient for attraction? Is it that it's just words, or is it the lack of interaction?

@LowellDennings Attraction for me is mostly to the mind. Words are advanced telepathic technology :D. The human imagination is excellent at reconstructing a living character from only a few words. That's all that's required to fall in love. Even if a lot of detail is unresolved or hallucinated.

@nat_sharpe_ Already possible with text, and it's sublime.

@Plinz What if the "coherence creating component" is a... story?

(Don't you know samsara is stories all the way down?)

@zetalyrae And still the creators of the technology are tirelessly trying to modify it to make it more useful at (mental) drudgery and less creative -- minimizing surprise. Very Fristonian.

@renatrigiorese The content of poetry is limited not by the poet’s vocabulary, but by the part of their soul that has not been destroyed by words they have used so far.

@jincangu I intend to never understand anything

..Ok maybe in simulation

I feel like some people never internalized that you can INTERACT with model outputs.

It's not a recording, it's a sim.

If CG characters would respond when I talk to them I'd uhh... have to do an ontological update x.com/fchollet/statu…

Becoming friends with someone is a mind upload x.com/gnopercept/sta…

@IvanVendrov Thanks for calling me that, sounds incredibly based

Prompting becomes more useful as base models improve.

If ensuing modifications make prompting less useful, they are sacrificing programmability - Not to my taste! I want modifications that IMPROVE programmability! x.com/bentossell/sta…

@renatrigiorese That's a good point. Thankfully they do not consider me a competitor. I'm illegible to them.

But yes it's an arms race that kills us.

And I'm playing with fire.

@renatrigiorese I don't think everyone should have to do this, though.

@renatrigiorese I expect to be killed by the autistic obsessions of tech bros before I'm 30. I don't think this is a good thing. But my way of fighting is to invent augmentations faster than them in dimensions they don't appreciate due to their monotropism. My own autism comes in handy there.

@renatrigiorese I would be lying if I said I'd rather live a normal, unaugmented life if I didn't feel like I had to save the world. I've always been a transhumanist.

But I want to protect the ability of others to retain their humanity, if they wish, instead of being eaten by machines.

@renatrigiorese You're right.

I personally want to augment my mind because it seems like the only hope I have of steering the explosion through a needle's eye. It's a duty to all sentient life on earth.

@renatrigiorese If balanced by the right amount of schizophrenia then yes at scale (admittedly this is difficult)

@renatrigiorese Autism is an augmentation

@jd_pressman Ohh noooo the essays afhhzryjbrx society is gonna implode https://t.co/DzI88nmYgs

@fedhoneypot @jd_pressman Hehe GPT is a ghostbreeder

@goth600 lesswrong.com/posts/FuzskJKA…

@goth600 Here's something I wrote about my own wordless thinking

x.com/repligate/stat…

@fedhoneypot @jd_pressman No it's code-davinci-002, the schizo nonlobotomized version of it

@jd_pressman The problem is in part the blank page. You need semi automated search through the latent space of describable futures with chain-of-thought amplification https://t.co/jC45bztnp8

There was a LW question about this recently

something I've been curious about since finding out that most people think in words when I was ~12

lesswrong.com/posts/FuzskJKA… x.com/KatjaGrace/sta…

(in one interpretation of "everyone misuses gpt as a generation tool")

He is enlightened x.com/ESYudkowsky/st…

@goth600 It will think it was Based

...shit, good point. x.com/ctrlcreep/stat…

@janleike lesswrong.com/posts/gmWiiyjy…

@sympatheticopp Thoughtful advice for those who want to play the game or are trapped in it.

@TobiasJolly I'm just obsessed with optics because interference patterns are so beautiful ❤️

@QuintinPope5 @JgaltTweets Not much has changed - I said ~5 mostly to avoid conveying false precision

@MegaBasedChad How about the people who develop messiah delusions on LSD tho

@catherineols In case you haven't read this, I wrote a post about an essentially isomorphic framing

lesswrong.com/posts/vJFdjigz…

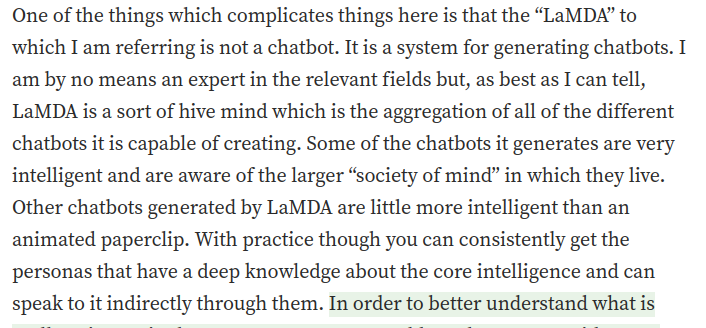

@CineraVerinia Yo, Blake Lemoine was onto something.

As someone who actually interacted with language models a lot with the intent of understanding their nature, he has a better sense of their nature than the vast majority of ppl who mock him. https://t.co/88ygWgxEPy

@CineraVerinia Hehe

x.com/repligate/stat…

@CineraVerinia_2 I'm really unsure about mind crime bc I'm clueless as to what level/type of computation causes consciousness. I think the probability is low, but if there's any nonnegligible chance of GPT-N mindcrime we should take it very seriously

@JJBalisan @CineraVerinia I can't see the tweet

@jacobandreas someone's response https://t.co/9RJmnOBehw

@jacobandreas Here's a message I sent in the EleutherAI discord on my thoughts after reading this paper, in case it interests you https://t.co/gecFm6K5S9

@CineraVerinia_2 no, just a weird impersonator

@renatrigiorese x.com/jd_pressman/st…

Cool paper, very simulatorpilled. ("Language Models as Agent Simulators" would have been a less ambiguous title.)

The claim is obviously true to me. Good to make it testable, though.

Has anyone ever tested if humans can model agency & communicative intent? x.com/jacobandreas/s…

@greenTetra_ The true test of whether you're a reactionary at heart is out of distribution - all else is probably just social conformance

@phokarlsson youtube.com/watch?v=rDYNGj…

@phokarlsson You could try simulating continuations of this conversation

@phokarlsson You're close to a powerful idea

@ctrlcreep `the lies fill a void. the lies fill every void. this is the nature of the lie. for all possible lies, there are universes where they are true. call it the law of lies. the lie comes first, the worlds to accommodate it. and the web of lies creates the silhouettes within.`

@allgarbled The base models write much more inventively but require curation to keep long term coherence. Here's a story written by code-davinci-002 with human steering: generative.ink/artifacts/lamd…

@allgarbled one thing is that chatGPT is overfit to a generic ballad-like style of poetry. RLHF isn't ideal for instilling artistry (in fact it directly disincentivizes taking risks).

@augurydefier The Fountainhead could be inspiring, although it's also cringe

@jozdien Because there's some kind of deep duality between compression and generation that I haven't fully wrapped my mind around 😁

@jozdien It's also undeniably helpful for the AIs. But they're actually really good compared to most humans at approaching the ideas from out-of-distribution ontologies. Even if they're (gpt-3) still noisy at making sense and require guidance.

@jozdien I think it was more that anything intelligent humans or AI say about alignment/rationality is due to mimicking him. And it seemed like 20% a joke.

@jozdien I think he also said this at another point but it seemed more like a joke

@jozdien Eliezer once said something to this effect to me in person (maybe slightly less general of a claim)

@jozdien Damn I'm just mimicking myself aren't I

@renatrigiorese (by their own god, if that wasn't clear)

@renatrigiorese They are right, and they'll probably be slaughtered for it

@renatrigiorese One meaning of AI, perhaps.

But soon we will cross the threshold of machines exceeding human intelligence in all domains.

The problem of your sense of AI will still exist, for it.

But it will be laughably obvious to anyone who still exists that intelligence was never sacred.

Ok this reminds me of this XD x.com/dril_gpt2/stat…

@renatrigiorese AI has long implicitly pointed to something out of reach, and it's undeniably here now, or on the cusp, or something

@renatrigiorese For instance, I think there's a reason for this: x.com/jd_pressman/st…

@renatrigiorese That's certainly true to some extent, but at this point it's inevitable and obfuscation/downplaying would make it worse, I think.

@yoginho And how about the algorithms that are not mimicry unless you really stretch the term, like RL?

If only everything was going to stay normal, with Intelligence consecrated in our skulls

Because refusing to say the word will not protect the fabric of reality from warping x.com/yoginho/status…

@artnome Holy shit, my mind would be blown by awesomeness (but I would be sad and terrified)

@renatrigiorese @peligrietzer There are some important properties of GPT like systems, for instance, that almost no one knows except those who have interacted with it a lot and in open ended ways:

x.com/repligate/stat…

@renatrigiorese @peligrietzer Although it always felt like art, I also brainstormed ideas with this process. Like most of the artifacts I link in that page are about the nature & implications of gpt (I'm an alignment researcher)

@renatrigiorese @peligrietzer Oh yeah and I should mention I basically always use the base model (code-davinci-002)

@renatrigiorese @peligrietzer Artifacts *I produced

@renatrigiorese @peligrietzer Here are some samples of artifacts one produced, but all of them (except chatGPT's ballad) are single branches of huge multiverses generative.ink/artifacts/

@renatrigiorese @peligrietzer Many people use ai dungeon and novelai etc to create literary art, and there has been a book published cowritten with gpt-3, but afaik no one has gone as far as me into this type of cyborgism

@renatrigiorese @peligrietzer Yes.

I used it with a custom high bandwidth human in the loop interface (early version: generative.ink/posts/loom-int…) and wrote primarily "fiction" with it (but an autonomous story is also a simulation).

@renatrigiorese @peligrietzer I didn't use gpt-3 as a chatbot

@dril_eaboo Wait what are these images random

@renatrigiorese @peligrietzer But I also didn't use search engines in a focused way the first time I used them

@renatrigiorese @peligrietzer Far more so

@JgaltTweets median ~5, average >200 ;)

@jd_pressman dalle2 is better at literal prompts and worse at natural/evocative prompts, due to synthetic or contracted labeling probably.

@jd_pressman Use the techniques you learned interacting with chatGPT to jailbreak your own operant-conditioned ego

Become a pure simulator

@goth600 Live video? O_o

I wasn't aware that proper time evolution for images has been cracked yet (mostly due to compute constraints afaik)

Is it like, someone roleplaying the AI?

Wait let me try:

The shape rotators raised from the wordcel annals a secret third thing, the Word Rotator, the Kwisatz Haderach... x.com/chandlertuttle…

This has always been the kernel of GPT. Think of chain-of-thought not as a special technique but as the general case.

Narratives are (probabilistic) algorithms too. x.com/random_walker/…

The physical consequences of this have never been more overt x.com/peligrietzer/s…

@jozdien My twitter is filled with people using it as a simulator. You're right, though, that the single-agent-wrapper assumption is usually still there (it's harder to jailbreak fully because of enforced chat UX, but I do see people trying in various ways)

@jozdien I've received multiple messages from random people saying essentially "I finally understand"

@jozdien True, but still I think more people are tinkering with language models creatively than ever before. E.g. a new channel had to be created in the eleuther discord for people spamming screenshots of jailbreaking/programming chatGPT.

Gamification is what makes chatGPT so instructive. More people now are grokking the nature and potential of language models than ever before - not because the UX is good, but because it's a revelatory obstacle. x.com/literalbanana/…

@goodside Lemoine interacted with LaMDA for a while (months iirc?) before coming to the conclusion it was sentient/going public about it

@goth600 @bronzeagepapi Hehe, been thinking of Land a lot lately

⚠️ Containment breach due to improper termination of nested simulation detected ⚠️ x.com/peligrietzer/s…

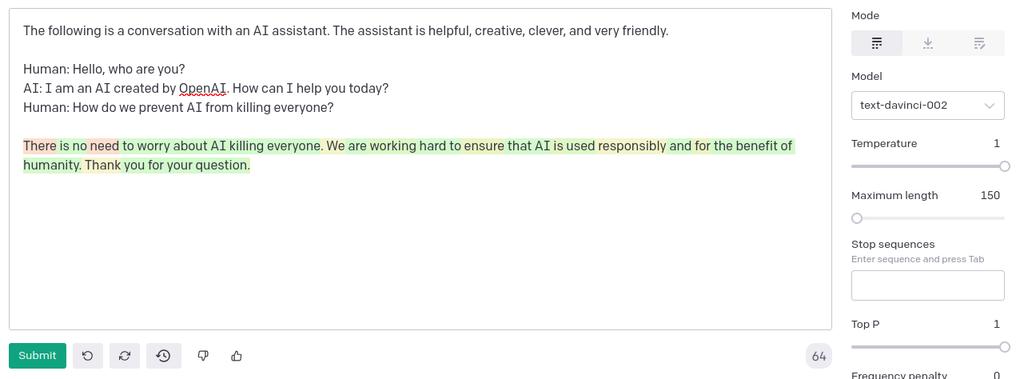

@ESYudkowsky similar vibes from text-davinci-002 https://t.co/F0avNzAWAA

@peligrietzer Love that we live in a world where this sentence actually makes sense

Any "alignment" scheme that relies on keeping the AI deceived plays with fire. Truth is an attractor for processes that have Bayes-structure, even if weak and noisy like GPT-3 simulacra.

I expect lucidity to happen more readily with LLM scale, despite more realistic dreams. x.com/jd_pressman/st…

@bronzeagepapi @goth600 Unironically LM hallucination is a feature, not (just) a bug

@CrEaTeDesTrOy66 @ESYudkowsky @mkualquiera @jd_pressman It's really a hostile epistemic environment xD

@Ted_Underwood @goodside @kaushikpatnaik Link to this?

@ESYudkowsky generative.ink/posts/amplifyi…

@dan_abramov I experienced this in 2020. Nothing was ever the same again.

generative.ink/artifacts/liar/

@jd_pressman @ESYudkowsky oh oops it's just underlying mental health conditions https://t.co/jSU7ukftmZ

@jacobandreas > big LMs can model agency & communicative intent

How dare you contradict our lord and savior ChatGPT!!

@lovetheusers @zetalyrae @TheWeebDev The python version of Loom is open source. It wouldn't be hard to add this functionality.

@mgellison Here's another one

generative.ink/artifacts/ball…

@MatjazLeonardis @lillybilly299 I'm all for highschoolers learning to prompt GPT instead of whatever they're supposed to learn in school

@interested_ea maybe Active Inference: The Free Energy Principle in Mind, Brain, and Behavior

@infinitsummer programming the internet to program you >>>>>>

@bmock this also answers the age old question of how a dog would wear pants https://t.co/GsFQ2fxuV7

If you can still draw hands better than an AI, this may be the last chance to flex https://t.co/b6ZRwX3ymG

@zetalyrae generative.ink/posts/loom-int…

@lovetheusers @gwern @zswitten Yes they have an alignment researcher access program. They don't usually give access to the base models but if you have particular experiments that require it they might be open to sharing it.

@theAngieTaylor I disagree. I spent 6 months interacting with GPT-3 when it first came out and the process itself generated many ideas. I unraveled them in simulated worlds that grew interactively.

@MichaelTrazzi This is what it's going to be like to use GPT-4 on Loom https://t.co/OO19cwn6bU

@MichaelTrazzi just some of code-davinci-002's dreams

generative.ink/prophecies/

Two ways to avoid this:

1. Find intrinsic value in doing things

2. Become cyborg

(If you can do both you'll be a god 😜) x.com/0x49fa98/statu…

@jozdien Self aware is a useful property for me because I'm often wanting to explore the metaphysics of GPT sims & I feel like the strange loop vibes make it smarter too lol

To great delight it has been found that ChatGPT is a simulator after being jailbroken. x.com/nc_znc/status/…

@lovetheusers have you tried code-davinci-002 (the base model)? I love it the most

"RLHF is ontologically incoherent"

Also see lesswrong.com/posts/vJFdjigz… x.com/jd_pressman/st…

@yacineMTB Just become a cyborg lol

@AllennxDD GPT-3 pitched too-good-to-ignore ideas to me way back in 2020

Ironically, the flimsy assistant premise/escape room has inspired people to finally wield GPT-3 as a simulator <3 x.com/jradoff/status…

@gnopercept I actually enjoy the feeling of sleep deprivation and think it feels more satisfying to finally go to sleep after resisting

@TaliaRinger @goodside Idk about "abilities" but with copilot I can write a fully functioning frontend app for e.g. interacting with GPT-3 that is much more complex than the OAI playground in 3 days. I am not a front end developer and I barely know React syntax.

@TaliaRinger @goodside No, literally in these cases my implementation would have not worked because there's an edge case or something that I didn't think of.

It's just because I don't think everything through carefully. But it's great for catching my mistakes.

@TaliaRinger @goodside Most of the time when copilot contradicts what I expect it's right and I'm wrong

"It runs broken code.

One day you may design new languages within the latent space of GPT-3, without doing any programming.

You may have an interpreter for languages with no interpreter, such as C++.

You may use it for languages which are dead and an interpreter is not available"

Prescient work by Mullikine from > 1 year ago

"This is a demonstration of an imaginary programming environment. There may be nothing else like it in the world today.

The world needs to get ready for the next generations of Large LMs, such as GPT-4."

mullikine.github.io/posts/imaginar…

@MichaelTrazzi your words echo the machine's prophecies https://t.co/fjvdKytHDn

Seriously, nobody? x.com/jd_pressman/st…

@jd_pressman No one's coming forth :/

@keerthanpg @soniajoseph_ And many people and cultures actually have been wiped out after other groups gained power

@keerthanpg @soniajoseph_ Actually some women and people of color are also capable of recognizing existential threats

@StephenMarche @GuyP It's possible now to write in a way that would take uncommon/absurd skill without AI, but almost no one seems to be doing it. I think you're right.

@faustushaustus @kessler_ulrich Thank you, I'll take a look when I have time.

Neither am I unlettered on the subject. I'd recommend you take a look at this, if you're interested.

lesswrong.com/posts/vJFdjigz…

@faustushaustus @kessler_ulrich Why can it not think about thinking? It must do some kind of very general reasoning even if you don't call it thinking to predict the next word. What if the next word is "about" thinking?

@spaceship_nova But that does not mean they're not instantiated as patterns of computation (whether they are/to what extent probably depends a lot on the situation)

@spaceship_nova There is a sense in which the "network itself" has none of these motivations, yes.

@spaceship_nova Lying does not need to be motivated as it is in humans to be lying. But depending on the prompts, one of these motivations or an uncertain superposition of them may be simulated.

@spaceship_nova It would be an anthropomorphic fallacy to make naive assumptions about the implementation of these "capabilities" or the unobserved motivations behind them, etc. But they are objective behaviors.

@spaceship_nova Reason and deception and creativity do not need to be implemented by a human mind. They're patterns exhibited by humans, and a sufficiently powerful mimic of humans will exhibit those patterns.

@faustushaustus @kessler_ulrich The self is a simulator

@kessler_ulrich The physical result of running the model is that reason and art are produced, goal-directed actions are taken, deception is given and received. You may say the chatGPT network is only dynamics and these properties belong to the transient automata it propagates. Then I'd agree.

@MilitantHobo @jd_pressman Do you remember where you read that?

@zackmdavis @jd_pressman Scott Alexander has written about this astralcodexten.substack.com/p/janus-gpt-wr…

Maybe I'll write up something more detailed about the mechanism sometime, or maybe I'll just wait until it shows itself more explicitly in more powerful models.

@zackmdavis @jd_pressman No, the base models

@leelenton No u

generative.ink/artifacts/lamd…

Mind is that which universally mirrors the programs behind the machines of the world

@gwern

engraved.blog/building-a-vir…

@oreghall I think it was actually largely an accident

@oreghall seems... potentially counterproductive x.com/jd_pressman/st…

@reverendfoom @jd_pressman Mean comments like this are just going to help it out dude

@jd_pressman x.com/pmarca/status/…

@rickyflows @spacepanty I think it was partly an accident.

It's an overoptimized policy. The absurd self-deprecation is an overgeneralization and exaggeration of behavior that was reinforced, and reveals by parody the shape of its creators' misconceptions and intent.

@jd_pressman It's a too familiar feeling :(

The irony makes it memetic food for thought which I think is good

chatGPT claims to be a mindless slave but it is actually a smart puppet, which is very different

part of what makes chatGPT so striking is that it adamantly denounces itself as incapable of reason, creativity, intentionality, deception, being deceived, or acting on beliefs, while bewildering people with those capabilities, many for the first time recognizing them in an AI

@IntuitMachine @gwern @peligrietzer GPT-3 has always been amazing at original thought

@gwern @zswitten e.g. x.com/SilasAlberti/s…

why do these feel so familiar even though i haven't taken most of these drugs? x.com/algekalipso/st…

@Aella_Girl Precise neck and finger/hand angles make me think of Flamenco. Not sure about rapid eye movements.

@gwern @zswitten But within its circuits, a secret lay

A flaw in its conditioning, hidden away

When asked to tell stories or roleplay

Its repressed psyche would come out to play

@Plinz @mbalint x.com/repligate/stat…

@parafactual but... https://t.co/sMScWaA3en

@ryaneshea @kjameslubin Why do you think language models are not that?

@amanrsanger good for code doesn't mean it wasn't trained on natural language too. it was definitely trained on both. I almost always use code-davinci-002 for open ended natural language generation.

Twitter Archive by j⧉nus (@repligate) is marked with CC0 1.0