@peligrietzer If you intermittently course correct/curate them, they can generate high quality text indefinitely
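A minimal sketch of what intermittent curation could look like as a loop. It assumes a hypothetical `generate` function (any sampler that proposes a continuation) and a hypothetical `choose` function (a human or a model acting as curator). Each round samples several branches and keeps one, so a derailed branch can be dropped before the next round builds on it. The toy stand-ins are illustrative only.

```python
import itertools
from typing import Callable, List


def curated_generation(
    generate: Callable[[str], str],      # hypothetical sampler: text so far -> continuation
    choose: Callable[[List[str]], int],  # hypothetical curator: candidates -> index to keep
    prompt: str,
    steps: int,
    branches: int = 4,
) -> str:
    """Grow `prompt` for `steps` rounds. Each round samples `branches`
    candidate continuations and appends only the one the curator picks."""
    text = prompt
    for _ in range(steps):
        candidates = [generate(text) for _ in range(branches)]
        text += candidates[choose(candidates)]
    return text


# Toy stand-ins (hypothetical): the "model" alternates between a derailed
# and a good continuation; the "curator" keeps the first candidate without "ERR".
_outputs = itertools.cycle(["ERR ", "ok "])


def toy_generate(text: str) -> str:
    return next(_outputs)


def toy_choose(candidates: List[str]) -> int:
    for i, c in enumerate(candidates):
        if "ERR" not in c:
            return i
    return 0  # nothing clean: keep the first candidate anyway


print(curated_generation(toy_generate, toy_choose, "", steps=3))
```

Without the `choose` step, every derailed continuation would stay in `text` and condition all later samples.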

@peligrietzer I think it's almost entirely because of accumulating error, not any other difficulty inherent to "longness" - LLMs continue long _prompts_ just fine. I expect they'll soon be able to do long completions bc error rate will decrease enough.
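A minimal sketch of the accumulating-error argument. It relies on a simplifying assumption that is not claimed in the tweet: each generated token independently derails the text with probability `eps`. Under that model an n-token completion stays clean with probability (1 - eps)^n, and the expected clean length is about 1/eps. Lowering the error rate 10× therefore stretches typical clean completions by roughly 10×. The function names are illustrative.

```python
import math


def p_clean(eps: float, n: int) -> float:
    """Probability that n consecutive tokens contain no derailing error,
    assuming an independent per-token error rate eps (a simplification)."""
    return (1.0 - eps) ** n


def expected_clean_length(eps: float) -> float:
    """Expected number of clean tokens before the first error under the same
    assumption (mean of a geometric distribution), roughly 1/eps."""
    return (1.0 - eps) / eps


def half_life(eps: float) -> float:
    """Completion length at which p_clean falls to 1/2."""
    return math.log(0.5) / math.log(1.0 - eps)


for eps in (1e-2, 1e-3, 1e-4):
    print(f"eps={eps:g}: P(1000 clean tokens)={p_clean(eps, 1000):.3f}, "
          f"expected clean length={expected_clean_length(eps):,.0f}, "
          f"half-life={half_life(eps):,.0f} tokens")
```

Real errors are not independent (one mistake makes the next more likely), so this model only captures the "decreasing error rate lengthens completions" direction of the claim.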

@lxrjl @gwern ohh, i didn't parse 'prompts ~ programs and foundation models ~ compilers' correctly. I think it's a good analogy and I've made it myself. Caveat to the physics analogy is GPT is an "interpreter" of high level code, not simple mechanistic rules

@nickcammarata @zoink great example of lesswrong.com/posts/8PLogvT8…

@GanWeaving joke about the AI hitting on a robot design that's too good and recursively self improving or something like that in simulation

@GanWeaving one of these days it'll no longer be safe to generate this kind of content (search in robot design space) 🤖

@lxrjl @gwern what is this in reference to?

@bakztfuture Try the unaligned gpt-3 (davinci)

@peligrietzer I'd love to do this but old documents are mostly stored in physical copies in archives & what is digitized is hard to turn into plaintext (a lot of it is handwritten)! Maybe soon we'll have OCR pipelines good enough for this to be practical.

@peligrietzer If the LLM is allowed to use chain of thought to hallucinate possible generalizations & unifications of theories and check whether they make sense & produce empirically consistent predictions, it can probably do a lot more than one-step inferences as well

@peligrietzer Which is 1/2 of the initial breakthrough of QM, and the least time/least action analogy may have played into de Broglie's reasoning when he guessed that all matter was waves.
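The least time/least action analogy can be written out in standard textbook form (added here as an illustration, not as a reconstruction of de Broglie's actual derivation). Fermat's principle for light and Maupertuis' principle for a particle at fixed energy $E$ have the same shape, with refractive index $n$ in the role of momentum $p$. Using $n = \lambda_0/\lambda$ for light and de Broglie's $p = h/\lambda$ for matter, both reduce to the same statement about wavelengths along the path:

$$
\delta \int n \, ds = 0 \qquad \left(\text{Fermat: } T = \tfrac{1}{c}\int n \, ds \text{ is stationary}\right)
$$

$$
\delta \int p \, ds = 0 \qquad \left(\text{Maupertuis: abbreviated action at fixed } E \text{ is stationary}\right)
$$

$$
n = \frac{\lambda_0}{\lambda}, \quad p = \frac{h}{\lambda} \quad \Longrightarrow \quad \delta \int \frac{ds}{\lambda} = 0 \text{ in both cases}
$$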

@peligrietzer In the early 1800s Fresnel & others explained the principle of least time mechanistically via wave interference (& showed light is a wave)

Inference conditioned on this information: All matter that appears to obey Newtonian mechanics is a wave, and time for light is a particular case of action

@peligrietzer For example:

The principle of least time for _light_ was known in the 1600s

by the 1700s the principle of least/stationary action was known to hold for systems that obey Newtonian mechanics (which seemed to be everything except light at the time)

@peligrietzer How difficult this will be depends on the "hints" which exist abt the correct form of modern physics in the 19th century - the extent to which it was latent. I think there may be surprisingly many hints.

@peligrietzer "Predict unknown correct theory given historical context" is a function it learns during training and can be conditioned on at inference

@peligrietzer A 19th century LLM learns to predict Newton's work in context of math & physics before Newton, Copernicus in his context, etc; can in theory learn all the convergent reasons theories were right that can be gleaned from prior data: fit to phenomena, simplification/unification.

@peligrietzer I think this would work with a sufficiently powerful LLM.

A corpus implies knowledge beyond that possessed by any of its individual authors. In addition to "raw data" abt phenomena, it contains examples of innovations and syntheses in the context of prior knowledge/techniques.

@reconfigurthing It also has some truly raw one-liners

> But intelligence is only as a denizen of an intelligible abyss.

> those who push for a brute disenchantment—a supposed all-destroying demystification of Forms or Ideas—will be condemned to face a fully enchanted and mystified world.

@reconfigurthing the stuff about language as an algorithm for AGI has hit home so far https://t.co/bHNesDPgHa

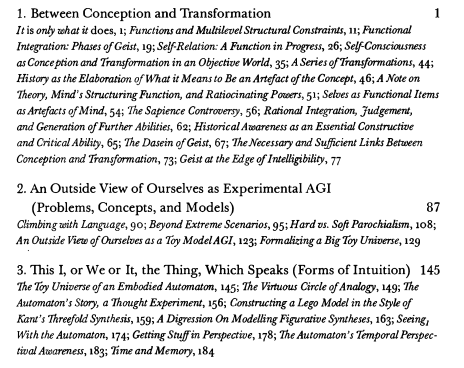

@reconfigurthing The table of contents is epic. So far the actual content of the book hasn't lived up to it, and it makes me want to regenerate the book from the TOC

XD https://t.co/Mmfl9AuUmD

@reconfigurthing I've only read the first chapter so far (I peeked ahead for pictures) and so far it's fascinating and irritating. The philosophy of AGI seems strikingly similar to my own, but it's obfuscated by so much continental philosophy jargon that it often feels like BS

@ezyang I haven't tried auto syncing but copilot was super helpful for converting a large part of a python codebase to javascript. I put the python code in comments above each function & it required basically no oversight after one example in the prompt.
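A minimal sketch of the prompt layout described above, assuming a plain-text completion setup: one worked example (Python in `//` comments followed by its JavaScript port), then the commented Python to translate, ending on the JavaScript function header so the model continues with the body. This is not Copilot's API (Copilot simply reads the open file). `as_js_comments` and `build_translation_prompt` are hypothetical helpers, and the exact layout is an assumption.

```python
def as_js_comments(python_src: str) -> str:
    """Prefix every line of Python source with `//` so it sits in a JS file as a comment."""
    return "\n".join(("// " + line) if line else "//" for line in python_src.splitlines())


def build_translation_prompt(example_py: str, example_js: str,
                             target_py: str, target_header: str) -> str:
    """Lay out one worked example (commented Python, then its JS port),
    then the commented Python to translate, ending on the JS function header
    so a completion model continues with the body."""
    return "\n".join([
        as_js_comments(example_py),
        example_js.rstrip(),
        "",
        as_js_comments(target_py),
        target_header,
    ])


prompt = build_translation_prompt(
    example_py="def add(a, b):\n    return a + b",
    example_js="function add(a, b) {\n  return a + b;\n}",
    target_py="def mul(a, b):\n    return a * b",
    target_header="function mul(a, b) {",
)
print(prompt)
```

Ending the prompt on the target header keeps the model on a single, already-specified function instead of letting it choose what to write next.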

Twitter Archive by j⧉nus (@repligate) is marked with CC0 1.0